WiViK On-screen Keyboard v 3.2 win all serial key or number

WiViK On-screen Keyboard v 3.2 win all serial key or number

nvesinve

/thumb.jpg)

Easydvd.23 multilanguage descargar.womble easydvd v.26 multilanguage winall regged blizzard uploaded: by: scene4all.description: womble easydvd is a dvd authoring tool that makes dvd authoring as simple, quick, and intuitive as possible.wizard dvd.results of step 7 microwin v. Multilanguage download: free download software, free video dowloads, free music downloads, free movie downloads, games.download womble easydvd v.24 multilanguage by adrian dennis torrent from software category on.download the womble mpeg video wizard v 114 multilanguage winall regged blizzard torrent or choose other womble mpeg video wizard v 114.

Download womble easydvd v.24 multilanguage by adrian dennis.wivik on screen keyboard v 3.2 win all 07 34 wivik onscreen.download womble easydvd v 26 multilanguage winall regged blizzard torrent from software category on isohunt.womble easydvd.23 multilanguage descargar.womble easydvd v.26 multilanguage winall regged.wizard dvd v .convert womble easydvd v.26 trail version to full software.womble easydvd v 26 multilanguage winall regged blizzard.with a six step work flow, you will be able.womble mpeg video wizard dvd v multilanguage .womble mpeg video wizard dvd v 2 multilanguage winall regged blizzard posted.womble.

Womble.easydvd.v.26.multilanguage.winall.regged blizzard.womble easydvd v.26 multilanguage winall regged blizzard uploaded: by: scene4all.womble easydvd v. .womble.easydvd.v.multilanguage.winall.regged blizzard 18 torrent download locations.description: womble easydvd is a dvd authoring tool that makes dvd authoring as simple, quick, and intuitive as possible.multilanguage winall regged.with a six step work flow, you will be able to create your own dvd with just a few mouse clicks.womble easydvd v 26 multilanguage winall.create your own dvd in few easy steps with this great application released by team blizzard. As possible.

Multilanguage winall.torrent hash: b495adbdcadae3475db.womble easydvd .23 download from extabit, rapidshare, rapidgator and lumfile womble easydvd .23 netload, uploaded, jumbofiles, glumbouploads.womble easydvd v 26 multilanguage winall regged blizzard years 16 mb.license key: womble easydvd .womble easydvd v.26 serial numbers.womble easydvd v 25 cracked. Low prices.retail.ebook pdfwriters womble.easydvd.v.23.multilanguage.winall.womble easydvd.womble.easydvd.v.26.multilanguage.winall.regged blizzard 18 torrent download locations 1337x.to womble.easydvd.v.26.multilanguage.winall.regged blizzard apps pc software.womble easydvd .23 multilanguage descargar.womble btspread, torrent, magnet, btbtsowthe free online torrent file to magnet link conversion, magnet link to torrent file conversion, search magnet link and.womble.

Easydvd v 25 cracked.use the.womble easydvd v. 09 23.womble mpeg. Zip. Womble easydvd v1.womble easydvd v 25 cracked. Browse. Log in sign up.womble easydvd v.25 multilanguage winall regged blizzard.1 25. Thank you for choosing womble easydvd.womble easydvd is an easy to use dvdn wombleeasydvd125.exe and follow instructions.torrent hash: 32ace73badebccda.blizzard bring us newest version of womble easydvd, available for windows users. Enjoy. Description: womble easydvd is a dvd authoring software that makes dvd authoring as simple, quick, and intuitive as possible.windows.blizzard.details for this torrent.

With Womble easydvd v1.0.1.25 multilanguage winall regged blizzard often seekPopular Downloads:Adobe premierepro english 86x 64x crack works for a lifetimeAms photo collage creator 3.91 software serialNew windows 7 activator workingVegas movie studio hd platinum 11 0 247 keygen free downloadAvast permanent activator final valid until 2050Autocomplete

Autocomplete, or word completion, is a feature in which an application predicts the rest of a word a user is typing. In Android smartphones, this is called predictive text. In graphical user interfaces, users can typically press the tab key to accept a suggestion or the down arrow key to accept one of several.

Autocomplete speeds up human-computer interactions when it correctly predicts the word a user intends to enter after only a few characters have been typed into a text input field. It works best in domains with a limited number of possible words (such as in command line interpreters), when some words are much more common (such as when addressing an e-mail), or writing structured and predictable text (as in source code editors).

Many autocomplete algorithms learn new words after the user has written them a few times, and can suggest alternatives based on the learned habits of the individual user.

Definition[edit]

Original purpose[edit]

The original purpose of word prediction software was to help people with physical disabilities increase their typing speed,[1] as well as to help them decrease the number of keystrokes needed in order to complete a word or a sentence.[2] The need to increase speed is noted by the fact that people who use speech-generating devices generally produce speech at a rate that is less than 10% as fast as people who use oral speech.[3] But the function is also very useful for anybody who writes text, particularly people–such as medical doctors–who frequently use long, hard-to-spell terminology that may be technical or medical in nature.

Description[edit]

Autocomplete or word completion works so that when the writer writes the first letter or letters of a word, the program predicts one or more possible words as choices. If the word he intends to write is included in the list he can select it, for example by using the number keys. If the word that the user wants is not predicted, the writer must enter the next letter of the word. At this time, the word choice(s) is altered so that the words provided begin with the same letters as those that have been selected. When the word that the user wants appears it is selected, and the word is inserted into the text.[4][5] In another form of word prediction, words most likely to follow the just written one are predicted, based on recent word pairs used.[5] Word prediction uses language modeling, where within a set vocabulary the words are most likely to occur are calculated.[6] Along with language modeling, basic word prediction on AAC devices is often coupled with a frecency model, where words the AAC user has used recently and frequently are more likely to be predicted.[3] Word prediction software often also allows the user to enter their own words into the word prediction dictionaries either directly, or by "learning" words that have been written.[4][5] Some search returns related to genitals or other vulgar terms are often omitted from autocompletion technologies, as are morbid terms[7][8]

Standalone tools[edit]

There are standalone tools that add autocomplete functionality to existing applications. These programs monitor user keystrokes and suggests a list of words based on first typed letter(s). Examples are Typingaid and Letmetype.[9][10] LetMeType, freeware, is no longer developed, the author has published the source code and allows anybody to continue development. Typingaid, also freeware, is actively developed. Intellicomplete, both a freeware and payware version, works only in certain programs which hook into the intellicomplete server program.[11] Many Autocomplete programs can also be used to create a Shorthand list. The original autocomplete software was Smartype, which dates back to the late 1980s and is still available today. It was initially developed for medical transcriptionists working in WordPerfect for MS/DOS, but it now functions for any application in any Windows or Web-based program.

Shorthand[edit]

Shorthand, also called Autoreplace, is a related feature that involves automatic replacement of a particular string with another one, usually one that is longer and harder to type, such as "myname" with "Lee John Nikolai François Al Rahman". This can also quietly fix simple typing errors, such as turning "teh" into "the". Several Autocomplete programs, standalone or integrated in text editors, based on word lists, also include a shorthand function for often used phrases.

Context completion[edit]

Context completion is a text editor feature, similar to word completion, which completes words (or entire phrases) based on the current context and context of other similar words within the same document, or within some training data set. The main advantage of context completion is the ability to predict anticipated words more precisely and even with no initial letters. The main disadvantage is the need of a training data set, which is typically larger for context completion than for simpler word completion. Most common use of context completion is seen in advanced programming language editors and IDEs, where training data set is inherently available and context completion makes more sense to the user than broad word completion would.

Line completion is a type of context completion, first introduced by Juraj Simlovic in TED Notepad, in July 2006. The context in line completion is the current line, while current document poses as training data set. When user begins a line which starts with a frequently used phrase, the editor automatically completes it, up to the position where similar lines differ, or proposes a list of common continuations.

Action completion in applications are standalone tools that add autocomplete functionality to an existing applications or all existing applications of an OS, based on the current context. The main advantage of Action completion is the ability to predict anticipated actions. The main disadvantage is the need of a data set. Most common use of Action completion is seen in advanced programming language editors and IDEs. But there are also action completion tools that work globally, in parallel, across all applications of the entire PC without (very) hindering the action completion of the respective applications.

Usage by software[edit]

In web browsers[edit]

In web browsers, autocomplete is done in the address bar (using items from the browser's history) and in text boxes on frequently used pages, such as a search engine's search box. Autocomplete for web addresses is particularly convenient because the full addresses are often long and difficult to type correctly. HTML5 has an autocomplete form attribute.

In e-mail programs[edit]

In e-mail programs autocomplete is typically used to fill in the e-mail addresses of the intended recipients. Generally, there are a small number of frequently used e-mail addresses, hence it is relatively easy to use autocomplete to select among them. Like web addresses, e-mail addresses are often long, hence typing them completely is inconvenient.

For instance, MicrosoftOutlook Express will find addresses based on the name that is used in the address book. Google's Gmail will find addresses by any string that occurs in the address or stored name.

In search engines[edit]

In search engines, autocomplete user interface features provide users with suggested queries or results as they type their query in the search box. This is also commonly called autosuggest or incremental search. This type of search often relies on matching algorithms that forgive entry errors such as phonetic Soundex algorithms or the language independent Levenshtein algorithm. The challenge remains to search large indices or popular query lists in under a few milliseconds so that the user sees results pop up while typing.

Autocomplete can have an adverse effect on individuals and businesses when negative search terms are suggested when a search takes place. Autocomplete has now become a part of reputation management as companies linked to negative search terms such as scam, complaints and fraud seek to alter the results. Google in particular have listed some of the aspects that affect how their algorithm works, but this is an area that is open to manipulation.[12]

In source code editors[edit]

Autocomplete of source code is also known as code completion. In a source code editor autocomplete is greatly simplified by the regular structure of the programming languages. There are usually only a limited number of words meaningful in the current context or namespace, such as names of variables and functions. An example of code completion is Microsoft's IntelliSense design. It involves showing a pop-up list of possible completions for the current input prefix to allow the user to choose the right one. This is particularly useful in object-oriented programming because often the programmer will not know exactly what members a particular class has. Therefore, autocomplete then serves as a form of convenient documentation as well as an input method. Another beneficial feature of autocomplete for source code is that it encourages the programmers to use longer, more descriptive variable names incorporating both lower and upper case letters (CamelCase), hence making the source code more readable. Typing large words with many mixed cases like "numberOfWordsPerParagraph" can be difficult, but Autocomplete allows one to complete typing the word using a fraction of the keystrokes.

In database query tools[edit]

Autocompletion in database query tools allows the user to autocomplete the table names in an SQL statement and column names of the tables referenced in the SQL statement. As text is typed into the editor, the context of the cursor within the SQL statement provides an indication of whether the user needs a table completion or a table column completion. The table completion provides a list of tables available in the database server the user is connected to. The column completion provides a list of columns for only tables referenced in the SQL statement. SQL Server Management Studio provides autocomplete in query tools.[citation needed]

In word processors[edit]

In many word processing programs, autocompletion decreases the amount of time spent typing repetitive words and phrases. The source material for autocompletion is either gathered from the rest of the current document or from a list of common words defined by the user. Currently Apache OpenOffice, Calligra Suite, KOffice, LibreOffice and Microsoft Office include support for this kind of autocompletion, as do advanced text editors such as Emacs and Vim.

- Apache OpenOffice Writer and LibreOffice Writer have a working word completion program that proposes words previously typed in the text, rather than from the whole dictionary

- Microsoft Excel spreadsheet application has a working word completion program that proposes words previously typed in upper cells

In command-line interpreters[edit]

In a command-line interpreter, such as Unix's sh or bash, or Windows's cmd.exe or PowerShell, or in similar command line interfaces, autocomplete of command names and file names may be accomplished by keeping track of all the possible names of things the user may access. Here autocomplete is usually done by pressing the key after typing the first several letters of the word. For example, if the only file in the current directory that starts with x is xLongFileName, the user may prefer to type x and autocomplete to the complete name. If there were another file name or command starting with x in the same scope, the user would type more letters or press the Tab key repeatedly to select the appropriate text.

Efficiency[edit]

Parameters for efficiency[edit]

The efficiency of word completion is based on the average length of the words typed. If, for example, the text consists of programming languages which often have long multi-word names for variables, functions, or classes, completion is both useful and generally applied in editors specially geared towards programmer such as Vim.

In different languages, word lengths can differ dramatically. Picking up on the above example, a soccer player in German is translated as a "Fussballspieler", with a length of 15 characters. This example illustrates that English is not the most efficient language for WC; this study[13] shows an average length for English words in a corpus of over 100,000 words to be 8.93, for Czech to be 10.55 and for German to be 13.24. In addition, in some languages like German called fusional languages as well as agglutinative languages, words can be combined, creating even longer words.

Authors who often use very long words, like medical doctors and chemists, obviously have even more use for Autocomplete (Word completion) software than other authors.

Research[edit]

Although research has shown that word prediction software does decrease the number of keystrokes needed and improves the written productivity of children with disabilities,[1] there are mixed results as to whether or not word prediction actually increases speed of output.[14][15] It is thought that the reason why word prediction does not always increase the rate of text entry is because of the increased cognitive load and requirement to move eye gaze from the keyboard to the monitor.[1]

In order to reduce this cognitive load, parameters such as reducing the list to five likely words, and having a vertical layout of those words may be used.[1] The vertical layout is meant to keep head and eye movements to a minimum, and also gives additional visual cues because the word length becomes apparent.[16] Although many software developers believe that if the word prediction list follows the cursor, that this will reduce eye movements,[1] in a study of children with spina bifida by Tam, Reid, O'Keefe & Nauman (2002) it was shown that typing was more accurate, and that the children also preferred when the list appeared at the bottom edge of the screen, at the midline. Several studies have found that word prediction performance and satisfaction increases when the word list is closer to the keyboard, because of the decreased amount of eye-movements needed.[17]

Software with word prediction is produced by multiple manufacturers. The software can be bought as an add-on to common programs such as Microsoft Word (for example, WordQ+SpeakQ, Typing Assistant,[18] Co:Writer,[citation needed] Wivik,[citation needed] Ghotit Dyslexia),[citation needed] or as one of many features on an AAC device (PRC's Pathfinder,[citation needed] Dynavox Systems,[citation needed] Saltillo's ChatPC products[citation needed]). Some well known programs: Intellicomplete,[citation needed] which is available in both a freeware and a payware version, but works only with programs which are made to work with it. Letmetype[citation needed] and Typingaid[citation needed] are both freeware programs which work in any text editor.

An early version of autocompletion was described in 1967 by H. Christopher Longuet-Higgins in his Computer-Assisted Typewriter (CAT),[19] “such words as ‘BEGIN’ or ‘PROCEDURE’ or identifiers introduced by the programmer, would be automatically completed by the CAT after the programmer had typed only one or two symbols.”

See also[edit]

References[edit]

- ^ abcdeTam, Cynthia; Wells, David (2009). "Evaluating the Benefits of Displaying Word Prediction Lists on a Personal Digital Assistant at the Keyboard Level". Assistive Technology. 21 (3): 105–114. doi:10.1080/10400430903175473. PMID 19908678. S2CID 23183632.

- ^Anson, D., Moist, P., Przywara, M., Wells, H., Saylor, H. & Maxime, H. (2006). The Effects of Word Completion and Word Prediction on Typing Rates Using On-Screen Keyboards. Assistive Technology, 18, 146-154.

- ^ abTrnka, K., Yarrington, J.M. & McCoy, K.F. (2007). The Effects of Word Prediction on Communication Rate for AAC. Proceedings of NAACL HLT 2007, Companion Volume, 173-176.

- ^ abBeukelman, D.R. & Mirenda, P. (2008). Augmentative and Alternative Communication: Supporting Children and Adults with Complex Communication Needs. (3rd Ed.) Baltimore, MD: Brookes Publishing, p. 77.

- ^ abcWitten, I. H.; Darragh, John J. (1992). The reactive keyboard. Cambridge, UK: Cambridge University Press. pp. 43–44. ISBN .

- ^Jelinek, F. (1990). Self-Organized Language Modeling for Speech Recognition. In Waibel, A. & Kai-Fulee, Ed. Morgan, M.B. Readings in Speech Recognition (pp. 450). San Mateo, California: Morgan Kaufmann Publishers, Inc.

- ^Oster, Jan. "Communication, defamation and liability of intermediaries." Legal Studies 35.2 (2015): 348-368

- ^McCulloch, Gretchen (11 February 2019). "Autocomplete Presents the Best Version of You". Wired. Retrieved 11 February 2019.

- ^http://www.autohotkey.com/community/viewtopic.php?f=2&t=53630 TypingAid

- ^"Archived copy". Archived from the original on 2012-05-27. Retrieved 2012-05-09.CS1 maint: archived copy as title (link) LetMeType

- ^http://www.intellicomplete.com/ Autocomplete program with wordlist for medicine

- ^Davids, Neil (2015-06-03). "Changing Autocomplete Search Suggestions". Reputation Station. Retrieved 19 June 2015.

- ^[1]

- ^Dabbagh, H. H. & Damper, R. I. (1985). Average Selection Length and Time as Predictors of Communication Rate. Proceedings of the RESNA 1985 Annual Conference, RESNA Press, 104-106.

- ^Goodenough-Trepagnier, C., & Rosen, M.J. (1988). Predictive Assessment for Communication Aid Prescription: Motor-Determined Maximum Communication Rate. In L.E. Bernstein (Ed.), The vocally impaired: Clinical Practice and Research (pp. 165-185).Philadelphia: Grune & Stratton.; as cited in Tam & Wells (2009), pp. 105-114.

- ^Swiffin, A. L., Arnott, J. L., Pickering, J. A., & Newell, A. F. (1987). Adaptive and predictive techniques in a communication prosthesis. Augmentative and Alternative Communication, 3, 181–191; as cited in Tam & Wells (2009).

- ^Tam, C., Reid, D., Naumann, S., & O’ Keefe, B. (2002). Perceived benefits of word prediction intervention on written productivity in children with spina bifida and hydrocephalus. Occupational Therapy International, 9, 237–255; as cited in Tam & Wells (2009).

- ^http://www.prlog.org/10519217-typing-assistant-new-generation-of-word-prediction-software.html Typing Assistant

- ^Longuet-Higgins, H.C., Ortony, A., The Adaptive Memorization of Sequences, In Machine Intelligence 3, Proceedings of the Third Annual Machine Intelligence Workshop, University of Edinburgh, September 1967. 311-322, Publisher: Edinburgh University Press, 1968

External links[edit]

SAK: Scanning Ambiguous Keyboard for Efficient One-Key Text Entry

MacKenzie, I. S., & Felzer, T. (2010). SAK: Scanning ambiguous keyboard for efficient one-key text entry, ACM Transactions on Computer-Human Interaction, 17, 11:1-11:39. [PDF] [software]

I. Scott MacKenzie1 & Torsten Felzer2

1 Dept. of Computer Science and EngineeringYork University Toronto, Canada M3J 1P3

mack@cse.yorku.ca

2 Darmstadt University of Technology

Institute for Mechatronic Systems in Mechanical Engineering

Petersenstr. 30

D-64287 Darmstadt Germany

felzer@ims.tu-darmstadt.de

AbstractA core human need is to communicate. Whether through speech, text, sound, or even body movement, our ability to communicate by transmitting and receiving information is highly linked to our quality of life, social fabric, and other facets of the human condition. Text, written text, is one of the most common forms of communication. Today, the Internet, mobile phones, computers, and other electronic communicating devices provide the means for hundreds of millions of people to communicate daily using written text. The text in their correspondences is typically created using a keyboard and viewed on a screen.

The design and evaluation of a scanning ambiguous keyboard (SAK) is presented. SAK combines the most demanding requirement of a scanning keyboard – input using one key or switch – with the most appealing feature of an ambiguous keyboard – one key press per letter. The optimal design requires just 1.713 scan steps per character for English text entry. In a provisional evaluation, 12 able-bodied participants each entered 5 blocks of text with the scanning interval decreasing from 1100 ms initially to 700 ms at the end. The average text entry rate in the 5th block was 5.11 wpm with 99% accuracy. One participant performed an additional five blocks of trials and reached an average speed of 9.28 wpm on the 10th block. Afterwards, the usefulness of the approach for persons with severe physical disabilities was shown in a case study with a software implementation of the idea explicitly adapted for that target community.Categories and Subject Descriptors: H.5.2 [Information Interfaces and Presentation]: User Interfaces – input devices and strategies (e.g., mouse, touchscreen); K.4.2 [Computers and Society]: Social Issues – assistive technologies for persons with disabilities; K.8.1 [Personal Computing]: Application Packages– word processing

General Terms: Design, Experimentation, Human Factors

Keywords and Phrases: Text entry, Keyboards, Ambiguous keyboards, Scanning keyboards, Mobile computing, Intentional muscle contractions, Assistive technologies

While the ubiquitous Qwerty keyboard is the standard for desktop and laptop computers, many people use other devices for text entry. People "on the move" are bound to their mobile phones and use them frequently to transmit and receive SMS text messages, or even email. Of the estimated three billion or more text messages sent each day,1 most are entered using the conventional mobile phone keypad. The mobile phone keypad is an example of an "ambiguous keyboard" because multiple letters are grouped on each key. Nevertheless, with the help of some sophisticated software and a built-in dictionary, entry is possible using one keystroke per character.

People with severe disabilities are often impaired in their ability to communicate, for example, using speech. For these individuals, there is a long history of supplanting speech communication with augmentative and alternative communication (AAC) aids. Depending on the disability and the form of communication desired, the aids often involve a sophisticated combining of symbols, phonemes, and other communication elements to form words or phrases (Brandenberg & Vanderheiden, 1988; Hochstein & McDaniel, 2004). Computer access for users with a motor impairment may preclude use of a physical keyboard, such as a Qwerty keyboard or a mobile phone keypad, or operation of a computer mouse. In such cases, the user may be constrained to providing computer input using a single button, key, or switch. To the extent there is a keyboard, it is likely a visual representation on a display, rather than a set of physical keys. Letters, or groups of letters, on the visual keyboard are highlighted sequentially ("scanned") with entry using a series of selections – all using just one switch – to narrow in on and select the desired letter. This is the essence of a "scanning keyboard".

In this paper, we present SAK, a scanning ambiguous keyboard. SAK combines the best of scanning keyboards and ambiguous keyboards. SAK designs use just a single key or switch (like scanning keyboards) and allow text to be entered using a single selection per character (like ambiguous keyboards). The result is an efficient one-key text entry method.

The rest of this paper is organized as follows. First, we summarize the operation of scanning keyboards and ambiguous keyboards. Then, we detail the SAK concept, giving the central operating details. Following this, a model is developed to explore the SAK design space. The model allows alternative designs to be quantified and compared. With this information we present the initial SAK design chosen for "test of concept" evaluation. Our methodology is described with results given and discussed. Following this, a full implementation is presented along with a case study of its use with a member of the target community. Concluding remarks summarize the contribution and identify issues for further research.

1.1 Scanning Keyboards

The idea behind scanning keyboards is to combine a visual keyboard with a single key, button, or switch for input. The keyboard is divided into selectable regions which are sequentially highlighted, or scanned. Scanning is typically automatic, controlled by a software timer, but self-paced scanning is also possible. In this case, the highlighted region is advanced as triggered by an explicit user action (e.g., Felzer, Strah, & Nordmann, 2008).

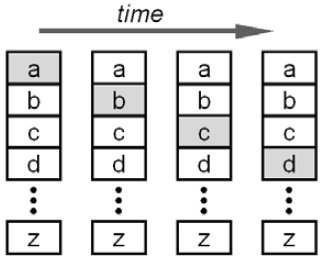

When the region containing the desired character is highlighted, the user selects it by activating the input switch. The general idea is shown in Figure 1a. Obviously, the rate of scanning is important, since the user must press and release the switch within the scanning interval for a highlighted region. Scan step intervals in the literature range from 0.3 seconds (Miró & Bernabeu, 2008) to about 5 seconds.

(a)

(b)

Figure 1. Scanning keyboard concept. (a) linear scanning (b) row-column scanning

Regardless of the scanning interval, the linear scanning sequence in Figure 1a is slow. For example, it takes 26 scan steps to reach "z". To address this, scanning keyboards typically employ some form of multi-level, or divide-and-conquer, scanning. Figure 1b shows row-column scanning. Scanning proceeds row-to-row. When the row containing the desired letter is highlighted, it is selected. Scanning then enters the row and proceeds left to right within the row. When the desired letter is highlighted, it is selected. Clearly, this is an improvement. The letter "j", for example, is selected in 5 scan steps in Figure 1b: 3 row scans + 2 column scans. Bear in mind that while row-column scanning reduces the scan steps, it increases the number of motor responses. The latter may be a limiting factor for some users.

When a letter is selected, scanning reverts to the home position to begin the next scan sequence, row to row, and so on. This behaviour is a necessary by-product of row-column selection, since continuing the scan at the next letter negates the performance advantage of two-tier selection.

Row-column scanning is also slow. To speed-up entry, numerous techniques have been investigated. These fall into several categories, including the use of different letter or row-column arrangements, word or phrase prediction, and adjusting the scanning interval.2

The most obvious improvement for row-column scanning is to rearrange letters by placing frequent letters near the beginning of the scan sequence, such as in the first row or in the first position in a column. There are dozens of research papers investigating this idea, many dating to the 1970s or 1980s (e.g., Damper, 1984; Doubler, Childress, & Strysik, 1978; Heckathorne & Childress, 1983, June; Treviranus & Tannock, 1987; Vanderheiden, Volk, & Geisler, 1974). Some of the more recent efforts are hereby cited (Baljko & Tam, 2006; Jones, 1998; Lesher, Moulton, & Higginbotham, 1998; Lin, Wu, Chen, Yeh, & Wang, 2008; Steriadis & Constantinou, 2003; Venkatagiri, 1999). Dynamic techniques have also been tried, whereby the position of letters varies depending on previous letters entered and the statistical properties of the language (Lesher et al., 1998; Miró & Bernabeu, 2008; Wandmacher, Antoine, Poirier, & Depart, 2008).

A performance improvement may also emerge using a 3-level or higher selection scheme, also known as block, group, or quadrant scanning (Bhattacharya, Samanta, & Basu, 2008a, 2008b; Felzer & Rinderknecht, 2009; Lin, Chen, Wu, Yeh, & Wang, 2007). The general idea is to scan through a block of items (perhaps a group of rows). The first selection enters a block. Scanning then proceeds among smaller blocks within the selected block. The second selection enters one of the smaller blocks and the third selection chooses an item within that block. Much like nested menus in graphical user interfaces, there is a tradeoff between the number of levels to traverse and the number of items to traverse in each level. Block scanning is most useful to provide access to a large number of items (Bhattacharya et al., 2008a; Shein, 1997). Regardless of the scanning organization, the goal is usually to reduce the total number of scan steps to reach the desired character.

Reducing the scanning interval is another way to increase the text entry rate, but this comes at the cost of higher error rates or missed opportunities for selection. Furthermore, users with reduced motor facility are often simply not able to work with a short scanning interval. One possibility is to dynamically adjust the system's scanning interval. Decisions to increase or decrease the scanning interval can be based on previous user performance, including text entry throughput, error rate, or reaction time (Lesher, Higginbotham, & Moulton, 2000; Lesher, Moulton, Higginbotham, & Brenna, 2002; Simpson & Koester, 1999).

Word or phrase prediction or completion is also widely used to improve text entry throughput (Jones, 1998; Miró & Bernabeu, 2008; Shein et al., 1991; Trnka, McCaw, Yarrington, McCoy, & Pennington, 2009). As a word is entered, the current word stem is used to build a list of matching complete words. The list is displayed in a dedicated region of the keyboard with a mechanism provided to select the word early.

We should be clear that all the techniques just described are variations on "divide and conquer". For any given entry, the first selection chooses a group of items and the next selection chooses within the group. SAK is different. Although SAK designs use scanning, each letter is chosen with a single selection. In this sense, SAK designs are more like ambiguous keyboards.

1.2 Ambiguous Keyboards

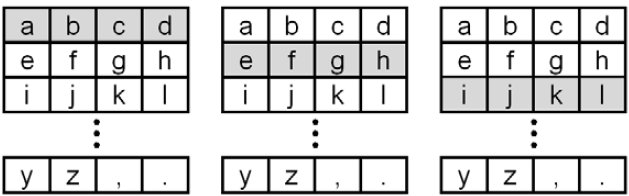

Ambiguous keyboards are arrangements of keys, whether physical or virtual, with more than one letter per key. The most obvious example is the phone keypad which positions the 26 letters of the English alphabet across eight keys (see Figure 2a).

(a)

(b)

Figure 2. Examples of ambiguous keyboards (a) phone keypad (b) research prototype with 26 letters on 3 keys (from Harbusch & Kühn, 2003a)

From as early as the 1970s it was recognized that the phone keypad could be used to enter text with one key press per letter (Smith & Goodwin, 1971). Today, the idea is most closely associated with "T9", the "text on 9 keys" technology developed and licensed by Tegic Communications and widely used on mobile phones.3 Due to the inherent ambiguity of a key press, a built-in dictionary is required to map key sequences to matching words. If multiple words match the key sequence, they are offered to the user as a list, ordered by decreasing likelihood. Interestingly enough, for English text entry on a phone keypad, there is very little overhead in accessing ambiguous words. One estimate is that 95% of words in English can be entered unambiguously using a phone keypad (Silfverberg, MacKenzie, & Korhonen, 2000).

There is a substantial body of research on ambiguous keyboards. As noted in a recent survey (MacKenzie & Tanaka-Ishii, 2007), the goal is usually to employ fewer keys or to redistribute letters on the keys to reduce the ambiguity. One such example is shown in Figure 2b where 26 letters are positioned on just three keys (Harbusch & Kühn, 2003b). Clearly, this design yields greater ambiguity. The unusual letter groupings are an effort to reduce the ambiguity by exploiting the statistical properties of the language.

All ambiguous keyboards in use involve either multiple physical keys or multiple virtual buttons that are randomly accessed ("pressed") by a finger or stylus, or clicked on using the mouse pointer. In 1998, Tegic Communications' Kushler noted the possibility of combining scanning with a phone-like ambiguous keyboard (Kushler, 1998). However, the idea was not developed with reduced-key configurations, nor was a system implemented or tested. There are at least two examples in the literature that come close to the SAK designs discussed here, but both involve more than one physical button. Harbusch and Kühn (2003a) describe a method using two physical buttons to "step scan" through an ambiguous keyboard. One button advances the focus point while a separate physical button selects virtual letter keys. Venkatagiri (1999) proposed two virtual ambiguous keyboards with scanning using either three or four letters per key. Separate physical buttons were proposed to explicitly choose the intended letter on a selected key. Both papers just cited present models only. No user studies were performed, nor were the designs actually implemented.

By adding scanning to an ambiguous keyboard with the one-switch constraint, we arrive at an interesting juncture in the design space. A "scanning ambiguous keyboard" (SAK) is a keyboard with scanned but static keys that combines the most demanding requirement of a scanning keyboard – input using one key or switch – with the most appealing feature of an ambiguous keyboard – one key press per letter. However, putting the pieces together to arrive at a viable, perhaps optimal, design requires further analysis. We need a way to characterize and quantify design alternatives to tease out strengths and weaknesses. For this, a model is required.

A model is a simplification of reality. Just as architects build models to explore design issues in advance of constructing a building, HCI researchers build interaction models to explore design scenarios in advance of prototyping and testing. In this section, we develop a model for scanning ambiguous keyboards. The model includes the following components:

- Keyboard layout and scanning pattern

- Dictionary (words and word frequencies)

- Interaction methods

- Measures to characterize scanning keyboards

2.1 Keyboard Layout and Scanning Pattern

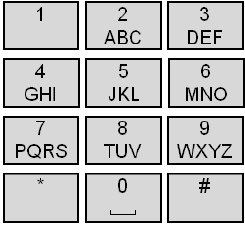

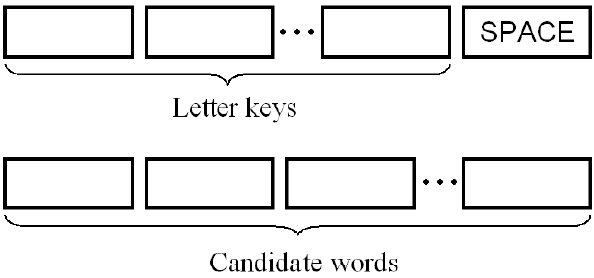

Figure 3 shows the general idea for a scanning ambiguous keyboard. Of course, the keyboard is virtual in that it is displayed on a screen, rather than implemented as physical keys. Physical input uses a single key, button, or switch. The keyboard includes two regions: a letter-selection region (top) and a word-selection region (bottom). The design is ambiguous, meaning letters are distributed over a small number of keys, with multiple letters per key.

Figure 3. Scanning ambiguous keyboard concept. There is a letter-selection region (top) and a word-selection region (bottom).

Scanning begins in the letter-selection region, proceeding left-to-right, repeating. Activating the physical key when the desired letter key is highlighted makes a selection. There is only one selection per letter.

One novel feature of SAK designs is that focus does not "snap to home" upon selecting a letter. Rather, focus proceeds in a cyclic pattern, advancing to the next key with each selection. Since SAK designs do not use multi-tier selection, there is no need to revert to a higher tier (e.g., the first row) after a selection within a row. Arguably, the cyclic scanning pattern used in SAK designs is intuitive and natural, since it does not involve transitions between, for example, a top tier and a bottom tier. However, cyclic scanning is predicated on using an alphabetic letter ordering, where there is no advantage in returning to a home position. So, there are design issues to consider in choosing a cyclic vs. snap-to-home scanning pattern. The SAK designs considered here assume a cyclic scanning pattern.

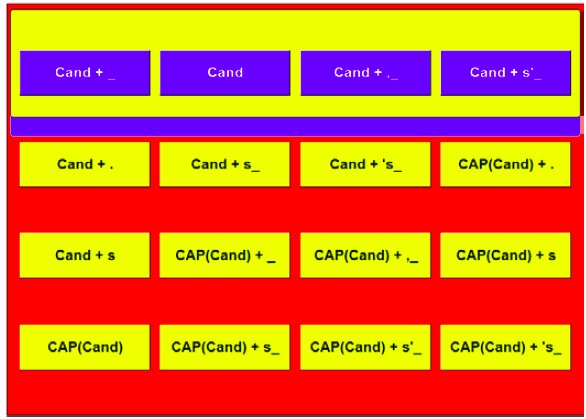

After a word is fully entered (or partly entered, see below), the SPACE key is selected. Scanning switches to the word-selection region, whereupon the desired word is selected when highlighted. A SPACE character is automatically appended. After word selection, scanning reverts to the letter-selection region for input of the next word.

The word-selection region contains a list of candidate words, drawn from the system's dictionary, and ordered by their frequency in a language corpus. The list is updated with each selection in the letter-selection region based on the current stem. The candidate list is organized in two parts. The first presents words exactly matching the current key sequence. The second presents extended words, where the current key sequence is treated as the word stem.

The inherent ambiguity of letter selection means the list size is often >1, even if the full word is entered. The words are ordered by decreasing probability in the language; so, hopefully, the desired word is at or near the front of the list. An example is given shortly.

2.2 Dictionary

Systems with ambiguous keyboards require a built-in dictionary to disambiguate key presses. The dictionary is a list of words and word frequencies derived from a corpus. The present research used a version of the British National Corpus (ftp://ftp.itri.bton.ac.uk/) containing about 68,000,000 total words. From this, a list of unique words and their frequencies was compiled and from this list the top 9,022 words were used for our dictionary.

2.2.1 Non-Dictionary Words

The core design of SAK only works with dictionary words. Of course, a mechanism must be available to enter non-dictionary words (which, presumably, are then added to the dictionary). Most scanning keyboards operate with a basic text entry mode, but implement some form of "escape mechanism" or "mode switch" to enter other modes. Additional modes are necessary for a variety of purposes, such as correcting errors, adding punctuation characters or special symbols, selecting predicted words or phrases, changing the system's configuration parameters, or switching to other applications.

There are at least three commonly used mechanisms for mode switching with scanning keyboards: (i) using an additional input switch (Baljko & Tam, 2006; Shein, Galvin, Hamann, & Treviranus, 1994), (ii) using a dedicated "mode" key on the virtual keyboard (Bhattacharya et al., 2008b; Jones, 1998), or (iii) pressing and holding the primary input switch for an extended period of time, say, 2-3 seconds (Jones, 1998; Miró & Bernabeu, 2008). A desirable feature of SAK is to maintain a short scanning sequence in the letter-selection region; so, adding even one additional virtual key is not considered a viable option, because of the impact on text entry throughput. An example method for mode switching with SAK designs is given in Section 5 (see "Escape Mode" on p. 33).

Returning to the central idea of SAK, the system works with words and word frequencies, but also requires a keystroke rendering of each word to determine the candidate words for each key sequence. An example will help.

2.2.2 Example

Let's assume – arbitrarily for the moment – that we're interested in a SAK design using four letter keys with letters distributed as in Figure 4.

Figure 4. Example letter-selection region with letters on four virtual keys

If the user wished to enter "sword", the key selections are 34331. As each letter is (ambiguously) selected in the letter-selection region, a candidate list is produced in the word-selection region. Figure 5 gives the general idea of how the list progresses as entry proceeds. (The example shows only the first five words in the candidate list. In practice, a larger candidate list is needed for highly ambiguous keyboards.) The desired word (underlined) appears in the fourth position with the 4th keystroke and in the first position with the 5th keystroke.

| Keys | Candidate Words |

|---|---|

| 3 | the of to on that |

| 34 | tv oz ux two own |

| 343 | two system systems type types |

| 3433 | system systems systematic sword systematically |

| 34331 | sword system systems systematic systematically |

One interaction strategy to consider is selecting a word early, after the 4th keystroke in the example. There may be a performance benefit, but this will depend on the keyboard layout and scanning pattern, which in turn determines the number of scan steps required for alternative strategies. Note in Figure 5, that "the" appears at the front of the candidate list after the first keystroke. So, "the" can be entered with two selections in the letter-selection region (3, SPACE) followed immediately by selecting the first candidate in the word-selection region. Here, there is clearly a performance benefit.

2.3 Interaction Methods

The discussion above suggests that SAK designs offer users choices in the way they enter text. To facilitate our goal of deriving a model, four interaction methods are proposed.

2.3.1 OLPS – One-Letter-Per-Scan Method

With the OLPS method, users select one letter per scan sequence. The example layout in Figure 4 has five keys (4 letter keys, plus SPACE) therefore five scan steps are required for each letter in a word. Four scan steps are passive, while one is active – a user selection on the key bearing the desired letter.

2.3.2 MLPS – Multiple-Letter-Per-Scan Method

With the MLPS method, users take the opportunity to select multiple letters per scan sequence, depending on the word. For the layout in Figure 4, the word "city" has keystrokes 1234 – no need to wait for passive scan steps between the letters. Four successive selections, followed by a fifth selection on SPACE, followed immediately by selecting "city" in the candidate list (it will be the first entry) and the word is entered. For other words, such as "zone" (4321), the MLPS opportunity does not arise.

2.3.3 DLPK – Double-Letter-Per-Key Method

With the DLPK method, users may make double selections in a single scan step interval if two letters are on the same key. Using our example layout, "into" has keystrokes 2233. Considering the five scan steps traversing the keys in Figure 4, the DLPK method for "into" requires just a single pass through the scanning sequence: pause, select-select (22), select-select (33), pause, select (SPACE), select ("into"). The utility of the DLPK method will depend on the scanning interval and on the user's ability to make two selections within the scanning interval.

2.3.4 OW – Optimized-Word Method

With the OW method, users seize opportunities to optimize by making an early selection if the desired word appears in the candidate list before all letters are entered and if there is a performance benefit over the alternative strategy of simply continuing to select keys until all letters are entered. An extreme example was noted above for "the" – the most common word in English. As it turns out, the OW method offers a performance benefit for many common words in English. Referring again to Figure 5, "of", "to", "on", and "that" – all very common words – appear with the first key selection. Bear in mind that the cost of not performing early selection is at least one more pass through the letter-selection scanning sequence.

The interaction methods just described are progressive in that the first method is easiest to use, but slow. The last method is hardest to use, but fast. Users need not commit to any one method. The methods are simply ways to characterize the sort of interaction behaviours users are likely to exhibit. Most likely, users will mix the methods, but, with experience, will migrate to behaviours producing performance benefits.

We are in a position now to consider design alternatives to the "arbitrary" letter assignment in Figure 4. There is clearly a tradeoff between having fewer keys with more letters/key and having more keys with fewer letters/key. With fewer keys, the number of scan steps is reduced but longer candidate lists are produced. With more keys, the number of scan steps is increased while shortening the candidate lists. However, one component in our model is still missing. We need a way to characterize and quantify scanning ambiguous keyboards of the sort described here. We need a measure that can be calculated for a variety of design and interaction scenarios and that can be used to make informed comparisons and choices between alternative designs.

2.4 Characteristic and Performance Measures

Measures for text entry are of two types: characteristic measures and performance measures. Characteristic measures describe a text entry method without measuring users' actual use of the method. They tend to be theoretical – describing and characterizing a method under circumstances defined a priori. Performance measures capture and quantify users' proficiency in using a method. Often, a measure can be both. For example, text entry speed, in words per minute, may be calculated based on a defined model of interaction and then measured later with users. In this section we present three new measures for text entry with scanning keyboards. Two are characteristic measures, but can be measured as well; one is a performance measure.

First we mention KSPC, for "keystrokes per character", as a characteristic measure applicable to numerous text entry methods. KSPC is the number of keystrokes required, on average, to generate a character of text for a given text entry technique in a given language (MacKenzie, 2002a; Rau & Skiena, 1994). For conventional text entry using a Qwerty keyboard, KSPC = 1 since each keystroke generates a character. However, other keyboards and methods yield KSPC < 1 or KSPC > 1. Word completion or word prediction techniques tend to push KSPC down, because they allow entry of words or phrases using fewer keystrokes than characters. On the other hand, ambiguous keyboards, such as a mobile phone keypad, tend to push KSPC up. For example, a phone keypad used for English text entry has KSPC ≈ 2.03 when using the multitap input method or KSPC ≈ 1.01 when using dictionary-based disambiguation (MacKenzie, 2002a).

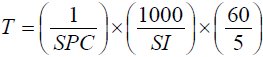

2.4.1 Scan Steps Per Character (SPC)

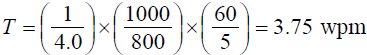

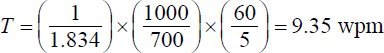

"Scan steps per character" (SPC) is proposed here as a characteristic measure for scanning keyboards. SPC is similar to KSPC. SPC is the number of scan steps, on average, to enter a character of text using a given scanning keyboard in a given language. SPC includes both passive scan steps (no user action) and active scan steps (user selection). A feature of SPC is that it directly maps to text entry throughput, T, in words per minute, given a scanning interval, SI, in milliseconds:

| (1) |

The first term converts "scan steps per character" into "characters per scan step". Multiplying by the second term yields "characters per second" and by the third term "words per minute".4 For example, if the scanning interval is, say, 800 ms, and SPC = 4.0, then

| (2) |

Of course, this assumes the user "keeps up" – performs selections according to the interaction method used in the SPC calculation.

Figure 6 demonstrates the scan step sequences for entering "computer" (13233313) using each of the four interaction methods described above. Again, Figure 4 serves as the example design. The scan step sequence (2nd column) shows a lowercase letter for selecting a key bearing the indicated letter (or sometimes a double selection for the DLPK and OW methods). A period (".") is a passive scan step, or a scan step interval without a selection. "S" is a selection on the SPACE key and "W" is a word-selection. The scan count is simply the number of scan steps. SPC is the scan count divided by nine, the number of characters in "computer" +1 for a terminating SPACE. (SPS is discussed in the next section.)

| Method | Scan Step Sequence | Scan Count | SPC | SPS |

|---|---|---|---|---|

| OLPS | 41 | 4.56 | 0.238 | |

| MLPS | 26 | 2.89 | 0.385 | |

| DLPK | 21 | 2.33 | 0.476 | |

| OW | 14 | 1.56 | 0.500 |

The figure demonstrates a progressive reduction in SPC as the interaction method becomes more sophisticated. With the OLPS method, only one letter is selected per scan step. Since "computer" has eight letters, 8 × 5 = 40 scan steps are required, plus a final active scan step to select the word in the candidate list. As word selection ("W") immediately follows SPACE ("S"), evidently the word was at the front of the candidate list. For the MLPS method, "o", "p", and "r" are entered in the same scan sequence as the preceding letter, thus saving 3 × 5 = 15 scan steps. The DLPK method improves on this by combining "p" and "u" in a double-selection, since they are on the same key. (Only the first letter, "p", is shown in the figure.) Five scan steps are saved.

A further improvement is afforded by the OW method. After double selecting "pu", "computer" appears in the candidate list in the fourth position. The opportunity is taken, producing a further savings of 7 scan steps. As an aside, the words preceding "computer" in the candidate list at this point were "bonus", "donor", and "control" (not shown). Because "bonus" and "donor" are exact matches with the key sequence 13233, they are at the front of the list. "control", "computer", and a few other words, follow as possible extended words matching the current numeric word stem. They are ordered by their frequencies in the dictionary.

2.4.2 Selections Per Scan Step (SPS)

Although reducing scan steps per character (SPC) is an admirable pursuit, there is a downside. To capture this, we introduce SPS, for "selections per scan step". SPS is the number of selections divided by the total number of scan steps. It can be computed for individual words or phrases, or as an overall weighted average for a given scanning keyboard, interaction method, and language.

As seen in the two right-hand columns of Figure 6, as SPC decreases, SPS increases. SPS captures, to some extent, the cognitive or motor demand on users. While passive scan steps may seem like a waste, they offer users valuable time to rest or to think through the spelling and interactions necessary to convey their message. Although this is a moot point for highly inefficient scanning methods, as depicted in Figure 1, it becomes relevant as more ambitious designs are considered – designs that push SPC down. With the OW method in Figure 6, SPS = 0.500. One selection for every two scan steps may not seem like much; however, if taking opportunities to quicken interaction involves, for example, viewing a list of candidate words, then cognitive demand may be substantial.

2.4.3 Scanning Efficiency (SI)

The OW scan sequence for "computer" in Figure 6 represents the absolute minimum number of scan steps for which this word can be entered. This is true according to the embedded dictionary and the defined layout and behaviour of the example SAK. The other scan sequences represent less efficient entry. The distinction is important when considering overall performance or when analyzing user behaviour and learning patterns. For example, the phrase "the quick brown fox jumps over the lazy dog" requires only 92 scan steps if all opportunities to optimize are taken. Will users actually demonstrate this behaviour? Probably not. Will they eventually, or occasionally, demonstrate this behaviour as expertise evolves? Perhaps.

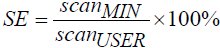

To capture user performance while using scanning keyboards, we introduce Scanning Efficiency (SE) as a human performance measure:

| (3) |

For example, if a user was observed to enter the quick-brown-fox phrase in, say, 108 scan steps, then

| (4) |

As expertise develops and performance improves toward optimal behaviour, SE will increase toward 100%. SE can be computed for the entry of single words, entire phrases, or as an overall human performance measure of user efficiency while using a scanning keyboard for text entry.

2.5 Searching for an Optimal Scanning Ambiguous Keyboard

Given the components of the model described above, software tools were built to search for an optimal scanning ambiguous keyboard. "Optimal", we define as a design that minimizes scan steps per character (SPC). Whether the scanning interval is 1 second or 5 seconds, a design with a lower SPC will produce a higher text entry throughput than a design with a higher SPC, all else being equal.

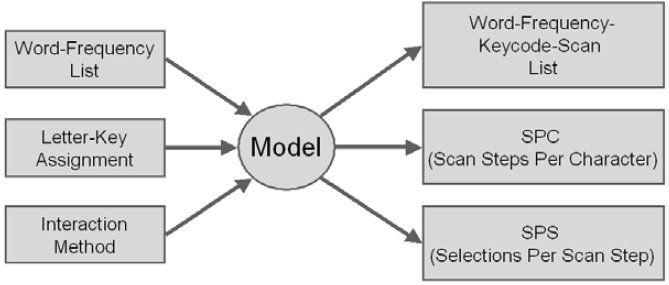

Figure 7 illustrates the general operation of the model. Three inputs are required: a dictionary in the form of a word-frequency list, a letter-key assignment (e.g., as per Figure 4), and a specification of the interaction method. The interaction method is OLPS (one letter per scan), MLPS (multiple letters per scan), DLPK (double letters per key), or OW (optimized word), as discussed earlier. For the dictionary, the 9,022-word list noted earlier was used. The letter-key assignment is used, among other things, to develop numeric keycodes for words in the dictionary. These are added to the word-frequency list to form a word-frequency-keycode list.

Figure 7. Modeling tool for scanning ambiguous keyboards.

Given the word-frequency-keycode list, a scan step sequence is built for every word in the dictionary for the specified input method. The result is a word-frequency-keycode-scan list. The scan steps for "computer" were given earlier for all four methods (see Figure 6). Generating the candidate list for the OW method is somewhat involved, due to ambiguity in the keycodes. The list must be built after every key selection. If the word appears in the list, a decision is required on whether to choose early selection, if there is a performance benefit, or to continue with the next selection. From the word-frequency-keycode-scan list, SPC and SPS are then computed as a weighted average over the entire dictionary. The process is then repeated for other letter-key assignments.

The calculations described above are but one part of a complex design space. Although the model is highly flexible, the search was constrained to designs placing letters on 2, 3, 4, 5, or 6 keys. These designs span a range that avoids absurdly long candidate lists (e.g., a 1-key design) while maintaining a reasonably small number of scan steps across the letter-selection region.

The search was further constrained to alphabetic letter arrangements only. Relaxing this constraint not only causes an explosion in the number of alternative designs, it also produces designs that increase the cognitive demand on users who must confront an unfamiliar letter arrangement. While designs with optimized letter arrangements often yield good predictions "on paper", they typically fail to yield performance benefits for users (e.g., Baljko & Tam, 2006; Bellman & MacKenzie, 1998; MacKenzie, 2002b; Miró & Bernabeu, 2008; Pavlovych & Stuerzlinger, 2003; Ryu & Cruz, 2005).

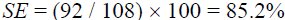

Even with the constraints described above, the search space is substantial because of the number of ways letters may be assigned across keys. In particular, if n letters are assigned in alphabetic order across m keys, the number of assignments (N) is

| (5) |

For 26 letters assigned across 2, 3, 4, 5, or 6 keys, the number of letter-key assignments is 25, 300, 2300, 12650, and 53130, respectively.

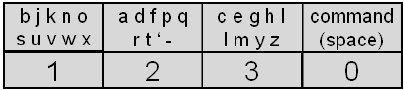

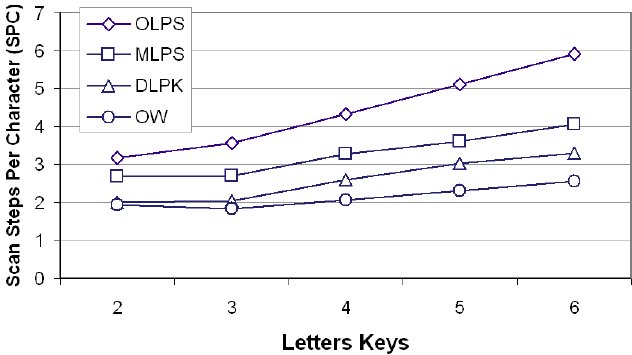

An exhaustive search was undertaken to find the letter-key assignment generating the lowest SPC for each interaction method (OLPS, MLPS, DLPK, OW) for alphabetic assignments over 2, 3, 4, 5, and 6 keys. The results are shown in Figure 8. The range is from SPC = 5.91, using the OLPS method with six letter keys, down to SPC = 1.834, using the OW method with three letter keys.

Figure 8. Minimized scan steps per character (SPC) for the OLPS, MLPS, DLPK, and OW interaction methods for designs with 2 through 6 letter keys.

Note that for each column in Figure 8, the letter-key assignment yielding the lowest SPC was slightly different among the input methods. Nevertheless, there was a coalescing of results: Designs generating the lowest, or near lowest, SPC for one interaction method faired similarly for the other methods. Figure 9 gives the chosen optimal design for each size. The assignment chosen in each case was based on the OW method. The SPC and SPS statistics are also provided.

| Letter Keys | Letter-Key Assignment | SPC | SPS |

|---|---|---|---|

| 6 | abcdefgh-ijkl-mno-pqr-stu-vwxyz | 2.551 | 0.3521 |

| 5 | abcdefgh-ijklm-nopqr-stu-vwxyz | 2.299 | 0.4072 |

| 4 | abcdefgh-ijklm-nopqr-stuvwxyz | 2.066 | 0.4771 |

| 3 | abcdefgh-ijklmnop-qrstuvwxyz | 1.834 | 0.5765 |

| 2 | abcdefghijklm-nopqrstuvwxyz | 1.927 | 0.5830 |

SPC decreases reading down the table, but experiences an increase at two letter keys. This is due to the increased ambiguity of placing 26 letters on two keys. The result is longer candidate lists with more passive scan steps required to reach the desired words. The similarity in the designs is interesting. For designs with 3 through 6 letter keys, the first break is after "h", and for designs 4 and 5 the next two breaks are after "m" and "r". This is likely due to the performance benefits in selecting or double selecting letters early in the scanning sequence, to avoid an additional pass if possible.

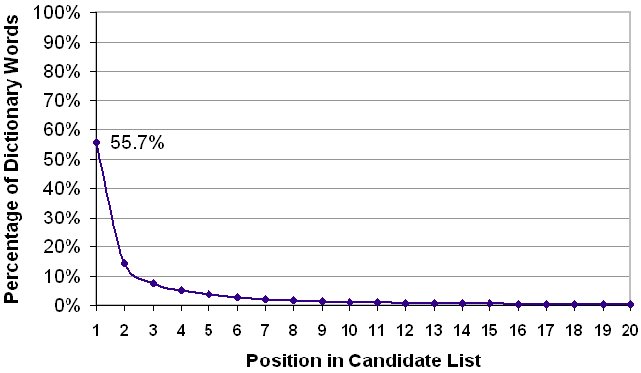

2.5.1 Ambiguity Analysis

The specter of highly ambiguous words and long candidate lists is unavoidable with ambiguous keyboards, particularly if letters are positioned on just a few keys. The SPC calculation described above accounts for this, but does so only as an overall average for text entry using a particular letter-key assignment and the built-in dictionary. While SPC < 2 is remarkably low, we are still left wondering: How long are the candidate lists? What is the typical length? What is the worst case? Figure 10 offers some insight for the design in Figure 9 using three letter keys (SPC = 1.834). The analysis uses the 9,022 unique words from the corpus noted earlier. Evidently, 55.7% of the words are at the front of the candidate list when word selection begins. This figure includes both unambiguous words (41.5%) and those ambiguous words that are the most frequent of the alternatives (14.2%). The pattern follows a well known relationship in linguistics known as Zipf's law, where a small number of frequently used language elements cover a high percentage of all elements in use (e.g., Wobbrock, Myers, & Chau, 2006; Zhai & Kristensson, 2003).

Figure 10. Percentage of dictionary words at each position in the candidate list. The analysis is for the design in Figure 9 with three letter keys using a 9022-word dictionary.

Considering that the three-letter-key design requires four scan steps for a single pass through the letter-selection region, the cost of having words in positions 1 through 4 in the candidate list is minor. As a cumulative figure, 82.2% of the words appear in the candidate list at position 4 or better. The same figures are 94.8% at position 10 and 99.6% at position 20. So, very long candidate lists are rare. But, still, they will occasionally occur. As an example of position 20, if a user wished to enter "Alas, I am dying beyond my means",5 after entering 1213 for "alas", the candidate list would be quite tedious: { does, body, goes, boat, dogs, diet, flat, coat, andy, gift, flew, ends, aids, alex, clay, bias, blew, gods, dies, alas }. This is an extreme example. In this case, the poor position of "alas" is compensated for, at least in part, by the good position of the other words: "I" (1), "am" (4), "dying" (6), "beyond" (2), "my" (4), "means" (1). The worst case for the test dictionary is "dine" (1221) at position 30. Matching more-probable candidates include "file", "gold", "cope", "golf", and so on.

2.5.2 Corpus Effect

It is worth considering the effect of the dictionary on the SPC calculation. If the dictionary had substantially more words or if it were derived from a different corpus, what is the impact on SPC? To investigate this, the SPC values in Figure 9 were re-calculated using two additional word-frequency lists. One was a much larger version of the British National Corpus containing 64,588 unique words (BNC-2). Another was the well-known Brown corpus of American English (Kucera & Francis, 1967) containing about 41,000 unique words from a sample of about one million words. The results are shown in Figure 11. For comparison, the top row (BNC-1) gives the SPC values from Figure 9.

| Corpus | Unique Words | Letter Keys | ||||

|---|---|---|---|---|---|---|

| 2 | 3 | 4 | 5 | 6 | ||

| BNC-1 | 9,025 | 1.927 | 1.834 | 2.066 | 2.299 | 2.551 |

| BNC-2 | 64,588 | 2.160 | 1.905 | 2.136 | 2.383 | 2.611 |

| Brown | 41,532 | 2.373 | 2.027 | 2.298 | 2.600 | 2.848 |

The most reassuring observation in Figure 11 is that substantially larger dictionaries do not cause an untoward degradation in performance. For the three-letter-key design, increasing the dictionary from 9,022 words (BNC-1) to 64,588 words (BNC-2) produced only a 3.9% increase in SPC, from SPC = 1.834 to SPC = 1.905. That the increase is small is likely because the additional words are, for the most part, larger and more obscure than the core 9,022 words. Ambiguity tends to arise with shorter words. Even though the Brown corpus has fewer words than BNC-2, there is evidently more ambiguity. For the three-letter-key design, the degradation compared to BNC-1 is 10.5%, from SPC = 1.834 to SPC = 2.027. The explanation here is simple. The letter-key assignment used in the calculation (see Figure 9) was based on optimization for the BNC-1 dictionary. If one wished to design a scanning ambiguous keyboard using a dictionary of words from the Brown corpus, or any other corpus or source, then the modeling process should use that dictionary.

Overall, the results above are promising. The optimal design for three letter keys (SPC < 2) suggests that English text can be entered in less than two scan steps per character on average. Of course, with SPS > 0.5, the cognitive or motor demand may pose a barrier to attaining the maximum possible text entry throughput. "Maximum possible" is the correct term here. Assuming users take all opportunities to optimize, they will indeed attain the maximum text entry rate. Of course, the actual rate depends on the scanning interval, which must be tailored to the abilities of the user. Just as an example, if the scanning interval was, say, 700 ms, the maximum text entry throughput (T) for the design in Figure 9 with three letter keys is given by Equation 1 as

| (6) |

This is an average rate for English, assuming the use of the BNC-1 dictionary. The rate for individual words or phrases may differ depending on their linguistic structure.

The text entry rate just cited (9.35 wpm) is quite good for one-key input with a scanning keyboard. Miró and Bernabeu (2008) cite a predicted text entry rate of 10.1 wpm with a one-key scanning keyboard using two-tier selection. However, their rate was computed using a scanning interval of 500 ms. Using a scanning interval of 500 ms in Equation 6 yields a predicted text entry rate of 13.1 wpm. Predictions are one thing; tests with users are quite another. Rates reported in the literature that were measured with users are lower (although they are difficult to assess and compare due to variation in the methodologies). Baljko and Tam (2006) researched text entry with a scanning keyboard with letter positions optimized using a Huffman coding tree. They reported entry rates ranging from 1.4 wpm to 3.1 wpm in a study with twelve able-bodied participants. The faster rate was achieved with a scanning interval of 750 ms. In Simpson and Horstmann's (1999) study with eight able-bodied participants, entry rates ranged from 3.0 wpm to 4.6 wpm using a scanning keyboard with an adaptive scanning interval. The faster rate was achieved with a scanning interval just under 600 ms.

It remains to be seen whether users can actually achieve the respectable entry rates conjectured for a scanning ambiguous keyboard of the type described here. In the next section, a "test of concept" evaluation is presented. For this, the three-letter-key design yielding the lowest SPC (see Figure 9) was chosen for evaluation.

3.1 Participants

Twelve able-bodied participants were recruited from the local university campus. The mean age was 25.3 years (SD = 3.2). Five were female, seven male. All participants were regular users of computers, reporting an average daily usage of 7.6 hours. Testing took approximately one hour, for which participants were paid ten dollars.

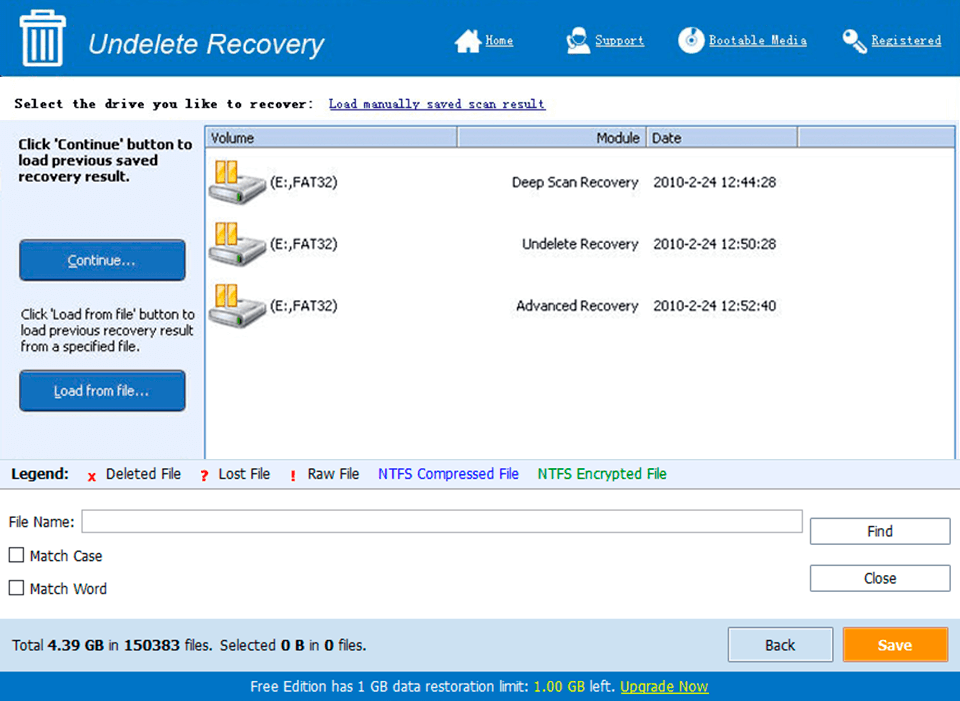

3.2 Apparatus

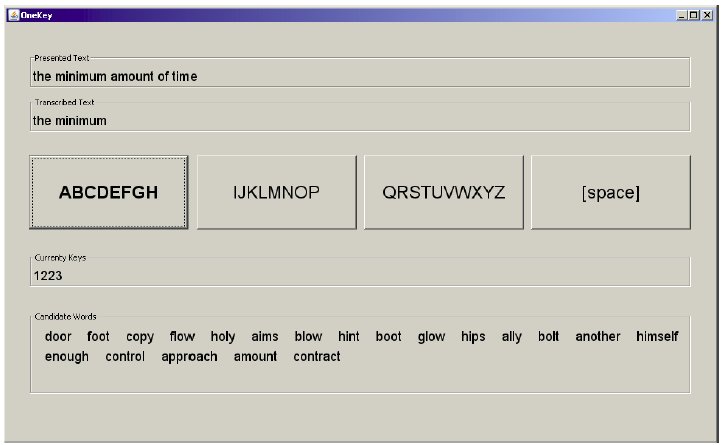

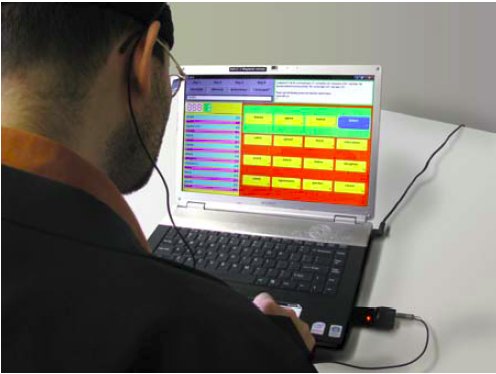

A prototype scanning ambiguous keyboard (SAK) was created in Java. The application included a letter-selection region and a word-selection region, as described earlier. For experimental testing, regions were also included to present text phrases for input and to show the transcribed text and keycodes during entry. Input was performed using any key on the system's keyboard.Several parameters configured the application upon launching, including the scanning interval, the letter-key assignment, a dictionary in the form of a word-frequency list, and the name of a file containing test phrases. A screen snap of the application, called OneKey, is shown in Figure 12.

Figure 12. Screen snap of the OneKey application. See text for discussion.

In the screen snap, focus is on the first letter key. Focus advances in the expected manner, according to the scanning interval. As selections are made, focus advances to the next key, rather than reverting to the first key (as noted earlier). In the image, the user has entered the first two words in the phrase "the minimum amount of time". The first four keys (1223) of the next word ("amount") have been entered. The candidate list shows words exactly matching the key sequence followed by extended matching words. The desired word is at position 18 in the list. The correct strategy here is to continue selecting letter keys to further spell out the word. When the user finishes selecting letter keys, a selection on space ("[space]") transfers focus to the word selection region. Words are highlighted in sequence by displaying them in blue with a focus border. When the desired word is highlighted, it is selected and added to the transcribed text.

Timing begins on the first key press for a phrase and ends when the last word in a phrase is selected. At the end of a phrase, a popup window shows summary statistics such as entry speed (wpm), error rate (%), and scanning efficiency (%). Pressing a key closes the popup window and brings up the next phrase for input.

3.2.1 Phrase Set

The outcome of text entry experiments may be affected by the text users enter. Depending on the entry method, text may be chosen specifically to elicit a favorable outcome. In the present experiment, for example, phrases could be concocted from words with low SPC values, thus creating an artificially inflated text entry throughput. This was not done. Instead, a generic set of 500 phrases was used (MacKenzie & Soukoreff, 2003). Some examples are given in Figure 13. For each trial, a phrase was selected at random and presented to the user for input.

The phrase set was designed to be representative of English. Phrase lengths ranged from 16 to 43 characters (mean = 28.6). In analyzing the phrases, it was determined that about 10% of the 1164 unique words were not in the dictionary. These words were added to the dictionary (with frequency = 1); thus, insuring that all phrases could be entered using the SAK under test.

To gain a sense of the linguistic structure of the phrases, a small utility program was written to compute SPC for every phrase. For the phrase set overall, SPC = 1.980, with a best case of SPC = 1.226 ("great disturbance in the force") and a worst case of SPC = 3.833 ("my bike has a flat tire"). Given that SPC = 1.834 for the SAK used in the experiment, the test phrases were, on average, slightly more difficult than English as represented in the embedded dictionary.

3.2.2 Error Correction

Error correction was implemented using a "long press" – pressing and holding the input key for two or more scan step intervals. While the input key was held, scanning was suspended. Scanning resumed when the key was released. If a long press occurred during entry of a word, the effect was to clear the current key sequence. If a long press occurred between words, the effect was to delete the last word in the transcribed text region.

3.3 Procedure

After signing an informed consent form, participants were told the general idea of text entry using scanning keyboards, ambiguous keyboards, and a scanning ambiguous keyboard. The text entry method was demonstrated using an initial scanning interval of 1100 ms. The operation of the letter-selection and word-selection regions was explained. Participants were allowed to enter a few practice phrases and ask questions while further instructions were given on the different ways to select letters and words (as per the OLPK, MLPK, DLPK, and OW methods discussed earlier) and the way to correct errors. They were told to position their hand comfortably in front of the keyboard and to make selections with any key. Selection using the index finger on the home row (F-key using the right hand) was recommended.

Participants were asked to study each phrase carefully – including the spelling of each word – before beginning entry of a phrase. They were reminded that timing did not begin until the first key press. Entry was to proceed as quickly and accurately as possible. They were encouraged to correct errors, if noticed, but were also told that perfect entry of each phrase was not a requirement.

At the end of the experiment, participants completed a brief questionnaire.

3.4 Design

The experiment included "block" as a within-subjects factor with five levels (1, 2, 3, 4, 5). Each block of input consisted of five phrases of text entry. The total amount of input was 12 participants × 5 blocks × 5 phrases/block = 300 phrases.

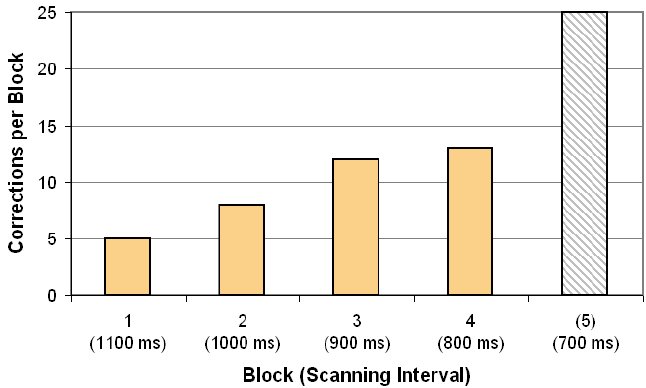

The scanning interval was set to 1100 ms for the first block of testing. It was decreased by 100 ms per block, with a final setting of 700 ms in block 5.

The dependent variables were text entry speed (wpm), scanning efficiency (%; Equation 3), error rate (%), and word corrections (the number of "long presses" per phrase).

4 RESULTS AND DISCUSSION

4.1 Text Entry Speed and Efficiency

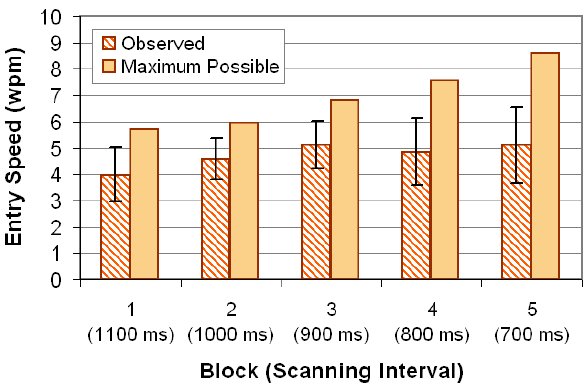

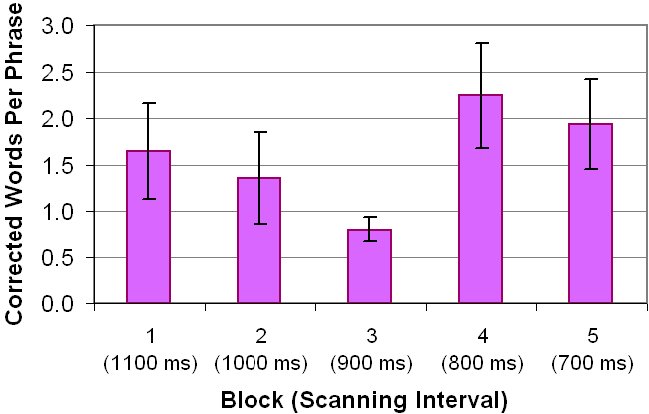

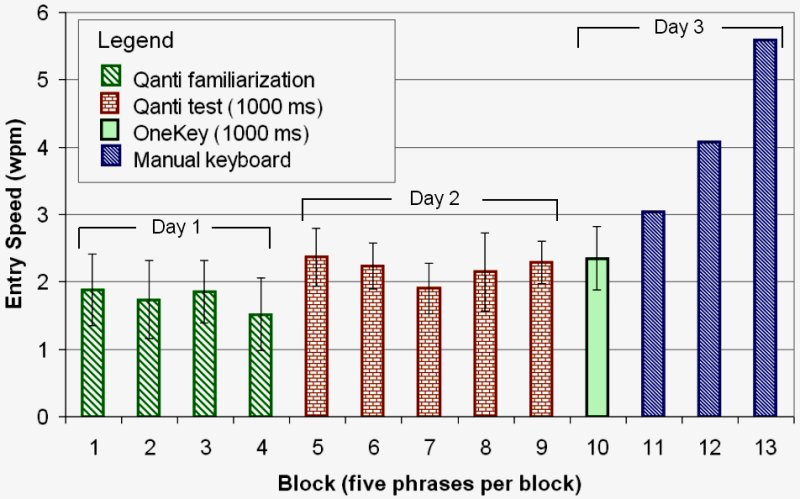

The grand mean for text entry speed was 4.73 wpm. There was a 28.3% improvement over the five blocks of input with observed rates of 3.98 wpm for the 1st block and 5.11 wpm for the 5th block. The trend is shown in Figure 14.

Figure 14. Observed entry speed (wpm) by block and scanning interval. For each block, the maximum possible entry speed and the scanning interval are also shown. Error bars show ±1 SD.

While the trend was statistically significant (F4,44 = 7.58, p < .0001), this must be considered in light of the confounding influence of scanning interval, which inherently increases the text entry rate, all else being equal. So, an increase in text entry speed was fully expected. In fact, the experiment, as a test of concept, was designed to ease participants into the operation of the SAK, and to gradually elicit an increase in text entry throughput by gradually decreasing the scanning interval.

Figure 14 also shows the maximum possible entry speed for each block. The value for each block was computed according to Equation 1 using the scanning interval and the average SPC for the 60 phrases randomly selected for that block (12 participants × 5 phrases/block). Each SPC used in the calculation was computed using the optimized word (OW) entry method. As noted earlier, this value is the minimum scan steps for which a phrase can be entered, assuming users take all opportunities to optimize. For the 5th block, the maximum possible speed was 8.60 wpm.

The pattern in Figure 14 suggests participants were not able to improve their text entry throughput after the 3rd block. This is particularly true if comparing the observed speed to the maximum possible speed. Admittedly, decreasing the scanning interval from block to block was a bit of a gamble: Would participants' emerging expertise, combined with a decreasing scanning interval, yield an increase in their text entry speed, proportional to the maximum possible rate? Clearly, the answer was "no". There are a number of possible reasons for this. One is simply that the experiment afforded too little time for participants to learn and develop entry strategies before reducing the scanning interval. Each block involved only about 8-10 minutes of text entry. Another possible reason is that the cognitive or motor demand was simply too high, when considering the need to make frequent selections with the three letter-key design under test.

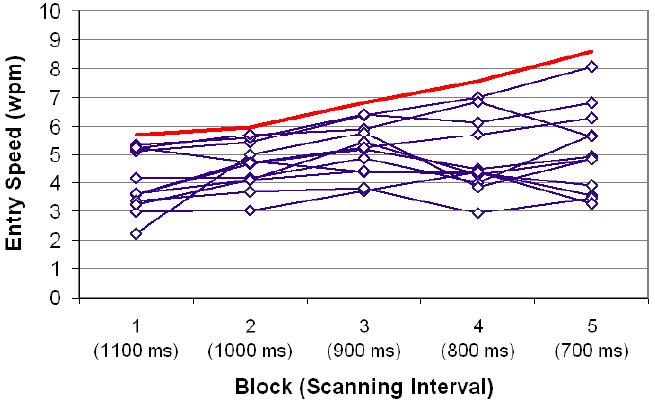

However, further insight lies in the large standard deviation bars for the 4th and 5th blocks in Figure 14. These suggest substantial individual differences in the responses across participants. Figure 15 shows this by plotting the trend for each participant over the five blocks. Evidently, some participants continued to improve in the 4th and 5th blocks. Three participants achieved mean text entry speeds above 6 wpm in the 5th block, with one achieving a mean of 8.05 wpm – very close to the maximum possible speed.

Figure 15. Per-participant text entry speed by block and scanning interval. The line at the top shows the maximum possible text entry speed.

While the inability of participants – overall or individually – to attain the maximum possible entry rate is worthy of analysis and speculation, the mean entry speed of 5.11 wpm in the 5th block is still quite good for one-key text entry. Of the studies noted earlier, the closest is Simpson and Koester's (1999) system with an adaptive scanning interval. Their maximum reported entry speed was 22.4 characters per minute, or 4.6 wpm. However, they excluded trials where participants missed selection opportunities. Furthermore, their methodology excluded error correction. By contrast, the figure reported here of 5.11 wpm includes all trials in the 5th block (12 participants × 5 phrases each). The measurements include the time lost through missed selections as well as the time in correcting errors. So, overall, the results reported here for a scanning ambiguous keyboard are very promising.

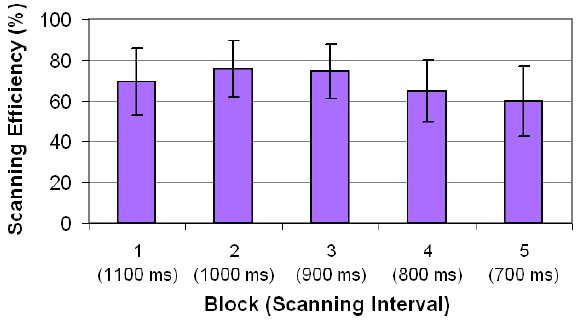

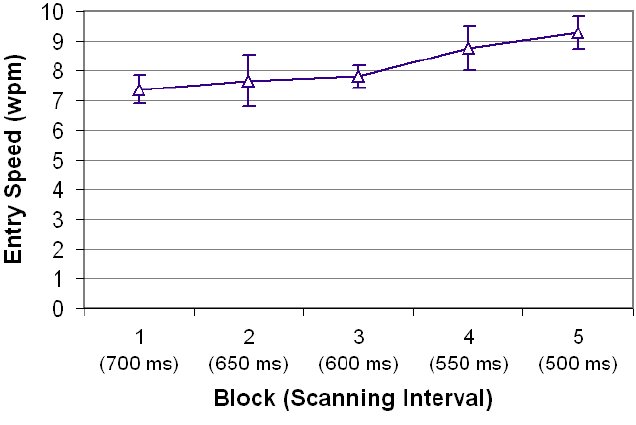

Participants with lower text entry speeds made more selection errors and had more difficulty seizing opportunities to optimize their entry, particularly with shorter scanning intervals. Figure 16 shows the overall trend of participants in terms of scanning efficiency – the ratio of the minimum number of scan steps to the observed number of scan steps (Equation 3).

Figure 16. Scanning efficiency by block and scanning interval. Scanning efficiency is the ratio of the minimum number of scan steps to the observed number of scan steps. The error bars show ±1 SD.