Borland Interbase 7.1 serial key or number

The Qt SQL module uses driver plugins to communicate with the different database APIs. Since Qt's SQL Module API is database-independent, all database-specific code is contained within these drivers. Several drivers are supplied with Qt, and other drivers can be added. The driver source code is supplied and can be used as a model for writing your own drivers.

Supported Databases

The table below lists the drivers included with Qt:

| Driver name | DBMS |
|---|---|
| QDB2 | IBM DB2 (version 7.1 and above) |
| QIBASE | Borland InterBase |
| QMYSQL / MARIADB | MySQL or MariaDB (version 5.0 and above) |
| QOCI | Oracle Call Interface Driver |
| QODBC | Open Database Connectivity (ODBC) - Microsoft SQL Server and other ODBC-compliant databases |
| QPSQL | PostgreSQL (versions 7.3 and above) |
| QSQLITE2 | SQLite version 2 (obsolete since Qt 5.14) |
| QSQLITE | SQLite version 3 |
| QTDS | Sybase Adaptive Server (obsolete since Qt 4.7) |

SQLite is the in-process database system with the best test coverage and support on all platforms. Oracle via OCI, PostgreSQL, and MySQL through either ODBC or a native driver are well-tested on Windows and Linux. The completeness of the support for other systems depends on the availability and quality of client libraries.

Note: To build a driver plugin you need to have the appropriate client library for your Database Management System (DBMS). This provides access to the API exposed by the DBMS, and is typically shipped with it. Most installation programs also allow you to install "development libraries", and these are what you need. These libraries are responsible for the low-level communication with the DBMS. Also make sure to install the correct database libraries for your Qt architecture (32 or 64 bit).

Note: When using Qt under Open Source terms but with a proprietary database, verify the client library's license compatibility with the LGPL.

Building the Drivers

The Qt configure script tries to automatically detect the available client libraries on your machine. Its help output lists the drivers that can be built.

The script cannot detect the necessary libraries and include files if they are not in the standard paths, so it may be necessary to specify these paths using the appropriate configure command-line options. For example, if your MySQL files are installed in a non-standard location, pass that location to configure as a parameter. The particulars for each driver are explained below.

Note: If something goes wrong and you want qmake to recheck your available drivers, you must remove config.cache in <QTDIR>/qtbase/src/plugins/sqldrivers - otherwise qmake will not search for the available drivers again. If you encounter an error during the qmake stage, open config.log to see what went wrong.

A typical qmake run (in this case to configure for MySQL) looks like this:

Due to the practicalities of dealing with external dependencies, only the SQLite3 plugin is shipped with binary builds of Qt. To add drivers to a Qt installation without rebuilding all of Qt, you can configure and build the qtbase/src/plugins/sqldrivers directory outside of a full Qt build directory. The drivers cannot be configured individually, only all at once, but they can be built separately. Depending on how the Qt build was configured, the plugins may also need to be installed after they are built. For example:

Driver Specifics

QMYSQL for MySQL or MariaDB 5 and higher

MariaDB is a fork of MySQL intended to remain free and open-source software under the GNU General Public License. MariaDB is intended to maintain high compatibility with MySQL, ensuring drop-in replacement capability with library binary parity and exact matching with MySQL APIs and commands. Therefore, the MySQL and MariaDB plugins are combined into one Qt plugin.

QMYSQL Stored Procedure Support

MySQL 5 has stored procedure support at the SQL level, but no API to control IN, OUT, and INOUT parameters. Therefore, parameters have to be set and read using SQL commands instead of QSqlQuery::bindValue().

Example stored procedure:

Source code to access the OUT values:

Note: The user variables that hold the OUT values are local to the current connection and will not be affected by queries sent from another host or connection.
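Because the OUT values travel through MySQL user variables, the calling side comes down to two plain SQL statements. The first is a CALL that names one session variable per OUT parameter. The second is a SELECT that reads those variables back on the same connection. The standard C++ sketch below only builds those two strings. The helper name `buildOutParamStatements` and the `@out1`, `@out2`, ... variable names are hypothetical choices for illustration and are not part of Qt's API. Each string would then be run in order with QSqlQuery::exec() on a single connection.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>

// Hypothetical helper (not a Qt API): builds the two statements needed to call
// a MySQL stored procedure whose OUT parameters are collected in
// connection-local user variables named @out1, @out2, ...
std::pair<std::string, std::string>
buildOutParamStatements(const std::string &procedure, std::size_t outCount)
{
    assert(outCount > 0 && "procedure must have at least one OUT parameter");

    std::string vars;
    for (std::size_t i = 1; i <= outCount; ++i) {
        if (i > 1)
            vars += ", ";
        vars += "@out" + std::to_string(i);
    }

    // First statement: the procedure writes its OUT values into the variables.
    std::string call = "CALL " + procedure + "(" + vars + ")";
    // Second statement: read the variables back as an ordinary result row.
    std::string select = "SELECT " + vars;
    return {call, select};
}
```

The procedure name is inserted verbatim, so it should come from trusted code and never from user input. Both statements must run on the same connection because the variables exist only there.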

Embedded MySQL Server

The MySQL embedded server is a drop-in replacement for the normal client library. With the embedded MySQL server, a MySQL server is not required to use MySQL functionality.

To use the embedded MySQL server, link the Qt plugin against libmysqld, the embedded server library, instead of the regular client library. This can be done by adding the corresponding option to the configure command line.

Please refer to the MySQL documentation, chapter "libmysqld, the Embedded MySQL Server Library" for more information about the MySQL embedded server.

How to Build the QMYSQL Plugin on Unix and macOS

You need the MySQL / MariaDB header files, as well as the corresponding shared client library. Depending on your Linux distribution, you may need to install a package which is usually called "mysql-devel" or "mariadb-devel".

Tell qmake where to find the MySQL / MariaDB header files and shared libraries (adjust the paths to your installation), and run make:

How to Build the QMYSQL Plugin on Windows

You need to get the MySQL installation files (e.g. mysql-installer-web-community-8.0.18.0.msi) or mariadb-connector-c-3.1.5-win64.msi. Run the installer, select custom installation and install the MySQL C Connector which matches your Qt installation (x86 or x64). After installation check that the needed files are there:

and for MariaDB

Note: As of MySQL 8.0.19, the C Connector is no longer offered as a standalone installable component. Instead, you can get the required header and library files by installing the full MySQL Server (x64 only) or the MariaDB C Connector.

Build the plugin as follows (adjust the path to your MySQL / MariaDB installation):

If you are not using a Microsoft compiler, replace the make command above with the one for your toolchain.

When you distribute your application, remember to include libmysql.dll / libmariadb.dll in your installation package. It must be placed in the same folder as the application executable. libmysql.dll additionally needs the MSVC runtime libraries, which can be installed with vcredist.exe.

QOCI for the Oracle Call Interface (OCI)

The Qt OCI plugin supports Oracle 9i, 10g and higher. After connecting to the Oracle server, the plugin will auto-detect the database version and enable features accordingly.

It's possible to connect to an Oracle database without a tnsnames.ora file. This requires that the database SID is passed to the driver as the database name and that a hostname is given.

OCI User Authentication

The Qt OCI plugin supports authentication using external credentials (OCI_CRED_EXT). Usually, this means that the database server will use the user authentication provided by the operating system instead of its own authentication mechanism.

Leave the username and password empty when opening a connection with QSqlDatabase to use the external credentials authentication.

OCI BLOB/LOB Support

Binary Large Objects (BLOBs) can be read and written, but be aware that this process may require a lot of memory. You should use a forward-only query to select LOB fields (see QSqlQuery::setForwardOnly()).

Inserting BLOBs should be done using either a prepared query where the BLOBs are bound to placeholders or QSqlTableModel, which uses a prepared query to do this internally.

How to Build the OCI Plugin on Unix and macOS

For Oracle 10g, all you need is the "Instant Client Package - Basic" and "Instant Client Package - SDK". For Oracle versions prior to 10g, you need the standard Oracle client and the SDK packages.

Oracle library files required to build the driver:

- (all versions)

- (only Oracle 9)

Tell qmake where to find the Oracle header files and shared libraries, and run make:

For Oracle version 9:

For Oracle version 10, we assume that you installed the RPM packages of the Instant Client Package SDK (you need to adjust the version number accordingly):

Note: If you are using the Oracle Instant Client package, you will need to set LD_LIBRARY_PATH when building the OCI SQL plugin, and when running an application that uses the OCI SQL plugin. You can avoid this requirement by setting RPATH, and listing all of the libraries to link to. Here is an example:

If you wish to build the OCI plugin manually with this method, the procedure looks like this:

How to Build the OCI Plugin on Windows

Choosing the option "Programmer" in the Oracle Client Installer from the Oracle Client Installation CD is generally sufficient to build the plugin. For some versions of Oracle Client, you may also need to select the "Call Interface (OCI)" option if it is available.

Build the plugin as follows (adjust the path to your Oracle Client installation):

If you are not using a Microsoft compiler, replace the make command in the line above with the one for your toolchain.

When you run your application, you will also need to add the path to your environment variable:

QODBC for Open Database Connectivity (ODBC)

ODBC is a general interface that allows you to connect to multiple DBMSs using a common interface. The QODBC driver allows you to connect to an ODBC driver manager and access the available data sources. Note that you also need to install and configure ODBC drivers for the ODBC driver manager that is installed on your system. The QODBC plugin then allows you to use these data sources in your Qt applications.

Note: You should use the native driver, if it is available, instead of the ODBC driver. ODBC support can be used as a fallback for compliant databases if no native driver is available.

On Windows, an ODBC driver manager should be installed by default. For Unix systems, there are some implementations which must be installed first. Note that every end user of your application is required to have an ODBC driver manager installed, otherwise the QODBC plugin will not work.

When connecting to an ODBC datasource, you should pass the name of the ODBC datasource to the QSqlDatabase::setDatabaseName() function, rather than the actual database name.

The QODBC plugin needs an ODBC-compliant driver manager, version 2.0 or later. Some ODBC drivers claim to be version-2.0-compliant but do not offer all the necessary functionality. The QODBC plugin therefore checks whether the data source can be used after a connection has been established, and refuses to work if the check fails. If you do not like this behavior, you can remove the corresponding line from the plugin's source file. Do this at your own risk!

By default, Qt instructs the ODBC driver to behave as an ODBC 2.x driver. However, for some driver-manager/ODBC 3.x-driver combinations (e.g., unixODBC/MaxDB ODBC), telling the ODBC driver to behave as a 2.x driver can cause the driver plugin to have unexpected behavior. To avoid this problem, instruct the ODBC driver to behave as a 3.x driver by setting the connect option before you open your database connection. Note that this will affect multiple aspects of ODBC driver behavior, e.g., the SQLSTATEs. Before setting this connect option, consult your ODBC documentation about behavior differences you can expect.

When using the SAP HANA database, the connection has to be established using the option "SCROLLABLERESULT=TRUE", as the HANA ODBC driver does not provide scrollable results by default, e.g.:

If you experience very slow access of the ODBC datasource, make sure that ODBC call tracing is turned off in the ODBC datasource manager.

Some drivers do not support scrollable cursors. In that case, only queries in forwardOnly mode can be used successfully.

ODBC Stored Procedure Support

With Microsoft SQL Server, the result set returned by a stored procedure that uses the return statement, or that returns multiple result sets, is accessible only if you set the query to forward-only mode using QSqlQuery::setForwardOnly().

Note: The value returned by the stored procedure's return statement is discarded.

ODBC Unicode Support

The QODBC Plugin will use the Unicode API if UNICODE is defined. On Windows NT based systems, this is the default. Note that the ODBC driver and the DBMS must also support Unicode.

For the Oracle 9 ODBC driver (Windows), it is necessary to check "SQL_WCHAR support" in the ODBC driver manager; otherwise, Oracle will convert all Unicode strings to local 8-bit.

How to Build the ODBC Plugin on Unix and macOS

It is recommended that you use unixODBC. You can find the latest version and ODBC drivers at http://www.unixodbc.org. You need the unixODBC header files and shared libraries.

Tell qmake where to find the unixODBC header files and shared libraries (adjust the paths to your unixODBC installation), and run make:

How to Build the ODBC Plugin on Windows

The ODBC header and include files should already be installed in the right directories. You just have to build the plugin as follows:

If you are not using a Microsoft compiler, replace the make command in the line above with the one for your toolchain.

QPSQL for PostgreSQL (Version 7.3 and Above)

The QPSQL driver supports version 7.3 and higher of the PostgreSQL server.

For more information about PostgreSQL visit http://www.postgresql.org.

QPSQL Unicode Support

The QPSQL driver automatically detects whether the PostgreSQL database you are connecting to supports Unicode or not. Unicode is automatically used if the server supports it. Note that the driver only supports the UTF-8 encoding. If your database uses any other encoding, the server must be compiled with Unicode conversion support.

Unicode support was introduced in PostgreSQL version 7.1 and it will only work if both the server and the client library have been compiled with multibyte support. More information about how to set up a multibyte enabled PostgreSQL server can be found in the PostgreSQL Administrator Guide, Chapter 5.

QPSQL Case Sensitivity

PostgreSQL databases only respect case sensitivity if the table or field name is quoted when the table is created. Quoting the name in the CREATE TABLE statement ensures that it can be accessed with the same case that was used.

If the table or field name is not quoted when created, the actual table or field name will be lower-case. When QSqlDatabase::record() or QSqlDatabase::primaryIndex() access a table or field that was unquoted when created, the name passed to the function must be lower-case to ensure that it is found.
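This rule can be written as a small function. Given a name exactly as it was written in the CREATE TABLE statement, the function returns the name PostgreSQL actually stores, which is the spelling to pass to QSqlDatabase::record() or QSqlDatabase::primaryIndex(). The standard C++ sketch below covers only the folding rule. The function name `effectiveIdentifier` is hypothetical, and as a simplification only ASCII letters are folded.

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>
#include <string>

// Sketch of PostgreSQL's identifier rule: a double-quoted name keeps its exact
// case (with "" standing for one embedded quote), while an unquoted name is
// folded to lower case. Only ASCII letters are folded here.
std::string effectiveIdentifier(const std::string &name)
{
    assert(!name.empty() && "identifier must not be empty");

    if (name.size() >= 2 && name.front() == '"' && name.back() == '"') {
        std::string result;
        for (std::size_t i = 1; i + 1 < name.size(); ++i) {
            result += name[i];
            // Collapse an escaped "" inside the quotes to a single ".
            if (name[i] == '"' && i + 2 < name.size() && name[i + 1] == '"')
                ++i;
        }
        return result;
    }

    std::string result = name;
    for (char &c : result)
        c = static_cast<char>(std::tolower(static_cast<unsigned char>(c)));
    return result;
}
```

For example, a table created with the quoted name "MyTable" has to be looked up as `MyTable`, while one created with the unquoted name MyTable has to be looked up as `mytable`.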

QPSQL Forward-only query support

To use forward-only queries, you must build the QPSQL plugin with PostgreSQL client library version 9.2 or later. If the plugin is built with an older version, forward-only mode will not be available: calling QSqlQuery::setForwardOnly() to enable it will have no effect.

Warning: If you build the QPSQL plugin with PostgreSQL version 9.2 or later, then you must distribute your application with libpq version 9.2 or later. Otherwise, loading the QPSQL plugin will fail with the following message:

While navigating the results in forward-only mode, the handle of QSqlResult may change. Applications that use the low-level handle of SQL result must get a new handle after each call to any of QSqlResult fetch functions. Example:

While reading the results of a forward-only query with PostgreSQL, the database connection cannot be used to execute other queries. This is a limitation of the libpq library. Example:

This problem will not occur if query1 and query2 use different database connections, or if we execute query2 after the while loop.

Note: Some methods of QSqlDatabase, such as tables() and primaryIndex(), implicitly execute SQL queries, so these also cannot be used while navigating the results of a forward-only query.

Note: QPSQL will print the following warning if it detects a loss of query results:

How to Build the QPSQL Plugin on Unix and macOS

You need the PostgreSQL client library and headers installed.

To make qmake find the PostgreSQL header files and shared libraries, run it as follows (adjust the paths to your PostgreSQL client installation):

How to Build the QPSQL Plugin on Windows

Install the appropriate PostgreSQL developer libraries for your compiler. Then build the plugin as follows, adjusting the paths to your PostgreSQL installation:

Users of MinGW may wish to consult the following online document: PostgreSQL MinGW/Native Windows.

When you distribute your application, remember to include libpq.dll in your installation package. It must be placed in the same folder as the application executable.

QTDS for Sybase Adaptive Server

Note: TDS is no longer used by Microsoft SQL Server and has been superseded by ODBC. QTDS has been obsolete since Qt 4.7.

It is not possible to set the port with QSqlDatabase::setPort() due to limitations in the Sybase client library. Refer to the Sybase documentation for information on how to set up a Sybase client configuration file to enable connections to databases on non-default ports.

How to Build the QTDS Plugin on Unix and macOS

Under Unix, two libraries are available which support the TDS protocol:

Regardless of which library you use, the corresponding shared object file is needed. Set the appropriate environment variable to point to the directory where you installed the client library, and then build the plugin:

How to Build the QTDS Plugin on Windows

You can either use the DB-Library supplied by Microsoft or the Sybase Open Client (https://support.sap.com). Configure will try to find NTWDBLIB.LIB to build the plugin:

By default, the Microsoft library is used on Windows. If you want to force the use of the Sybase Open Client, you must define the corresponding macro in the plugin's build configuration.

If you are not using a Microsoft compiler, replace the make command in the line above with the one for your toolchain.

QDB2 for IBM DB2 (Version 7.1 and Above)

The Qt DB2 plugin makes it possible to access IBM DB2 databases. It has been tested with IBM DB2 v7.1 and 7.2. You must install the IBM DB2 development client library, which contains the header and library files necessary for compiling the QDB2 plugin.

The QDB2 driver supports prepared queries, reading/writing of Unicode strings and reading/writing of BLOBs.

We suggest using a forward-only query when calling stored procedures in DB2 (see QSqlQuery::setForwardOnly()).

How to Build the QDB2 Plugin on Unix and macOS

How to Build the QDB2 Plugin on Windows

The DB2 header and include files should already be installed in the right directories. You just have to build the plugin as follows:

If you are not using a Microsoft compiler, replace the make command in the line above with the one for your toolchain.

QSQLITE2 for SQLite Version 2

The Qt SQLite 2 plugin is offered for compatibility. Whenever possible, use the version 3 plugin instead. The build instructions for version 3 apply to version 2 as well.

QSQLITE for SQLite (Version 3 and Above)

The Qt SQLite plugin makes it possible to access SQLite databases. SQLite is an in-process database, which means that it is not necessary to have a database server. SQLite operates on a single file, which must be set as the database name when opening a connection. If the file does not exist, SQLite will try to create it. SQLite also supports in-memory and temporary databases: pass ":memory:" or an empty string, respectively, as the database name.

SQLite has some restrictions regarding multiple users and multiple transactions. If you try to read or write a resource from different transactions, your application might freeze until one transaction commits or rolls back. The Qt SQLite driver keeps retrying a write to a locked resource until it runs into a timeout (see the timeout connect option described in QSqlDatabase::setConnectOptions()).
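The retry behavior can be pictured as a loop that keeps attempting the write while the resource is locked and gives up once a deadline has passed. The standard C++ sketch below shows that general pattern. It is not Qt's or SQLite's actual implementation. The names `retryWhileLocked` and `tryWrite`, and the fixed 1 ms back-off, are assumptions made for illustration.

```cpp
#include <cassert>
#include <chrono>
#include <functional>
#include <thread>

// Generic sketch (hypothetical names): calls tryWrite() until it succeeds or
// the timeout expires. tryWrite() returns true on success and false while the
// resource is still locked by another transaction.
bool retryWhileLocked(const std::function<bool()> &tryWrite,
                      std::chrono::milliseconds timeout)
{
    assert(tryWrite && "an operation is required");
    const auto deadline = std::chrono::steady_clock::now() + timeout;
    while (true) {
        if (tryWrite())
            return true;  // the lock was released in time
        if (std::chrono::steady_clock::now() >= deadline)
            return false; // give up: timeout reached
        std::this_thread::sleep_for(std::chrono::milliseconds(1)); // back off
    }
}
```

A longer timeout makes a blocked write wait longer before failing. A shorter one returns control to the application sooner, at the cost of more failed writes under contention.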

In SQLite, any column, with the exception of an INTEGER PRIMARY KEY column, may be used to store any type of value. For instance, a column declared as INTEGER may contain an integer value in one row and a text value in the next. This is because SQLite associates the type of a value with the value itself rather than with the column it is stored in. Consequently, the type returned by QSqlField::type() only indicates the field's recommended type. Make no assumption about the actual type from it; check the type of each individual value instead.
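In practice, code should inspect each fetched value rather than trust the column's declared type. The sketch below models a SQLite value as a `std::variant` over SQLite's five storage classes (NULL, INTEGER, REAL, TEXT, BLOB) and reports the actual class of each value. The `SqliteValue` type and the `storageClass` function are hypothetical stand-ins. With Qt, the analogous check would be made on each value returned by QSqlQuery::value().

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <variant>
#include <vector>

// Hypothetical model of SQLite's storage classes: NULL, INTEGER, REAL, TEXT, BLOB.
using Blob = std::vector<unsigned char>;
using SqliteValue =
    std::variant<std::monostate, std::int64_t, double, std::string, Blob>;

// Returns the storage class of one value, independent of the column's declared type.
std::string storageClass(const SqliteValue &v)
{
    assert(!v.valueless_by_exception());
    switch (v.index()) {
    case 0:  return "NULL";
    case 1:  return "INTEGER";
    case 2:  return "REAL";
    case 3:  return "TEXT";
    default: return "BLOB";
    }
}
```

With this model, two rows of a column declared INTEGER can legitimately yield one value classified as "INTEGER" and another classified as "TEXT". The per-value check is what keeps the code correct in that case.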

The driver is locked for updates while a select is executed. This may cause problems when using QSqlTableModel because Qt's item views fetch data as needed (with QSqlQuery::fetchMore() in the case of QSqlTableModel).

You can find information about SQLite on http://www.sqlite.org.

How to Build the QSQLITE Plugin

SQLite version 3 is included as a third-party library within Qt. It can be built by passing the corresponding parameter to the configure script.

If you do not want to use the SQLite library included with Qt, you can pass the corresponding option to the configure script to use the operating system's SQLite libraries instead. This is recommended whenever possible, as it reduces the installation size and removes one component for which you need to track security advisories.

On Unix and macOS (adjust the path to the directory where SQLite resides):

On Windows:

Enable REGEXP operator

SQLite comes with a REGEXP operator, but its implementation must be provided by the user. For convenience, a default implementation can be enabled by setting the corresponding connect option before the database connection is opened. A SQL expression such as "column REGEXP 'pattern'" is then evaluated in Qt code as a regular-expression match of the column value against the pattern.

For better performance, the regular expressions are cached internally. The cache size defaults to 25 and can be changed through the option's value. For example, passing a value of 10 reduces the cache size to 10.
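A bounded cache of compiled patterns fits this description well. The sketch below keeps up to a fixed number of compiled `std::regex` objects, with a default capacity of 25 to mirror the default described above, and evicts the least recently used pattern when the cache is full. It is a generic least-recently-used cache built from the standard library, not Qt's internal implementation. `RegexCache` and its members are hypothetical names, and `std::regex_search` is used as a stand-in for "the pattern matches somewhere in the column value".

```cpp
#include <cassert>
#include <cstddef>
#include <list>
#include <regex>
#include <string>
#include <unordered_map>
#include <utility>

// Hypothetical LRU cache of compiled regular expressions.
class RegexCache {
public:
    explicit RegexCache(std::size_t capacity = 25) : capacity_(capacity)
    {
        assert(capacity_ > 0);
    }

    // Evaluates "value REGEXP pattern", compiling the pattern only on a cache miss.
    bool matches(const std::string &value, const std::string &pattern)
    {
        return std::regex_search(value, lookup(pattern));
    }

    std::size_t size() const { return index_.size(); }
    bool contains(const std::string &pattern) const { return index_.count(pattern) != 0; }

private:
    using Entry = std::pair<std::string, std::regex>;

    const std::regex &lookup(const std::string &pattern)
    {
        auto it = index_.find(pattern);
        if (it != index_.end()) {
            // Hit: move the entry to the front (most recently used).
            entries_.splice(entries_.begin(), entries_, it->second);
            return it->second->second;
        }
        if (entries_.size() == capacity_) {
            // Full: drop the least recently used entry at the back.
            index_.erase(entries_.back().first);
            entries_.pop_back();
        }
        entries_.emplace_front(pattern, std::regex(pattern));
        index_[pattern] = entries_.begin();
        return entries_.front().second;
    }

    std::size_t capacity_;
    std::list<Entry> entries_; // front = most recently used
    std::unordered_map<std::string, std::list<Entry>::iterator> index_;
};
```

The list plus hash map combination keeps both lookups and evictions constant-time. This is why a small cache like this is cheap enough to sit on every REGEXP evaluation.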

QSQLITE File Format Compatibility

SQLite minor releases sometimes break file format forward compatibility. For example, SQLite 3.3 can read database files created with SQLite 3.2, but databases created with SQLite 3.3 cannot be read by SQLite 3.2. Please refer to the SQLite documentation and change logs for information about file format compatibility between versions.

Qt minor releases usually follow the SQLite minor releases, while Qt patch releases follow SQLite patch releases. Patch releases are therefore both backward and forward compatible.

To force SQLite to use a specific file format, you must build and ship your own database plugin with your own SQLite library, as illustrated above. Some versions of SQLite can be forced to write a specific file format by setting the corresponding compile-time define when building SQLite.

QIBASE for Borland InterBase

The Qt InterBase plugin makes it possible to access the InterBase and Firebird databases. InterBase can either be used as a client/server or without a server in which case it operates on local files. The database file must exist before a connection can be established. Firebird must be used with a server configuration.

Note that InterBase requires you to specify the full path to the database file, no matter whether it is stored locally or on another server.

You need the InterBase/Firebird development headers and libraries to build this plugin.

Due to license incompatibilities with the GPL, users of the Qt Open Source Edition are not allowed to link this plugin to the commercial editions of InterBase. Please use Firebird or the free edition of InterBase.

QIBASE Unicode Support and Text Encoding

By default the driver connects to the database using UNICODE_FSS. This can be overridden by setting the ISC_DPB_LC_CTYPE parameter with QSqlDatabase::setConnectOptions() before opening the connection.

If Qt does not support the given text encoding the driver will issue a warning message and connect to the database using UNICODE_FSS.

Note that if the text encoding set when connecting to the database is not the same as in the database, problems with transliteration might arise.

QIBASE Stored procedures

InterBase/Firebird return OUT values as a result set, so when calling a stored procedure, only IN values need to be bound via QSqlQuery::bindValue(). The RETURN/OUT values can be retrieved via QSqlQuery::value(). Example:

How to Build the QIBASE Plugin on Unix and macOS

The following commands assume a particular InterBase or Firebird installation directory; adjust the paths to match yours:

If you are using InterBase:

If you are using Firebird, the Firebird library has to be set explicitly:

How to Build the QIBASE Plugin on Windows

The following commands assume a particular InterBase or Firebird installation directory; adjust the paths to match yours:

If you are using InterBase:

If you are using Firebird, the Firebird library has to be set explicitly:

If you are not using a Microsoft compiler, replace the make command in the line above with the one for your toolchain.

Note that must be in the .

Troubleshooting

You should always use client libraries that have been compiled with the same compiler you are using for your project. If you cannot get a source distribution to compile the client libraries yourself, you must make sure that the pre-compiled library is compatible with your compiler; otherwise, you will get a lot of "undefined symbols" errors. Some compilers have tools to convert libraries; for example, Borland ships a tool to convert libraries that have been generated with Microsoft Visual C++.

If the compilation of a plugin succeeds but it cannot be loaded, make sure that the following requirements are met:

- Ensure that the plugin is in the correct directory. You can use QApplication::libraryPaths() to determine where Qt looks for plugins.

- Ensure that the client libraries of the DBMS are available on the system. On Unix, pass the plugin file to a dependency-listing tool such as ldd, which reports any client libraries that could not be found. On Windows, you can use Visual Studio's dependency walker. With Qt Creator, you can update the relevant environment variable in the Run section of the Project panel to include the path to the folder containing the client libraries.

- Compile Qt with defined to get very verbose debug output when loading plugins.

Make sure you have followed the guide to Deploying Plugins.

How to Write Your Own Database Driver

QSqlDatabase is responsible for loading and managing database driver plugins. When a database is added (see QSqlDatabase::addDatabase()), the appropriate driver plugin is loaded (using QSqlDriverPlugin). QSqlDatabase relies on the driver plugin to provide interfaces for QSqlDriver and QSqlResult.

QSqlDriver is an abstract base class which defines the functionality of a SQL database driver. This includes functions such as QSqlDriver::open() and QSqlDriver::close(). QSqlDriver is responsible for connecting to a database, establishing the proper environment, etc. In addition, QSqlDriver can create QSqlQuery objects appropriate for the particular database API. QSqlDatabase forwards many of its function calls directly to QSqlDriver, which provides the concrete implementation.

QSqlResult is an abstract base class which defines the functionality of a SQL database query. This covers all kinds of SQL statements, such as queries, updates, and schema changes. QSqlResult contains functions such as QSqlResult::next() and QSqlResult::value(). QSqlResult is responsible for sending queries to the database, returning result data, etc. QSqlQuery forwards many of its function calls directly to QSqlResult, which provides the concrete implementation.

QSqlDriver and QSqlResult are closely connected. When implementing a Qt SQL driver, both of these classes must be subclassed, and the abstract virtual methods in each class must be implemented.
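This division of labor can be illustrated without Qt. In the standard C++ sketch below, an abstract `Driver` opens and closes a connection and creates `Result` objects. An abstract `Result` executes a statement and walks through its rows, roughly mirroring the roles that QSqlDriver and QSqlResult play. All class names are hypothetical, and the in-memory `MockDriver` stands in for a real database client library. The real Qt base classes declare more virtual functions than shown here, and a driver must implement all of them.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <vector>

// Hypothetical analogue of QSqlResult: one statement and its result rows.
class Result {
public:
    virtual ~Result() = default;
    virtual bool exec(const std::string &sql) = 0;   // send the statement
    virtual bool next() = 0;                         // advance to the next row
    virtual std::string value(int column) const = 0; // field of the current row
};

// Hypothetical analogue of QSqlDriver: owns the connection and creates results.
class Driver {
public:
    virtual ~Driver() = default;
    virtual bool open(const std::string &database) = 0;
    virtual void close() = 0;
    virtual bool isOpen() const = 0;
    virtual std::unique_ptr<Result> createResult() const = 0;
};

// In-memory stand-in for a real client library: every query returns fixed rows.
class MockResult : public Result {
public:
    bool exec(const std::string &sql) override
    {
        if (sql.empty())
            return false;
        rows_ = {{"1", "alice"}, {"2", "bob"}}; // canned rows instead of a real query
        row_ = -1;                              // positioned before the first row
        return true;
    }
    bool next() override { return ++row_ < static_cast<int>(rows_.size()); }
    std::string value(int column) const override
    {
        assert(row_ >= 0 && row_ < static_cast<int>(rows_.size()));
        assert(column >= 0 && column < static_cast<int>(rows_[row_].size()));
        return rows_[row_][column];
    }

private:
    std::vector<std::vector<std::string>> rows_;
    int row_ = -1;
};

class MockDriver : public Driver {
public:
    bool open(const std::string &database) override
    {
        open_ = !database.empty();
        return open_;
    }
    void close() override { open_ = false; }
    bool isOpen() const override { return open_; }
    std::unique_ptr<Result> createResult() const override
    {
        return std::make_unique<MockResult>();
    }

private:
    bool open_ = false;
};
```

Keeping connection state in the driver and cursor state in each result object lets several results share one connection, which is the same split that QSqlDatabase and QSqlQuery forward to.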

To implement a Qt SQL driver as a plugin (so that it is recognized and loaded by the Qt library at runtime), the driver must use the Q_PLUGIN_METADATA() macro. Read How to Create Qt Plugins for more information on this. You can also check out how this is done in the SQL plugins provided with Qt in <QTDIR>/qtbase/src/plugins/sqldrivers.

The following code can be used as a skeleton for a SQL driver:

Articles

InterBase and Firebird Recovery Guide

NOTICE: This document is a chapter from the book "The InterBase World", which was written by Alexey Kovyazin and Serg Vostrikov.

The chapter from the book "The InterBase World" devoted to database repair.

1. The history of this guide

The Russian book "The InterBase World" was published in September 2002 with a print run of 3,000 copies. It sold out within 3 months, and a second, improved edition was published in April 2003 with a print run of 5,000 copies.

It now tops the lists of the largest Russian online bookstores, and we expect it to sell out very soon.

The authors of the book are Alexey Kovyazin, developer of IBSurgeon and a well-known Russian InterBase specialist, and Serg Vostrikov, CEO of the Devrace company (www.devrace.com).

It’s a funny thing: not a single book devoted to InterBase has been published in English!

Thousands and thousands of developers use InterBase and Firebird and discuss them in various conferences (see Links).

The community of InterBase developers numbers in the tens of thousands. The strong demand for InterBase books in various countries shows that the InterBase community is really big.

We would bet a case of beer that an edition of 10,000 copies would be swept off Amazon.com within a month. But people in publishing companies "know everything", and they are sure that nobody will buy a book about InterBase. It's a real pity.

Here we'd like to offer you the draft of one chapter of this book, devoted to the recovery of InterBase/Firebird databases.

2. How to recover InterBase/Firebird database

2.1. Review of the main causes of database corruption

Unfortunately, there is always a probability that any information store will be corrupted and some of its information lost. Databases are no exception to this rule. In this chapter we consider the principal causes of InterBase database corruption, as well as some methods of repairing databases and extracting information from them. We also cover recommendations and precautions that minimize the probability of losing information from a database.

First of all, when speaking about database repair, we should clarify the notion of “database corruption”. A database is usually called damaged if errors appear when trying to extract or modify information, and/or if the extracted information turns out to be lost, incomplete, or simply wrong. In some cases database corruption is hidden and can be found only by testing with special tools. There are also overt corruptions: it is impossible to connect to the database, properly configured client programs show strange errors (although no manipulations were performed on the database), or the database cannot be restored from a backup copy.

2.2. Principal causes of database corruption are:

- Abnormal termination of the server computer, especially power failures. For the IT industry this is a real scourge, so we hope there is no need to remind you once again of the necessity of an uninterruptible power supply for the server.

- Defects and faults of the server computer, especially of the HDD (hard disk drive), disk controllers, main memory, and the cache memory of RAID controllers.

- An improper connection string when one or more users connect to a multi-user database (in versions prior to 6.x). When connecting via TCP/IP, the database path must be specified as servername:drive:/path/databasename (for servers on UNIX platforms, servername:/path/databasename); with the NetBEUI protocol, use \\servername\drive:\path\databasename. Even when connecting from the computer on which the database is located and the server is running, use the same form, with localhost in place of the server name. Mapped drives must not be used in the connection string. If you break one of these rules, the server assumes that it is working with different databases, and database corruption is guaranteed.

- Copying the database file, or otherwise accessing it at the file level, while the server is running. Executing the “shutdown” command or disconnecting the users in the usual way does not guarantee that the server is doing nothing with the database: if the sweep interval is not set to “0”, garbage collection may still run. Garbage collection generally runs immediately after the last user disconnects from the database. It usually takes several seconds, but if many DELETE or UPDATE operations were committed before it, the process may take longer.

- Using the unstable InterBase server versions 5.1-5.5. Borland officially admitted that these servers contained several errors, and the stable upgrade 5.6, which fixed them, was made freely available on its site to all users of servers 5.1-5.5 only after the certified InterBase 6 had been released.

- Exceeding the size limit of a database file (not of the database!). For versions before InterBase 6 and some InterBase 6 betas the file limit is 4 GB; for InterBase 6.5 and all releases of Firebird (1.0, 1.5, 2.0, 2.1) it is 32 TB. When the database approaches the limit, an additional file must be created.

- Running out of free disk space while working with the database.

- For Borland InterBase server versions below 6.0.1.6, exceeding the limit on the number of generators, which Borland InterBase R&D defined as follows (see Table 1):

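| Version | Page size 1024 | Page size 2048 | Page size 4096 | Page size 8192 |
|---|---|---|---|---|
| Pre 6 | 248 | 504 | 1016 | 2040 |
| 6.0.x | 124 | 257 | 508 | 102 |

Table 1: Maximum number of generators in Borland InterBase servers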

- For all Borland InterBase servers, exceeding the permissible number of transactions without performing a backup/restore. You can find out how many transactions have occurred since the database was last created (a restore creates it anew) by running the gstat utility with the -h switch: the NEXT TRANSACTION ID parameter gives this number. According to Ann W. Harrison, the critical number of transactions depends on the page size and has the following values (see Table 2):

| Database page size | Critical number of transactions |
|---|---|
| 1024 bytes | 131 596 287 |
| 2048 bytes | 265 814 016 |
| 4096 bytes | 534 249 472 |
| 8192 bytes | 1 071 120 384 |

Table 2: Critical number of transactions in Borland InterBase servers
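The check against Table 2 is easy to script. The Python sketch below assumes that the `gstat -h` output contains lines labelled `Page size` and `Next transaction` (the text calls the latter NEXT TRANSACTION ID); the function names and the sample output are illustrative only.

```python
import re
from typing import Dict

# Critical transaction counts from Table 2, keyed by page size in bytes.
CRITICAL_TRANSACTIONS = {
    1024: 131_596_287,
    2048: 265_814_016,
    4096: 534_249_472,
    8192: 1_071_120_384,
}

# Illustrative excerpt of `gstat -h` output (assumed layout: label, spaces, number).
SAMPLE = """Database header page information:
        Page size               4096
        Next transaction        267124736
"""


def parse_header(gstat_output: str) -> Dict[str, int]:
    """Extract the page size and the next transaction number."""
    values = {}
    for label, key in (("Page size", "page_size"), ("Next transaction", "next_transaction")):
        match = re.search(rf"^\s*{re.escape(label)}\s+(\d+)", gstat_output, re.MULTILINE)
        if match is None:
            raise ValueError(f"{label!r} not found in gstat output")
        values[key] = int(match.group(1))
    return values


def transaction_usage(gstat_output: str) -> float:
    """Fraction of the critical transaction count (Table 2) already used."""
    header = parse_header(gstat_output)
    limit = CRITICAL_TRANSACTIONS[header["page_size"]]  # KeyError for page sizes not in Table 2
    return header["next_transaction"] / limit
```

For the sample header (4096-byte pages, next transaction 267 124 736) the result is 0.5, that is, half of the critical count has been used and a backup/restore should be planned.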

The limitations of Borland InterBase servers listed above do not apply to Firebird servers, except for the earliest 0.x versions, which are already history. If you use the final release of Firebird 1.0 or InterBase 6.5-7.x, you need not worry about points 5, 6, 8 and 9 and should concentrate on the other causes. We will now consider the most frequent of them in detail.
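Cause 3 in the list above comes down to every client using one and the same connection string. The Python sketch below builds that string from the server name and the database path on the server, in the formats given in the text; the function and its checks are hypothetical, and it cannot detect mapped drives, so that rule still has to be followed by hand.

```python
def connection_string(server: str, path: str, protocol: str = "tcp") -> str:
    """Build the single connection string all clients should use (sketch).

    server   -- name of the database server; "localhost" when connecting
                from the server machine itself
    path     -- absolute path of the database file on the server, e.g.
                "C:/data/base.gdb" (Windows) or "/data/base.gdb" (UNIX);
                never a mapped network drive
    protocol -- "tcp" for TCP/IP or "netbeui"
    """
    is_windows_path = len(path) >= 3 and path[1] == ":" and path[2] in "/\\"
    if not (is_windows_path or path.startswith("/")):
        raise ValueError("path must be absolute on the server")
    if protocol == "tcp":
        # servername:drive:/path/databasename, or servername:/path/databasename on UNIX
        return server + ":" + path.replace("\\", "/")
    if protocol == "netbeui":
        # \\servername\drive:\path\databasename
        return "\\\\" + server + "\\" + path.replace("/", "\\")
    raise ValueError(f"unsupported protocol: {protocol!r}")
```

Generating the string in one place, rather than typing it in each client's settings, is what keeps the server from treating one file as two different databases.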

2.3. Power supply failure

When the power to the server is cut off, all data processing is interrupted in the most unexpected and (according to Murphy's law) dangerous places. As a result, information in the database may be distorted or lost. The simplest case is when all uncommitted data from client applications is lost because of the emergency shutdown. After restarting from a power failure, the server analyzes the data, notices incomplete transactions that belong to no client, and cancels all modifications made within these «dead» transactions. This behavior is normal and was intended by the InterBase developers from the beginning.

However, a power interruption is not always limited to such insignificant losses. If the server was extending the database at the moment of the power failure, there is a high probability of orphan pages in the database file (pages that are physically allocated and registered on a page inventory page (PIP), but to which no data can be written). To learn more about orphan pages, see the chapter «The structure of InterBase database».

Only the repair and modification tool gfix (discussed below) can deal with orphan pages in the database file. Orphan pages merely waste disk space; by themselves they do not cause data loss or corruption.

Power loss can also cause more serious damage. For example, after the power is cut off and the server restarted, a great amount of data may be lost, including committed data (data after whose insertion or modification a «commit transaction» command was executed). This happens because committed data are not written directly to the database file on disk; the operating system (OS) file cache is used for this. The server process gives the OS a command to write the data, and the OS assures the server that all the data have been saved to disk, while in reality they are still in the file cache. The OS doesn't hurry to flush them to disk, because it considers that there is plenty of main memory left and postpones slow disk writes until memory fills up.

2.4. Forced writes – cuts both ways

To give control over this situation, InterBase 6 provides a data write mode setting. This parameter is called forced writes (FW) and has two modes: ON (synchronous) and OFF (asynchronous). The FW mode defines how InterBase communicates with the disk. If FW is ON, synchronous writes are enabled: committed data are written to disk right after the commit command, and the server waits for the write to complete before continuing. If FW is OFF, InterBase doesn't hurry to write data to disk after a transaction commit and delegates this task to a parallel thread, while the main thread continues processing without waiting for the writes to finish. Synchronous mode is the safest and minimizes possible data loss, but it may cost some performance. Asynchronous mode increases the probability of losing a large amount of data. FW OFF is usually chosen for maximum performance, but as a result much more data is lost in a power failure than with synchronous writes. When choosing the write mode, you should decide whether a few percent of performance matter more than a few hours of work lost if the power fails unexpectedly.
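The difference between FW ON and FW OFF mirrors the difference between waiting for the OS to confirm a write and leaving the data in the OS file cache. The Python sketch below is only a file-level analogy, not InterBase's implementation; `append_record` is a hypothetical helper. `os.fsync` blocks until the OS reports the data as written to the storage device (a disk's own write cache can still undermine this on some hardware).

```python
import os


def append_record(path: str, data: bytes, forced_writes: bool) -> None:
    """Append data to a file, optionally waiting until the OS has stored it.

    forced_writes=True  ~ FW ON: return only after fsync() completes.
    forced_writes=False ~ FW OFF: the data may stay in the OS file cache
                          and be lost if the power fails before a flush.
    """
    with open(path, "ab") as f:
        f.write(data)
        f.flush()                 # hand the data from Python's buffer to the OS
        if forced_writes:
            os.fsync(f.fileno())  # wait until the OS writes it to the device
```

The synchronous variant is slower precisely because every call waits for the device, which is the performance cost the text weighs against lost work.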

Users often treat InterBase carelessly. Small organizations save on every trifle, often including the server computer, on which the DBMS server is installed together with various other server (and not only server) programs. When the machine hangs, people press RESET without much thought (this may happen several times a day). Although InterBase is very resilient to such treatment compared with other DBMSs and lets you resume work right after an emergency reboot, such use is undesirable: every faulty reboot increases the number of orphan pages, and data lose their links to each other.

This may go on for a long time, but sooner or later it will come to an end. When damaged pages appear among the PIPs or generator pages, or the database header page is corrupted, the database may never open again and becomes a big lump of disconnected data from which you can't extract a single byte of useful information.

2.5. Corruption of hard disk

Hard disk corruption leads to the loss of important database system pages and/or corruption of the links among the remaining pages. Such corruption is one of the most difficult cases, because it almost always requires low-level intervention to restore the database.

2.6. Database design mistakes

You should know about some mistakes made by database developers that can make it impossible to restore a database from a backup copy (*.gbk files created by the gbak program). First of all, this is careless use of constraints at the database level. A typical example is the NOT NULL constraint. Suppose we have a table filled with a number of records. Now we add one more column to this table with the ALTER TABLE command and specify that it must not contain undefined (NULL) values. Something like this:

ALTER TABLE sometable ADD Field INTEGER NOT NULL

Contrary to what one might expect, the server reports no error. This metadata modification will be committed without any error or warning message, which creates the illusion that everything is normal.

However, if we back up the database and try to restore it from the backup copy, we'll receive an error message during the restore (because NULLs are inserted into a column with a NOT NULL constraint), and the restore process will be interrupted. (An important note provided by Craig Stuntz: starting with InterBase 7.1, constraints are ignored by default during restore (this can be controlled by a command-line switch), and nearly any non-corrupt backup can be restored. It's always a good idea to do a test restore after making a backup, but this problem should pretty much go away in version 7.1.) Such a backup copy can't be restored. If the restore was directed to a file with the same name as the existing database (so that the working database file was overwritten during the restore), we'll lose all the information.

This is because NOT NULL constraints are implemented by system triggers that check only incoming data. During a restore, the data from the backup copy are inserted into freshly created empty tables, and that is where the inadmissible NULLs in the NOT NULL column are detected.
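Before relying on a backup made with a pre-7.1 server, you can look for the NULLs that will break the restore. This Python sketch builds one counting query per NOT NULL column; the column list is an input, and the function name is hypothetical. The metadata query in the sketch (columns with `RDB$NULL_FLAG = 1` in `RDB$RELATION_FIELDS`) is an assumption to verify against your server's system tables, and it does not cover NOT NULL inherited from domains.

```python
from typing import Iterable, List, Tuple

# Assumed metadata query listing column-level NOT NULL constraints
# (verify against your version's system tables; domain-level NOT NULL
# is stored elsewhere and is not covered here).
NOT_NULL_COLUMNS_SQL = (
    "SELECT RDB$RELATION_NAME, RDB$FIELD_NAME FROM RDB$RELATION_FIELDS "
    "WHERE RDB$NULL_FLAG = 1"
)


def null_check_queries(not_null_columns: Iterable[Tuple[str, str]]) -> List[str]:
    """Build one query per NOT NULL column that counts rows violating it.

    Names read from system tables are space-padded CHAR values, so they are
    stripped first. A non-zero count from any query means a pre-7.1 restore
    of this database would stop on that column.
    """
    return [
        f"SELECT COUNT(*) FROM {table.strip()} WHERE {column.strip()} IS NULL"
        for table, column in not_null_columns
    ]
```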

Some developers consider this InterBase behavior incorrect, but otherwise it would be impossible to add a NOT NULL field to a table that already contains data.

The idea of requiring a default value and filling existing rows with it when the column is created was widely discussed by the Firebird architects, but it was not accepted: the programmer is evidently going to fill the column by some algorithm, possibly complicated and iterative, and there is no guarantee that he would then be able to distinguish records skipped by a previous iteration from records that were never filled.

A similar problem can be caused by a garbage collection failure resulting from an incorrect database path at connection time (cause of corruption 3) or from file access to the database files while the server is working with them (cause 4): records filled entirely with NULLs can appear in some tables. Such records are very difficult to detect, because they violate the integrity constraints and a SELECT statement simply doesn't see them, although they get into the backup copy. If the restore fails for this reason, run gfix (see below), find and delete these records using non-indexed fields as search conditions, and then retry the backup and restore.

In conclusion, there are a great many causes of database corruption, and you should always be ready for the worst: that your database will be damaged for one reason or another. You must also be ready to restore it and save valuable information. Now we'll consider the precautions that keep an InterBase database safe, as well as methods of repairing damaged databases.

2.7. Precautions against InterBase database corruption

To prevent database corruption, always create backup copies (to learn more about backup, see the chapter “Backup and restore”). This is the most reliable protection against database corruption; only backup gives a 100% guarantee of database safety. As described above, a backup can turn out to be a useless copy (one that can't be restored), so a database must never be restored from a copy over the working database file, and backups must follow definite rules. First, backups must be made as often as possible; second, they must be kept in series; and third, backup copies must be checked for restorability.

Frequent backup means making a backup copy rather often, for example once every twenty-four hours: the shorter the period between backups, the less data will be lost in a failure. Serial backup means that backups accumulate and must be stored for at least a week. If possible, write backups to dedicated devices such as a tape streamer; if not, just copy them to another computer. The history of backup copies helps to discover hidden corruptions and to cope with an error that arose long ago and showed up unexpectedly. Finally, you have to check whether the backup can be restored without errors, and there is only one way to check it: a test restore. Note that a restore takes about 3 times longer than a backup, so it is difficult to validate a restore every day for large databases, because it may interrupt the users' work for a few hours (the night break may not be enough).
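The rules on frequency and series can be turned into a simple retention policy. The Python sketch below assumes one backup file per day named by date (a hypothetical naming scheme) and keeps a week of history, as the text suggests; old files should only be deleted after the newest backup has passed a test restore.

```python
from datetime import date, timedelta
from typing import List


def backup_name(base: str, day: date) -> str:
    """Hypothetical naming scheme: one .gbk file per day, e.g. base_2004-03-01.gbk."""
    return f"{base}_{day.isoformat()}.gbk"


def backups_to_delete(backup_days: List[date], today: date, keep_days: int = 7) -> List[date]:
    """Return the dates of backups older than the retention window.

    Backups from the last `keep_days` days (today included) are kept, so a
    hidden corruption can be traced back through that much history.
    """
    cutoff = today - timedelta(days=keep_days - 1)
    return sorted(d for d in backup_days if d < cutoff)
```

With daily backups from 1 to 10 March and today being 10 March, the backups of 1, 2 and 3 March are selected for deletion and the last seven are kept.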

It would be better if big organizations didn't save on “matches” and dedicated a separate computer to this purpose.

In this case, if the server must work under heavy load 24 hours a day, 7 days a week, we can use the SHADOW mechanism to take snapshots of the database and run further backup operations from this copy. Backup and restore are described in detail in the chapter “Backup and restore”. When a backup is created and the database is then restored from it, all the data in the database are re-created. This process (backup/restore, or b/r) corrects most non-fatal errors in the database caused by hard disk corruption, detects integrity problems, cleans the database of garbage (old versions and fragments of records, incomplete transactions) and can reduce the database size considerably.

Regular b/r is a guarantee of InterBase database safety. If the database is in production use, it is recommended to perform b/r every week. To tell the truth, there are examples of InterBase databases that have been used intensively for several years without backup/restore.

Nevertheless, to be on the safe side it's desirable to perform this procedure, especially as it can easily be automated (see the chapter “Backup”).

If for some reason it's impossible to perform backup/restore often, you can use the gfix tool to check and repair the database. gfix can detect and remove many errors without b/r.

2.8. Command line tool gfix

The command-line tool gfix is used for checking and repairing databases. gfix can also perform various database control tasks: changing the database dialect, setting and cancelling read-only mode, setting the cache size for a particular database, and some other important functions (described in the InterBase 6 Operations Guide [4]). gfix is run from the command line and has the following syntax:

gfix [options] db_name

Here options is a set of gfix options, and db_name is the name of the database on which the operations defined by these options are performed. Table 3 lists the gfix options related to database repair:

| Option | Description |
|---|---|
| -f[ull] | Used with -v to check all record fragments as well |
| -i[gnore] | Makes gfix ignore checksum errors during validation or sweeping |
| -m[end] | Marks damaged records as unavailable, so that they are removed during the next backup/restore. Used when preparing a corrupted database for b/r. |
| -n[o_update] | Used with -v for read-only validation of the database, without correcting corruptions |
| -pas[sword] | Sets the password for connecting to the database. (Note that the InterBase documentation gives -pa[ssword], but the abbreviation "-pa" does not work; use "-pas".) |
| -user | Sets the user name for connecting to the database |
| -v[alidate] | Validates the database, detecting errors |
| -mo[de] | Sets the database access mode. Accepts 2 values: read_write or read_only. |
| -w[rite] {sync \| async} | Turns synchronous forced writes on or off: sync turns synchronous writes on (FW ON); async turns asynchronous writes on (FW OFF) |

Here are some typical examples of using gfix:

gfix -w sync -user SYSDBA -pass masterkey firstbase.gdb

In this example we set synchronous write mode (FW ON) for our test database firstbase.gdb. (Of course, this is useful before corruption occurs.) And below is the first command you should use to check the database after corruption occurs:

gfix -v -full -user SYSDBA -pass masterkey firstbase.gdb

In this example we start checking our test database (option -v) and specify that record fragments must be checked as well (option -full). Of course, it is more convenient to set the various checking and repair options through a GUI, but we'll consider database recovery using the command-line tools. These tools are included with InterBase, and you can be sure that they behave the same on every OS that runs InterBase. It is very important that they are always at hand.

Besides, the existing tools for administering a database from a client computer use the Services API, which the InterBase Classic server architecture doesn't support. This means that such third-party products can be used only with the SuperServer architecture.

2.9. Repairing a corrupted database

Suppose there are some errors in our database. First, we have to confirm that these errors exist; second, we have to try to correct them. Follow these instructions.

Stop the InterBase server if it's still running and make a copy of the database file or files. All repair activities should be performed only on the database copy, because the chosen approach may lead to an unfortunate result, and you'll have to restart the repair procedure from the starting point. After creating the copy, perform a full database validation (including record fragments).

To do this, execute the following command:

gfix -v -full -user SYSDBA -password masterkey corruptbase.gdb

Here corruptbase.gdb is a copy of the damaged database. The command checks the database for structural corruption and lists the problems found. If such errors are detected, we have to remove the damaged data and prepare for backup/restore using the following command:

gfix -mend -user SYSDBA -password your_masterkey corruptbase.gdb

After running this command, check whether any errors remain in the database by running gfix with the options -v -full again, and when the process is over, back up the database:

gbak -b -v -ig -user SYSDBA -password masterkey corruptbase.gdb corruptbase.gbk

This command backs up the database (option -b) and prints detailed information about the backup process (option -v). Checksum errors are ignored (option -ig). You can find more about the options of the command-line tool gbak in the chapter “Backup and restore”. If the backup fails with errors, run it in another configuration:

gbak -b -v -ig -g -user SYSDBA -password masterkey corruptbase.gdb corruptbase.gbk

Here option -g switches off garbage collection during backup. This often helps to solve a problem with backup.

It may also be possible to back up the database if you first set it to read-only mode. This mode prevents any modifications from being written to the database and sometimes makes it possible to back up a damaged database. To set the database to read-only mode, use the following command:

gfix -mode read_only -user SYSDBA -password masterkey Disk:\Path\file.gdb

After that, try again to back up the database with the parameters given above.

If the backup succeeds, restore the database from the backup copy with the following command:

gbak -c -user SYSDBA -password masterkey Disk:\Path\backup.gbk Disk:\Path\newbase.gdb

When restoring the database, you may run into problems, especially while the indexes are being created. In this case, add the options -inactive and -one_at_a_time to the restore command. These options deactivate the indexes when restoring from the backup and commit the data of each table separately.
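The sequence of commands in this section can be collected in one place. The Python sketch below only builds the argument lists; the function and parameter names are hypothetical, and the default credentials are the ones used in the examples. Each list can be run with `subprocess.run(cmd, check=True)`, stopping at the first failure and inspecting the output before the next step.

```python
from typing import List


def repair_commands(db: str, backup: str, restored: str,
                    user: str = "SYSDBA", password: str = "masterkey",
                    no_garbage_collection: bool = False,
                    deactivate_indexes: bool = False) -> List[List[str]]:
    """Return the gfix/gbak command lines of this section, in order.

    `db` must be a *copy* of the damaged database, never the original.
    The two flags switch on the fallbacks described in the text.
    """
    auth = ["-user", user, "-password", password]
    backup_opts = ["-b", "-v", "-ig"] + (["-g"] if no_garbage_collection else [])
    restore_opts = ["-c"] + (["-inactive", "-one_at_a_time"] if deactivate_indexes else [])
    return [
        ["gfix", "-v", "-full"] + auth + [db],                # validate, including record fragments
        ["gfix", "-mend"] + auth + [db],                      # needed only if errors were reported
        ["gfix", "-v", "-full"] + auth + [db],                # check what is left
        ["gbak"] + backup_opts + auth + [db, backup],         # back up, ignoring checksum errors
        ["gbak"] + restore_opts + auth + [backup, restored],  # restore into a new file
    ]
```

Keeping the restore target a new file name, as here, follows the rule that a restore must never overwrite the working database.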

2.10. How you can try to extract the data from a corrupted database

The operations above may still fail to recover the database. This means the database is seriously damaged: either it cannot be restored as a whole, or recovering it requires a great deal of effort, for example modifying system metadata, using undocumented functions and so on. This is hard, long and thankless work with doubtful chances of success, so if possible, avoid it and use other methods. If the damaged database opens and allows some of the data to be read and modified, use this opportunity to save the data by copying them to a new database, and say goodbye to the old one for good.

So, before transferring the data from the old database, you need to create a destination database. If the database structure hasn't changed for a long time, you can use an old backup to extract the metadata for creating the destination database. Create the destination database from this metadata and start copying the data. The main task is to extract the data from the damaged database; placing them in the new database afterwards is not very difficult, even if the database structure has to be restored from memory. When extracting data from tables, use the following algorithm:

- First, try to execute SELECT * FROM table N. If it works, save the data you've got to an external source. It's best to store the data as a script (almost all GUIs provide this function), unless the table contains BLOB fields. If it does, the data should be saved to another database by a client program acting as an intermediary. You may have to write this simple program specifically for data recovery.

- If you fail to retrieve all the data, drop all the indexes and try again. In fact, indexes can be dropped from all tables at the very beginning of the recovery, because they won't be needed any more. Of course, if you don't have a copy of the metadata matching the corrupted database, you must keep a log of all operations you perform on the damaged source database.

- If you still can't read all the data from the table after dropping the indexes, try range queries on the primary key, that is, select a definite range of primary-key values at a time. InterBase organizes data in pages, which is why range queries can be rather effective, although this looks like a kind of shamanism. It works because it lets us keep damaged pages out of the query and read the remaining ones. You may recall our thesis that SQL defines no order for storing records. Indeed, nobody guarantees that an unordered query will return the records in the same order on repeated runs, but physically the records are stored within the database in a definite internal order, and the server will obviously not shuffle them just to comply with the SQL standard. You can try to use this internal order when extracting data from a damaged database (to learn more about data pages and their relationships, see the chapter “Structure of InterBase database”).
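The range-query technique can be automated: read the table in primary-key chunks and, whenever a chunk fails, split it in half until the unreadable keys are isolated. In the Python sketch below, `fetch` is a hypothetical callback that runs a query such as `SELECT * FROM table_n WHERE pk BETWEEN ? AND ?` (table and column names are placeholders) and raises an exception when the server reports an error; an integer primary key is assumed.

```python
from typing import Callable, List, Tuple

Row = Tuple  # one fetched row (hypothetical)


def extract_by_ranges(fetch: Callable[[int, int], List[Row]], low: int, high: int,
                      chunk: int = 10_000) -> Tuple[List[Row], List[Tuple[int, int]]]:
    """Read rows with primary key in [low, high], isolating unreadable keys.

    Returns (rows that could be read, single-key ranges that still failed).
    """
    rows: List[Row] = []
    bad: List[Tuple[int, int]] = []
    pending = [(lo, min(lo + chunk - 1, high)) for lo in range(low, high + 1, chunk)]
    while pending:
        lo, hi = pending.pop(0)
        try:
            rows.extend(fetch(lo, hi))
        except Exception:
            if lo == hi:
                bad.append((lo, hi))   # this key cannot be read at all
            else:
                mid = (lo + hi) // 2   # retry both halves before moving on
                pending[:0] = [(lo, mid), (mid + 1, hi)]
    return rows, bad
```

Halving only the failing ranges keeps the number of queries small when damaged pages are rare, while still saving every row on either side of a bad page.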

Vitaliy Barmin, one of the experienced Russian InterBase developers, reported that in this way he managed to recover up to 98% of the information from an unrecoverable database (one with a great number of damaged pages). Thus, data from a damaged database must be moved to a new database or to external storage such as SQL scripts. When copying the data, pay attention to the generator values in the damaged database: they must be saved so that the new database works correctly. If you don't have a complete copy of the metadata, extract the texts of stored procedures, triggers and constraints, and the index definitions.

2.11. Restoring a hopeless database

In general, restoring a database can be very troublesome and difficult, which is why it's better to make backup copies than to restore damaged data. But whatever happens, don't despair, because a solution can be found even in the most difficult situations. Now we'll consider 2 cases.

The first case (a classic problem): a backup that can't be restored because of NULL values in a column with a NOT NULL constraint, where the restore was run over the working file. The working file was overwritten, and the restore was interrupted by the error. As a result of these thoughtless actions, we had a large amount of useless data instead of a backup copy. But a solution was found. The programmer managed to recall which table and which column had the NOT NULL constraint. The backup file was loaded into a hex editor, and the byte combination corresponding to the definition of this column was found by searching. After numerous experiments it turned out that the NOT NULL constraint adds a 1 somewhere near the column name. In the hex editor this “1” was changed to “0”, and the backup copy was restored. After that case the programmer learned once and for all how to perform backup and restore.

The second case: the situation was catastrophic. The database was corrupted while being extended because of a lack of disk space. When the database grows, the server creates a series of critically important pages (for example, transaction inventory pages, page inventory pages and additional pages for the RDB$Pages relation) and writes them to the end of the database. As a result, the database could be opened neither by administration tools nor by the GBAK utility, and every attempt to connect produced the error message “Unexpected end of file”.

When we ran the gfix utility, strange things happened: the program ran in an endless loop. While gfix was working, the server wrote errors to its log (the InterBase.log file) at high speed (about 100 KB per second). The log file quickly filled all the free disk space, and we even had to write a program that erased it on a timer. This went on for a long time: gfix worked for more than 16 hours without any result. The log was filled with errors of the form “Page XXX doubly allocated”. The original InterBase sources (file val.#) contain a short description of this error: it appears when the same data page is used twice. Obviously, this error was the result of corruption of critically important pages.

So, after several days of unsuccessful experiments, we abandoned attempts to restore the data in standard ways and had to resort to low-level analysis of the data stored in the damaged database.

Alexander Kozelskiy, head of the Information Technologies department at East View Publications Inc., came up with the idea of how to extract information from such unrecoverable databases.

The recovery method that came out of this research was based on the fact that a database is organized in pages and the data of every table are collected in data pages. Every data page contains the identifier of the table whose data it stores. It was especially important to restore the data of several critical tables. Data from the same tables were available in an old backup copy that worked perfectly and could serve as a pattern. The pattern database was loaded into a hex editor, and we searched it for samples of the data that interested us. These data were copied to the clipboard in hexadecimal form, and then the remains of the damaged database were loaded into the editor. A byte sequence matching the pattern was found in the damaged database, and the page on which it was found was analyzed.

First we determined where the page begins, which wasn't difficult because the size of the database file is a multiple of the page size. Dividing the offset of the current byte by the page size (8192 bytes) and rounding down to an integer gives the number of the current page. Multiplying the page number by the page size gives the offset of the byte at which the current page begins. Analyzing the page header, we determined the page type (for data pages the type is 5; see the file ods.h in the original InterBase sources, and also the chapter “The structure of InterBase Database”) as well as the identifier of the required table.
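The page arithmetic above, written out for the 8192-byte page size mentioned in the text, with byte offset $b$, page size $S$, page number $p$ and page start offset $o_p$ (the offset of 1 000 000 bytes is only an illustrative value):

```latex
\[
p = \left\lfloor \frac{b}{S} \right\rfloor, \qquad o_p = p \cdot S
\]
\[
b = 1\,000\,000,\; S = 8192:\qquad
p = \left\lfloor 122.0703125 \right\rfloor = 122, \qquad
o_p = 122 \cdot 8192 = 999\,424
\]
```

The byte therefore lies $1\,000\,000 - 999\,424 = 576$ bytes into page 122, which is always less than one page size.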

Then we wrote a program that scanned the whole database, collected all the pages of the required table into one piece and wrote it to a file.
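A minimal Python sketch of such a page collector. As the text describes, it relies on the page type stored in the header, with type 5 for data pages; it further assumes that the type is the first byte of the page and that the table (relation) identifier is a little-endian 16-bit value at a fixed offset inside a data page. The default offset of 20 is a hypothetical value that must be checked against ods.h for the ODS version at hand. For simplicity the whole file is passed in as bytes; a real tool would read it page by page.

```python
from typing import Optional

DATA_PAGE = 5  # page type of data pages (see ods.h)


def collect_data_pages(db_bytes: bytes, page_size: int,
                       relation_id: Optional[int] = None,
                       relation_offset: int = 20) -> bytes:
    """Concatenate all data pages (optionally of one relation) into one blob.

    relation_offset is an assumed position of the relation id in a data
    page header; verify it for your on-disk structure version.
    """
    if len(db_bytes) % page_size:
        raise ValueError("file size is not a multiple of the page size")
    collected = bytearray()
    for start in range(0, len(db_bytes), page_size):
        page = db_bytes[start:start + page_size]
        if page[0] != DATA_PAGE:          # skip header, PIP, index and other pages
            continue
        if relation_id is not None:
            rel = int.from_bytes(page[relation_offset:relation_offset + 2], "little")
            if rel != relation_id:        # data page of another table
                continue
        collected += page
    return bytes(collected)
```

Writing the result to a file gives exactly the “one single piece” the text describes, which can then be examined record by record.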

Once we had the data we needed most, we started analyzing the contents of the selected pages. InterBase makes wide use of data compression to save space. For example, for a VARCHAR containing the string “ABC”, it stores the following sequence of values: the string length (2 bytes), in our case 0003, then the characters themselves, and then a checksum. We had to write parsers for strings and for the other database types that converted the data from hexadecimal into readable form. Using this “manual” analysis of the database contents, we managed to extract up to 80% of the information from several critical tables. Later, drawing on this experience, Oleg Kulkov and Alexey Kovyazin, one of the authors of this book, developed the InterBase Surgeon utility, which accesses the database directly, bypassing the InterBase engine, and can read and correctly interpret the data within an InterBase database.
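The text mentions compression without describing it. The Python sketch below assumes the run-length scheme commonly described for InterBase/Firebird records: a signed control byte n > 0 is followed by n literal bytes, and n < 0 means the next byte is repeated -n times. It then decodes a VARCHAR as a 2-byte length, assumed little-endian as on x86, followed by the characters, as in the “ABC” example; the checksum mentioned in the text is not handled. Both layouts, and the function names, are assumptions to check against the original sources.

```python
def rle_decompress(data: bytes) -> bytes:
    """Expand run-length-encoded record data (assumed scheme, see above)."""
    out = bytearray()
    i = 0
    while i < len(data):
        n = int.from_bytes(data[i:i + 1], "little", signed=True)  # signed control byte
        i += 1
        if n > 0:                    # n literal bytes follow
            out += data[i:i + n]
            i += n
        elif n < 0:                  # the next byte is repeated -n times
            out += data[i:i + 1] * (-n)
            i += 1
        # n == 0 is treated as padding and skipped (assumption)
    return bytes(out)


def decode_varchar(buf: bytes, offset: int = 0, encoding: str = "ascii") -> str:
    """Read a VARCHAR stored as a 2-byte little-endian length plus characters."""
    length = int.from_bytes(buf[offset:offset + 2], "little")
    return buf[offset + 2:offset + 2 + length].decode(encoding)
```

For example, the compressed bytes `05 03 00 41 42 43 FC 20` expand to the length 0003, the characters “ABC” and four padding spaces, and decode_varchar returns "ABC".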

Using InterBase Surgeon, we have managed to detect the causes of corruption and restore up to 90% of completely unrecoverable databases that could neither be opened by InterBase nor restored by standard methods.

You can download this program from its official site, www.ib-aid.com.

3. Thanks

I'd like to thank everyone who helped me create this guide: Craig Stuntz, Alexander Nevsky, Konstantin Sipachev, Tatjana Sipacheva and all the other kind and knowledgeable people of the InterBase and Firebird community.

If you have any suggestions or questions about this chapter, please feel free to email.

© 2002 Alexey Kovyazin, Serge Vostrikov.

Copyright © 2004 IBSurgeon Team. All rights reserved.

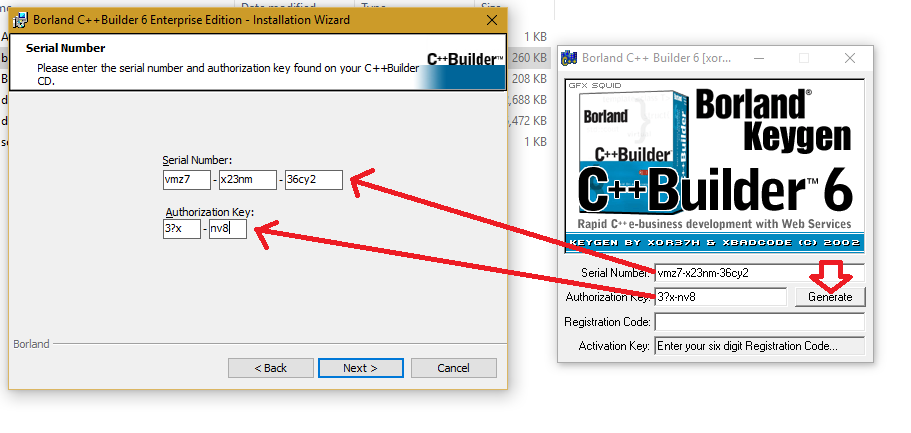

Borland Interbase 7.1 by TMiG crack keygen

All cracks and keygens are made by enthusiasts and professional reverse engineers

IMPORTANT NOTICE: All staff like keygens and crack files are made by IT university students from USA, Russia, North Korea and other countries. All the files were checked by professors and were fully verified for compatibility with Windows OS, MAC OS and *nix systems like Linux and Unix

Yes indeed, it is our loved John Travolta. He was born in sunny Gabon and his hobby was cracking and hacking.

He got his master degree in computer science at Massachusetts Institute of Technology and became one of the most popular reverse engineers.

Later he moved to Georgia and continued cracking software and at the age of 35 he finally cracked the protection system of Borland Interbase 7.1 by TMiG and made it available for download at KEYGENS.PRO

He was a fan of such great hackers as Hillary Clinton and Britney Spears. At the moment he teaches at Stanford University and doesn't forget about reversing art.

Fetching...done. Download Borland Interbase 7.1 by TMiG crack/keygen with serial number

It`s free and safe to use all cracks and keygens downloaded from KEYGENS.PRO So download Borland Interbase 7.1 by TMiG keygen then unzip it to any folder and run to crack the application. There are no viruses or any exploits on this site, you are on a crack server optimized for surfer.

Sometimes Antivirus software may give an alert while you are downloading or using cracks. In 99.909% percent of cases these alerts are false alerts.

You should know that viruses and trojans are created and distributed by the same corporations developing AntiVirus software, they just create a job for themselves. The same problem may occur when you download Borland Interba... product keygens. Again, just relax and ignore it.

The time of download page generation is more than zero seconds. Use downloaded crack staff and have a fun, but if you like the software in subject - buy it ;)...don't use cracks.

This site is running on UNIX FreeBSD machine. It is a state of the art operating system that is under BSD license and is freeware. Don't waste your time with shitty windows applications, use real staff and be cool :)

What’s New in the Borland Interbase 7.1 serial key or number?

Screen Shot

System Requirements for Borland Interbase 7.1 serial key or number

- First, download the Borland Interbase 7.1 serial key or number

-

You can download its setup from given links: