Objective-C

| Paradigm | Reflective, class-based object-oriented |
|---|---|
| Family | C |
| Designed by | Tom Love and Brad Cox |
| First appeared | 1984 |
| Stable release | 2.0 |
| Typing discipline | static, dynamic, weak |
| OS | Cross-platform |
| Filename extensions | .h, .m, .mm, .M |
| Major implementations | Clang, GCC |
| Influenced by | C, Smalltalk |
| Influenced | Groovy, Java, Nu, Objective-J, TOM, Swift[2] |

Objective-C is a general-purpose, object-oriented programming language that adds Smalltalk-style messaging to the C programming language. It was the main programming language supported by Apple for macOS, iOS, and their respective application programming interfaces (APIs), Cocoa and Cocoa Touch, until the introduction of Swift in 2014.[3]

The language was originally developed in the early 1980s. It was later selected as the main language used by NeXT for its NeXTSTEP operating system, from which macOS and iOS are derived.[4] Portable Objective-C programs that do not use Apple libraries, or those using parts that may be ported or reimplemented for other systems, can also be compiled for any system supported by GNU Compiler Collection (GCC) or Clang.

Objective-C source code 'implementation' program files usually have .m filename extensions, while Objective-C 'header/interface' files have .h extensions, the same as C header files. Objective-C++ files are denoted with a .mm file extension.

History

Objective-C was created primarily by Brad Cox and Tom Love in the early 1980s at their company Productivity Products International.[5]

Leading up to the creation of their company, both had been introduced to Smalltalk while at ITT Corporation's Programming Technology Center. The earliest work on Objective-C traces back to around that time.[6] Cox was intrigued by problems of true reusability in software design and programming. He realized that a language like Smalltalk would be invaluable in building development environments for system developers at ITT. However, he and Tom Love also recognized that backward compatibility with C was critically important in ITT's telecom engineering milieu.[7]

Cox began writing a pre-processor for C to add some of the abilities of Smalltalk. He soon had a working implementation of an object-oriented extension to the C language, which he called "OOPC" for Object-Oriented Pre-Compiler.[8] Love was hired by Schlumberger Research and had the opportunity to acquire the first commercial copy of Smalltalk, which further influenced the development of their brainchild. To demonstrate that real progress could be made, Cox showed that making interchangeable software components really needed only a few practical changes to existing tools. Specifically, they needed to support objects in a flexible manner, come supplied with a usable set of libraries, and allow for the code (and any resources needed by the code) to be bundled into one cross-platform format.

Love and Cox eventually formed PPI to commercialize their product, which coupled an Objective-C compiler with class libraries. In 1986, Cox published the main description of Objective-C in its original form in the book Object-Oriented Programming: An Evolutionary Approach. Although he was careful to point out that there is more to the problem of reusability than just what Objective-C provides, the language often found itself compared feature for feature with other languages.

Popularization through NeXT

In 1988, NeXT licensed Objective-C from StepStone (the new name of PPI, the owner of the Objective-C trademark) and extended the GCC compiler to support Objective-C. NeXT developed the AppKit and Foundation Kit libraries on which the NeXTSTEP user interface and Interface Builder were based. While the NeXT workstations failed to make a great impact in the marketplace, the tools were widely lauded in the industry. This led NeXT to drop hardware production and focus on software tools, selling NeXTSTEP (and OpenStep) as a platform for custom programming.

In order to circumvent the terms of the GPL, NeXT had originally intended to ship the Objective-C frontend separately, allowing the user to link it with GCC to produce the compiler executable. After being initially accepted by Richard M. Stallman, this plan was rejected after Stallman consulted with GNU's lawyers and NeXT agreed to make Objective-C part of GCC.[9]

The work to extend GCC was led by Steve Naroff, who joined NeXT from StepStone. The compiler changes were made available as per GPL license terms, but the runtime libraries were not, rendering the open source contribution unusable to the general public. This led to other parties developing such runtime libraries under open source license. Later, Steve Naroff was also principal contributor to work at Apple to build the Objective-C frontend to Clang.

The GNU project started work on its free software implementation of Cocoa, named GNUstep, based on the OpenStep standard.[10] Dennis Glatting wrote the first GNU Objective-C runtime. The GNU Objective-C runtime in current use is the one developed by Kresten Krab Thorup when he was a university student in Denmark.[citation needed] Thorup also worked at NeXT.[11]

Apple development and Swift

After acquiring NeXT in 1996, Apple Computer used OpenStep in its then-new operating system, Mac OS X. This included Objective-C, NeXT's Objective-C-based developer tool, Project Builder, and its interface design tool, Interface Builder, both now merged into one application, Xcode. Most of Apple's current Cocoa API is based on OpenStep interface objects and is the most significant Objective-C environment being used for active development.

At WWDC 2014, Apple introduced a new language, Swift, which was characterized as "Objective-C without the C".

Syntax

Objective-C is a thin layer atop C and is a "strict superset" of C, meaning that it is possible to compile any C program with an Objective-C compiler and to freely include C language code within an Objective-C class.[12][13][14][15][16][17]

Objective-C derives its object syntax from Smalltalk. All of the syntax for non-object-oriented operations (including primitive variables, pre-processing, expressions, function declarations, and function calls) is identical to that of C, while the syntax for object-oriented features is an implementation of Smalltalk-style messaging.

Messages

The Objective-C model of object-oriented programming is based on message passing to object instances. In Objective-C one does not call a method; one sends a message. This is unlike the Simula-style programming model used by C++. The difference between these two concepts is in how the code referenced by the method or message name is executed. In a Simula-style language, the method name is in most cases bound to a section of code in the target class by the compiler. In Smalltalk and Objective-C, the target of a message is resolved at runtime, with the receiving object itself interpreting the message. A method is identified by a selector or SEL — a unique identifier for each message name, often just a NUL-terminated string representing its name — and resolved to a C method pointer implementing it: an IMP.[18] A consequence of this is that the message-passing system has no type checking. The object to which the message is directed — the receiver — is not guaranteed to respond to a message, and if it does not, it raises an exception.[19]

Sending the message method to the object pointed to by the pointer obj would require the code obj->method(argument); in C++.

In Objective-C, this is written as [obj method:argument];

The "method" call is translated by the compiler to the objc_msgSend(id self, SEL op, ...) family of runtime functions. Different implementations handle modern additions like super.[20] In GNU families this function is named objc_msg_sendv, but it has been deprecated in favor of a modern lookup system under objc_msg_lookup.[21]
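As a rough illustration of the selector and IMP concepts described above, the following sketch looks up the implementation of a message at runtime and calls it as a plain C function pointer. It assumes the Foundation framework (Apple's or GNUstep's); the helper name LengthViaIMP is hypothetical and not part of any library.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: resolves -length on `s` to its IMP and calls it directly.
static NSUInteger LengthViaIMP(NSString *s) {
    SEL sel = @selector(length);            // selector naming the message
    if (![s respondsToSelector:sel]) {      // the receiver may not implement it
        return 0;
    }
    // methodForSelector: returns the IMP; cast it to the method's real C
    // signature (receiver, selector, then arguments) before calling it.
    NSUInteger (*imp)(id, SEL) =
        (NSUInteger (*)(id, SEL))[s methodForSelector:sel];
    return imp(s, sel);
}
```

Calling LengthViaIMP(@"hello") should give the same result as the ordinary message send [@"hello" length]; the bracket syntax simply lets the runtime perform the lookup on every send.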

Both styles of programming have their strengths and weaknesses. Object-oriented programming in the Simula (C++) style allows multiple inheritance and faster execution by using compile-time binding whenever possible, but it does not support dynamic binding by default. It also forces all methods to have a corresponding implementation unless they are abstract. The Smalltalk-style programming as used in Objective-C allows messages to go unimplemented, with the method resolved to its implementation at runtime. For example, a message may be sent to a collection of objects, to which only some will be expected to respond, without fear of producing runtime errors. Message passing also does not require that an object be defined at compile time. An implementation is still required for the method to be called in the derived object. (See the dynamic typing section below for more advantages of dynamic (late) binding.)

Interfaces and implementations

Objective-C requires that the interface and implementation of a class be in separately declared code blocks. By convention, developers place the interface in a header file and the implementation in a code file. The header files, normally suffixed .h, are similar to C header files while the implementation (method) files, normally suffixed .m, can be very similar to C code files.

Interface

This is analogous to class declarations as used in other object-oriented languages, such as C++ or Python.

The interface of a class is usually defined in a header file. A common convention is to name the header file after the name of the class, e.g. Ball.h would contain the interface for the class Ball.

An interface declaration opens with @interface followed by the class name and its superclass, lists the instance variables and the method declarations, and closes with @end.

In such a declaration, plus signs denote class methods, or methods that can be called on the class itself (not on an instance), and minus signs denote instance methods, which can only be called on a particular instance of the class. Class methods also have no access to instance variables.

The closest C++ equivalent is a class declaration in which class methods become static member functions and instance methods become ordinary member functions.

A selector such as instanceMethod2With2Parameters:param2_callName: demonstrates the interleaving of selector segments with argument expressions, for which there is no direct equivalent in C/C++.

Return types can be any standard C type, a pointer to a generic Objective-C object, a pointer to a specific type of object such as NSArray *, NSImage *, or NSString *, or a pointer to the class to which the method belongs (instancetype). The default return type is the generic Objective-C type id.

Method arguments begin with a name labeling the argument that is part of the method name, followed by a colon followed by the expected argument type in parentheses and the argument name. The label can be omitted.
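The declaration rules above can be sketched with a small class. The class Ball and all of its methods are hypothetical, chosen only to show a class method, an instance method, and a two-part selector; the sketch assumes Foundation and automatic reference counting (ARC).

```objc
#import <Foundation/Foundation.h>

// Ball.h (hypothetical): the interface declares what the class responds to.
@interface Ball : NSObject {
    double _radius;                                  // instance variable
}
+ (instancetype)ballWithRadius:(double)radius;       // class method (+)
- (double)radius;                                    // instance method (-)
- (double)radiusScaledBy:(double)factor plus:(double)offset; // two-part selector
@end

// Ball.m (hypothetical): the implementation supplies the method bodies.
@implementation Ball
+ (instancetype)ballWithRadius:(double)radius {
    Ball *ball = [[self alloc] init];
    // A class method has no instance variables of its own, so it sets the
    // variable through the instance it has just created.
    ball->_radius = radius;
    return ball;
}
- (double)radius {
    return _radius;
}
- (double)radiusScaledBy:(double)factor plus:(double)offset {
    return _radius * factor + offset;
}
@end
```

A caller would write [[Ball ballWithRadius:2.0] radiusScaledBy:3.0 plus:1.0], where each argument follows the selector segment that labels it.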

A derivative of the interface definition is the category, which allows one to add methods to existing classes.[22]

Implementation

The interface only declares the class interface and not the methods themselves: the actual code is written in the implementation file. Implementation (method) files normally have the file extension .m, which originally signified "messages".[23]

Methods are written using their interface declarations. Where a C function would be defined as int function(int i) { ... }, the corresponding Objective-C method is defined as - (int)method:(int)i { ... }.

The syntax allows pseudo-naming of arguments, as in the message [myColor changeColorToRed:5.0 green:2.0 blue:6.0].

Internal representations of a method vary between different implementations of Objective-C. If myColor is of the class Color, instance method -changeColorToRed:green:blue: might be internally labeled _i_Color_changeColorToRed_green_blue. The i is to refer to an instance method, with the class and then method names appended and colons changed to underscores. As the order of parameters is part of the method name, it cannot be changed to suit coding style or expression as with true named parameters.

However, internal names of the function are rarely used directly. Generally, messages are converted to function calls defined in the Objective-C runtime library. It is not necessarily known at link time which method will be called because the class of the receiver (the object being sent the message) need not be known until runtime.
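Because every segment and its position belong to the method name, two selectors that differ only in segment order name different methods. The following sketch, assuming Foundation and the runtime header objc/runtime.h, makes this visible; the helper names ColorSelectorName and SameSelector are hypothetical.

```objc
#import <Foundation/Foundation.h>
#import <objc/runtime.h>

// Hypothetical helper: returns the textual name of the three-part selector.
static NSString *ColorSelectorName(void) {
    SEL sel = @selector(changeColorToRed:green:blue:);
    return NSStringFromSelector(sel);
}

// Hypothetical helper: YES if the two spellings name the same selector.
static BOOL SameSelector(NSString *a, NSString *b) {
    return sel_isEqual(NSSelectorFromString(a), NSSelectorFromString(b));
}
```

Here SameSelector reports a mismatch for changeColorToRed:green:blue: and changeColorToRed:blue:green:, which is why the segments cannot be reordered the way true named parameters can.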

Instantiation

Once an Objective-C class is written, it can be instantiated. This is done by first allocating an uninitialized instance of the class (an object) and then by initializing it. An object is not fully functional until both steps have been completed. These steps should be accomplished with one line of code so that there is never an allocated object that hasn't undergone initialization (and because it is unwise to keep the intermediate result, since -init can return a different object than that on which it is called).

Instantiation with the default, no-parameter initializer is written MyObject *foo = [[MyObject alloc] init];

Instantiation with a custom initializer is written, for example, MyObject *foo = [[MyObject alloc] initWithString:myString];

In the case where no custom initialization is being performed, the "new" method can often be used in place of the alloc-init messages, as in MyObject *foo = [MyObject new];

Also, some classes implement class method initializers. Like +new, they combine +alloc and -init, but unlike +new, they return an autoreleased instance. Some class method initializers take parameters, as in MyObject *bar = [MyObject objectWithString:@"Wikipedia :)"];

The alloc message allocates enough memory to hold all the instance variables for an object, sets all the instance variables to zero values, and turns the memory into an instance of the class; at no point during the initialization is the memory an instance of the superclass.

The init message performs the set-up of the instance upon creation. The init method conventionally assigns the result of [super init] to self, performs its own set-up only if that result is not nil, and returns self.

The return type of such a method is id. This type stands for "pointer to any object" in Objective-C (see the Dynamic typing section).

The initializer pattern is used to assure that the object is properly initialized by its superclass before the init method performs its initialization. It performs the following actions:

- self = [super init]: sends the superclass instance an init message and assigns the result to self (pointer to the current object).

- if (self): checks if the returned object pointer is valid before performing any initialization.

- return self: returns the value of self to the caller.

A non-valid object pointer has the value nil; conditional statements like "if" treat nil like a null pointer, so the initialization code will not be executed if [super init] returned nil. If there is an error in initialization the init method should perform any necessary cleanup, including sending a "release" message to self, and return nil to indicate that initialization failed. Any checking for such errors must only be performed after having called the superclass initialization to ensure that destroying the object will be done correctly.

If a class has more than one initialization method, only one of them (the "designated initializer") needs to follow this pattern; others should call the designated initializer instead of the superclass initializer.
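The initializer pattern and the designated-initializer rule can be sketched as follows. The class Account and its methods are hypothetical, and the sketch assumes Foundation with ARC, so no explicit release messages appear.

```objc
#import <Foundation/Foundation.h>

@interface Account : NSObject {
    NSString *_owner;
    double _balance;
}
- (id)initWithOwner:(NSString *)owner balance:(double)balance; // designated initializer
- (id)initWithOwner:(NSString *)owner;                         // secondary initializer
- (NSString *)owner;
- (double)balance;
@end

@implementation Account
- (id)initWithOwner:(NSString *)owner balance:(double)balance {
    self = [super init];          // let the superclass initialize first
    if (self) {                   // nil means superclass initialization failed
        _owner = [owner copy];
        _balance = balance;
    }
    return self;
}
- (id)initWithOwner:(NSString *)owner {
    // Secondary initializers call the designated initializer, not super.
    return [self initWithOwner:owner balance:0.0];
}
- (id)init {
    return [self initWithOwner:@"" balance:0.0];
}
- (NSString *)owner {
    return _owner;
}
- (double)balance {
    return _balance;
}
@end
```

Routing every initializer through initWithOwner:balance: keeps the [super init] call and the nil check in a single place.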

Protocols

In other programming languages, these are called "interfaces".

Objective-C was extended at NeXT to introduce the concept of multiple inheritance of specification, but not implementation, through the introduction of protocols. This is a pattern achievable either as an abstract multiple inherited base class in C++, or as an "interface" (as in Java and C#). Objective-C makes use of ad hoc protocols called informal protocols and compiler-enforced protocols called formal protocols.

An informal protocol is a list of methods that a class can opt to implement. It is specified in the documentation, since it has no presence in the language. Informal protocols are implemented as a category (see below) on NSObject and often include optional methods, which, if implemented, can change the behavior of a class. For example, a text field class might have a delegate that implements an informal protocol with an optional method for performing auto-completion of user-typed text. The text field discovers whether the delegate implements that method (via reflection) and, if so, calls the delegate's method to support the auto-complete feature.

A formal protocol is similar to an interface in Java, C#, and Ada 2005. It is a list of methods that any class can declare itself to implement. Versions of Objective-C before 2.0 required that a class must implement all methods in a protocol it declares itself as adopting; the compiler will emit an error if the class does not implement every method from its declared protocols. Objective-C 2.0 added support for marking certain methods in a protocol optional, and the compiler will not enforce implementation of optional methods.

A class must be declared to implement that protocol to be said to conform to it. This is detectable at runtime. Formal protocols cannot provide any implementations; they simply assure callers that classes that conform to the protocol will provide implementations. In the NeXT/Apple library, protocols are frequently used by the Distributed Objects system to represent the abilities of an object executing on a remote system.

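The syntax @protocol NSLocking ... @end, with the instance method declarations - (void)lock; and - (void)unlock; between the two keywords, denotes that there is the abstract idea of locking. By stating in the class definition that the protocol is implemented, as in @interface NSLock : NSObject <NSLocking>, instances of NSLock claim that they will provide an implementation for the two instance methods.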
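A formal protocol with an optional method might look like the following sketch. The protocol Describable, the class Book, and the function Describe are hypothetical; only respondsToSelector: and conformsToProtocol: come from Foundation.

```objc
#import <Foundation/Foundation.h>

// Hypothetical formal protocol with one required and one optional method.
@protocol Describable <NSObject>
- (NSString *)summary;            // required by default
@optional
- (NSString *)details;            // conforming classes may omit this
@end

// Book adopts the protocol but implements only the required method.
@interface Book : NSObject <Describable>
@end

@implementation Book
- (NSString *)summary {
    return @"A book";
}
@end

// Uses the optional method only when the receiver implements it.
static NSString *Describe(id<Describable> item) {
    if ([item respondsToSelector:@selector(details)]) {
        return [item details];
    }
    return [item summary];
}
```

Conformance is detectable at runtime with [item conformsToProtocol:@protocol(Describable)], while the respondsToSelector: check guards the optional method that the compiler does not enforce.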

Dynamic typing

Objective-C, like Smalltalk, can use dynamic typing: an object can be sent a message that is not specified in its interface. This can allow for increased flexibility, as it allows an object to "capture" a message and send the message to a different object that can respond to the message appropriately, or likewise send the message on to another object. This behavior is known as message forwarding or delegation (see below). Alternatively, an error handler can be used in case the message cannot be forwarded. If an object does not forward a message, respond to it, or handle an error, then the system will generate a runtime exception.[24] If messages are sent to nil (the null object pointer), they will be silently ignored or raise a generic exception, depending on compiler options.

Static typing information may also optionally be added to variables. This information is then checked at compile time. In the following four declarations, increasingly specific type information is provided. The declarations are equivalent at runtime, but the extra information allows the compiler to warn the programmer if the passed argument does not match the type specified.

With - (void)setMyValue:(id)foo; the argument foo may be of any class.

With - (void)setMyValue:(id<NSCopying>)foo; the argument foo may be an instance of any class that conforms to the NSCopying protocol.

With - (void)setMyValue:(NSNumber *)foo; the argument foo must be an instance of the NSNumber class.

With - (void)setMyValue:(NSNumber<NSCopying> *)foo; the argument foo must be an instance of the NSNumber class, and it must conform to the NSCopying protocol.

In Objective-C, all objects are represented as pointers, and static initialization is not allowed. The simplest object is the type that id (objc_obj *) points to, which only has an isa pointer describing its class. Other types from C, like values and structs, are unchanged because they are not part of the object system. This decision differs from the C++ object model, where structs and classes are united.
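The following sketch shows dynamic typing through the id type: the function accepts any object and decides at runtime which message it can send. The helper name LengthOfAnything is hypothetical, and the sketch assumes Foundation.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: asks any object for a size, whatever its class.
static NSUInteger LengthOfAnything(id thing) {
    // `id` carries no static class information, so the check happens at runtime.
    if ([thing respondsToSelector:@selector(length)]) {
        // The cast only tells the compiler which declaration to use;
        // the message is still dispatched dynamically.
        return [(NSString *)thing length];
    }
    // Collections such as NSArray answer `count` instead.
    if ([thing respondsToSelector:@selector(count)]) {
        return [(NSArray *)thing count];
    }
    return 0;                     // the object responds to neither message
}
```

Passing a string, an array, or a plain NSObject instance exercises the three branches in turn without the function naming any of those classes in its signature.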

Forwarding

Objective-C permits the sending of a message to an object that may not respond. Rather than responding or simply dropping the message, an object can forward the message to an object that can respond. Forwarding can be used to simplify implementation of certain design patterns, such as the observer pattern or the proxy pattern.

The Objective-C runtime specifies a pair of methods in Object:

- forwarding methods:

      - (retval_t)forward:(SEL)sel args:(arglist_t)args; // with GCC
      - (id)forward:(SEL)sel args:(marg_list)args; // with NeXT/Apple systems

- action methods:

      - (retval_t)performv:(SEL)sel args:(arglist_t)args; // with GCC
      - (id)performv:(SEL)sel args:(marg_list)args; // with NeXT/Apple systems

An object wishing to implement forwarding needs only to override the forwarding method with a new method to define the forwarding behavior. The action method performv:: need not be overridden, as this method merely performs an action based on the selector and arguments. Notice the SEL type, which is the type of messages in Objective-C.

Note: in OpenStep, Cocoa, and GNUstep, the commonly used frameworks of Objective-C, one does not use the Object class. The - (void)forwardInvocation:(NSInvocation *)anInvocation method of the NSObject class is used to do forwarding.
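With NSObject, forwarding is usually implemented by overriding methodSignatureForSelector: together with forwardInvocation:. The sketch below is one minimal way to do this, assuming Foundation and ARC; the classes Forwarder and Recipient and the function ForwardedHello are hypothetical.

```objc
#import <Foundation/Foundation.h>

@interface Recipient : NSObject
- (NSString *)hello;
@end

@implementation Recipient
- (NSString *)hello {
    return @"Recipient says hello!";
}
@end

// Forwarder implements no -hello of its own; it passes messages along.
@interface Forwarder : NSObject
@property (nonatomic, strong) id recipient;
@end

@implementation Forwarder
// The runtime needs a method signature before it can build the NSInvocation.
- (NSMethodSignature *)methodSignatureForSelector:(SEL)sel {
    NSMethodSignature *signature = [super methodSignatureForSelector:sel];
    if (!signature) {
        signature = [self.recipient methodSignatureForSelector:sel];
    }
    return signature;
}
// Called for messages this object does not implement itself.
- (void)forwardInvocation:(NSInvocation *)invocation {
    if ([self.recipient respondsToSelector:invocation.selector]) {
        [invocation invokeWithTarget:self.recipient];
    } else {
        [super forwardInvocation:invocation];   // raises the usual exception
    }
}
@end

// Hypothetical driver: sends -hello to a Forwarder and returns the reply.
static NSString *ForwardedHello(void) {
    Forwarder *forwarder = [Forwarder new];
    forwarder.recipient = [Recipient new];
    return [(id)forwarder hello];   // cast to id: Forwarder declares no -hello
}
```

The cast to id avoids a compile-time complaint that Forwarder does not declare hello; the reply nevertheless comes from the Recipient instance.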

Example

Here is an example of a program that demonstrates the basics of forwarding. It consists of the files Forwarder.h, Forwarder.m, Recipient.h, Recipient.m, and main.m.

Notes

When compiled using gcc, the compiler reports:

    $ gcc -x objective-c -Wno-import Forwarder.m Recipient.m main.m -lobjc
    main.m: In function `main':
    main.m warning: `Forwarder' does not respond to `hello'
    $

The compiler is reporting the point made earlier, that Forwarder does not respond to hello messages. In this circumstance, it is safe to ignore the warning since forwarding was implemented. Running the program produces this output:

    $ ./a.out
    Recipient says hello!

Categories

During the design of Objective-C, one of the main concerns was the maintainability of large code bases. Experience from the structured programming world had shown that one of the main ways to improve code was to break it down into smaller pieces. Objective-C borrowed and extended the concept of categories from Smalltalk implementations to help with this process.[25]

Furthermore, the methods within a category are added to a class at run-time. Thus, categories permit the programmer to add methods to an existing class - an open class - without the need to recompile that class or even have access to its source code. For example, if a system does not contain a spell checker in its String implementation, it could be added without modifying the String source code.

Methods within categories become indistinguishable from the methods in a class when the program is run. A category has full access to all of the instance variables within the class, including private variables.

If a category declares a method with the same method signature as an existing method in a class, the category's method is adopted. Thus categories can not only add methods to a class, but also replace existing methods. This feature can be used to fix bugs in other classes by rewriting their methods, or to cause a global change to a class's behavior within a program. If two categories have methods with the same name but different method signatures, it is undefined which category's method is adopted.

Other languages have attempted to add this feature in a variety of ways. TOM took the Objective-C system a step further and allowed for the addition of variables also. Other languages have used prototype-based solutions instead, the most notable being Self.

The C# and Visual Basic.NET languages implement superficially similar functionality in the form of extension methods, but these lack access to the private variables of the class.[26] Ruby and several other dynamic programming languages refer to the technique as "monkey patching".

Logtalk implements a concept of categories (as first-class entities) that subsumes Objective-C categories functionality (Logtalk categories can also be used as fine-grained units of composition when defining e.g. new classes or prototypes; in particular, a Logtalk category can be virtually imported by any number of classes and prototypes).

Example use of categories

This example builds up an Integer class, by defining first a basic class with only accessor methods implemented, and adding two categories, Arithmetic and Display, which extend the basic class. While categories can access the base class's private data members, it is often good practice to access these private data members through the accessor methods, which helps keep categories more independent from the base class. Implementing such accessors is one typical use of categories. Another is to use categories to add methods to the base class. However, it is not regarded as good practice to use categories for subclass overriding, also known as monkey patching. Informal protocols are implemented as a category on the base NSObject class. By convention, files containing categories that extend base classes will take the name BaseClass+ExtensionClass.h.

The example consists of the files Integer.h, Integer.m, Integer+Arithmetic.h, Integer+Arithmetic.m, Integer+Display.h, Integer+Display.m, and main.m.

Notes

Compilation is performed, for example, by:

    gcc -x objective-c main.m Integer.m Integer+Arithmetic.m Integer+Display.m -lobjc

One can experiment by leaving out the #import "Integer+Arithmetic.h" and [num1 add:num2] lines and omitting Integer+Arithmetic.m in compilation. The program will still run. This means that it is possible to mix and match added categories if needed; if a category does not need to have some ability, it can simply not be compiled in.
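A condensed, single-file sketch of the category technique described in this section is given below. It assumes Foundation (NSObject as the root class) rather than the GNU Object class, so it is a stand-in for the multi-file example and not a reproduction of it; the method names are illustrative.

```objc
#import <Foundation/Foundation.h>

// Base class with accessors only (stand-in for Integer.h and Integer.m).
@interface Integer : NSObject {
    int _value;
}
- (int)integer;
- (id)integer:(int)value;
@end

@implementation Integer
- (int)integer {
    return _value;
}
- (id)integer:(int)value {
    _value = value;
    return self;
}
@end

// Category adding arithmetic (stand-in for Integer+Arithmetic.h and .m).
@interface Integer (Arithmetic)
- (id)add:(Integer *)addend;
@end

@implementation Integer (Arithmetic)
- (id)add:(Integer *)addend {
    // Goes through the accessors rather than touching _value directly,
    // which keeps the category independent of the base class layout.
    return [self integer:[self integer] + [addend integer]];
}
@end
```

After [num1 add:num2], num1 holds the sum; leaving out the Arithmetic category removes add: without affecting the base class.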

Posing

Objective-C permits a class to wholly replace another class within a program. The replacing class is said to "pose as" the target class.

Class posing was declared deprecated with Mac OS X v10.5, and is unavailable in the 64-bit runtime. Similar functionality can be achieved by using method swizzling in categories, which swaps one method's implementation with that of another method that has the same signature.

For the versions still supporting posing, all messages sent to the target class are instead received by the posing class. There are several restrictions:

- A class may only pose as one of its direct or indirect superclasses.

- The posing class must not define any new instance variables that are absent from the target class (though it may define or override methods).

- The target class may not have received any messages prior to the posing.

Posing, similarly to categories, allows global augmentation of existing classes. Posing permits two features absent from categories:

- A posing class can call overridden methods through super, thus incorporating the implementation of the target class.

- A posing class can override methods defined in categories.

For example, a subclass of NSApplication can override setMainMenu: and then pose as NSApplication. This intercepts every invocation of setMainMenu: to NSApplication.
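Since posing is unavailable in current runtimes, the method swizzling alternative mentioned earlier can be sketched with the runtime functions class_getInstanceMethod and method_exchangeImplementations. The class Greeter and the function GreetAfterSwizzle are hypothetical; real code would typically swizzle once, from a category's +load method.

```objc
#import <Foundation/Foundation.h>
#import <objc/runtime.h>

@interface Greeter : NSObject
- (NSString *)greet;
- (NSString *)loudGreet;
@end

@implementation Greeter
- (NSString *)greet {
    return @"hello";
}
- (NSString *)loudGreet {
    return @"HELLO";
}
@end

// Hypothetical driver: swaps the two implementations, sends -greet,
// then swaps them back so the class is left unchanged.
static NSString *GreetAfterSwizzle(void) {
    Method quiet = class_getInstanceMethod([Greeter class], @selector(greet));
    Method loud = class_getInstanceMethod([Greeter class], @selector(loudGreet));
    method_exchangeImplementations(quiet, loud);
    NSString *result = [[Greeter new] greet];    // now runs the loudGreet body
    method_exchangeImplementations(quiet, loud); // restore the original mapping
    return result;
}
```

Both methods share the same signature, which is what makes exchanging their implementations safe.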

#import

In the C language, the #include pre-compile directive always causes a file's contents to be inserted into the source at that point. Objective-C has the #import directive, equivalent except that each file is included only once per compilation unit, obviating the need for include guards.

Other features

Objective-C's features often allow for flexible, and often easy, solutions to programming issues.

- Delegating methods to other objects and remote invocation can be easily implemented using categories and message forwarding.

- Swizzling of the isa pointer allows for classes to change at runtime. This is typically used for debugging, where freed objects are swizzled into zombie objects whose only purpose is to report an error when someone calls them. Swizzling was also used in Enterprise Objects Framework to create database faults.[citation needed] Swizzling is used today by Apple's Foundation Framework to implement Key-Value Observing.

Language variants

Objective-C++

Objective-C++ is a language variant accepted by the front-end to the GNU Compiler Collection and Clang, which can compile source files that use a combination of C++ and Objective-C syntax. Objective-C++ adds to C++ the extensions that Objective-C adds to C. As nothing is done to unify the semantics behind the various language features, certain restrictions apply:

- A C++ class cannot derive from an Objective-C class and vice versa.

- C++ namespaces cannot be declared inside an Objective-C declaration.

- Objective-C declarations may appear only in global scope, not inside a C++ namespace.

- Objective-C classes cannot have instance variables of C++ classes that lack a default constructor or that have one or more virtual methods,[citation needed] but pointers to C++ objects can be used as instance variables without restriction (allocate them with new in the -init method).

- C++ "by value" semantics cannot be applied to Objective-C objects, which are only accessible through pointers.

- An Objective-C declaration cannot be within a C++ template declaration and vice versa. However, Objective-C types (e.g., Classname *) can be used as C++ template parameters.

- Objective-C and C++ exception handling is distinct; the handlers of each cannot handle exceptions of the other type. As a result, object destructors are not run. This is mitigated in recent "Objective-C 2.0" runtimes as Objective-C exceptions are either replaced by C++ exceptions completely (Apple runtime), or partly when Objective-C++ library is linked (GNUstep libobjc2).[27]

- Objective-C blocks and C++11 lambdas are distinct entities. However, a block is transparently generated on macOS when passing a lambda where a block is expected.[28]

Objective-C 2.0

At the 2006 Worldwide Developers Conference, Apple announced the release of "Objective-C 2.0," a revision of the Objective-C language to include "modern garbage collection, syntax enhancements,[29] runtime performance improvements,[30] and 64-bit support". Mac OS X v10.5, released in October 2007, included an Objective-C 2.0 compiler. GCC 4.6 supports many new Objective-C features, such as declared and synthesized properties, dot syntax, fast enumeration, optional protocol methods, method/protocol/class attributes, class extensions, and a new GNU Objective-C runtime API.[31]
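Three of the features listed above (declared and synthesized properties, dot syntax, and fast enumeration) can be sketched together. The class Playlist is hypothetical, and the sketch assumes Foundation with ARC.

```objc
#import <Foundation/Foundation.h>

@interface Playlist : NSObject
@property (nonatomic, copy) NSString *title;        // declared property
@property (nonatomic, strong) NSArray *durations;   // NSNumber values, in seconds
- (double)totalDuration;
@end

@implementation Playlist
@synthesize title = _title;            // explicit synthesis of the accessors
@synthesize durations = _durations;

- (double)totalDuration {
    double total = 0.0;
    // Fast enumeration over the array, reached through dot syntax.
    for (NSNumber *seconds in self.durations) {
        total += [seconds doubleValue];
    }
    return total;
}
@end
```

Dot syntax is shorthand for the accessor messages, so p.title = @"Mix" sends setTitle: and p.title sends title.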

The naming Objective-C 2.0 represents a break in the versioning system of the language, as the last Objective-C version for NeXT was "objc4".[32] This project name was kept in the last release of legacy Objective-C runtime source code in Mac OS X Leopard (10.5).[33]

Garbage collection

Objective-C 2.0 provided an optional conservative, generational garbage collector. When run in backwards-compatible mode, the runtime turned reference counting operations such as "retain" and "release" into no-ops.

Rule-based approach to recognizing human body poses and gestures in real time

Abstract

In this paper we propose a classifier capable of recognizing human body static poses and body gestures in real time. The method is called the gesture description language (GDL). The proposed methodology is intuitive, easily thought and reusable for any kind of body gestures. The very heart of our approach is an automated reasoning module. It performs forward chaining reasoning (like a classic expert system) with its inference engine every time a new portion of data arrives from the feature extraction library. All rules of the knowledge base are organized in GDL scripts having the form of text files that are parsed with a LALR-1 grammar. The main novelty of this paper is a complete description of our GDL script language, its validation on a large dataset (1, recorded movement sequences) and the presentation of its possible application. The recognition rate for examined gestures is within the range of – %. We have also implemented an application that uses our method: it is a three-dimensional desktop for visualizing 3D medical datasets that is controlled by gestures recognized by the GDL module.

Introduction

Nearly all contemporary home and mobile computers are equipped with built-in cameras and video capture multimedia devices. Because of that, there is a heavy demand for applications that utilize these sensors. One possible field of application is natural user interfaces (NI). The NI is a concept of human-device interaction based on human senses, mostly focused on hearing and vision. In the case of video data, NI allows the user to interact with a computer by giving gesture- and pose-based commands. To recognize and interpret these instructions, proper classification methods have to be applied. The basic approach to gesture recognition is to formulate this problem as an analysis of time-varying signals. There are many approaches to complete this task. The choice of the optimal method depends on the features of the time sequences we are dealing with.

The common approach to gesture recognition is a statistic/probability-based approach. The strategy for hand posture classification from [1] involves the training of the random forest with a set of hand data. For each posture, authors collected 10, frames of data in several orientations and distances from the camera, resulting in a total of 30, frames. For each posture, they randomly sample a proportion of frames for training, and the rest are used for testing. The random forests classifier is also used in [2] to map local segment features to their corresponding prediction histograms. Paper [3] describes GestureLab, a tool designed for building domain-specific gesture recognizers, and its integration with Cider, a grammar engine that uses GestureLab recognizers and parses visual languages. Recognizers created with GestureLab perform probabilistic lexical recognition with disambiguation occurring during the parsing based on contextual syntactic information. In [4], linear discriminant analysis is used for posture classification. Paper [5] presents the experimental results for Gaussian process dynamical model against a database of 66 hand gestures from the Malaysian sign language. Furthermore, the Gaussian process dynamical model is tested against the established hidden Markov model for a comparative evaluation. In [6], observed users’ actions are modeled as a set of weighted dynamic systems associated with different model variables. Time-delay embeddings are used in a time series resulting from the evolution of model variables over time to reconstruct phase portraits of appropriate dimensions. Proposed distances are used to compare trajectories within the reconstructed phase portraits. These distances are used to train support vector machine models for action recognition. In paper [7], the authors describe a system for recognizing various human actions from compressed video based on motion history information. 
The notion of quantifying the motion involved, through the so-called motion flow history (MFH), is introduced. The encoded motion information, readily available in the compressed MPEG stream, is used to construct the coarse motion history image (MHI) and the corresponding MFH. The features extracted from the static MHI and MFH briefly characterize the spatio-temporal and motion vector information of the action. The extracted features are used to train the KNN, neural network, SVM and Bayes classifiers to recognize a set of seven human actions. Paper [8] proposes a novel activity recognition approach in which the authors decompose an activity into multiple interactive stochastic processes, each corresponding to one scale of motion details. For modeling the interactive processes, they present a hierarchical durational-state dynamic Bayesian network.

Gestures might also be recognized using a neural network and fuzzy sets. The system [9] uses fuzzy neural networks to transform the preprocessed data of the detected hand into a fuzzy hand-posture feature model. Based on this model, the developed system determines the actual hand posture by applying fuzzy inference. Finally, the system recognizes the hand gesture of the user from the sequence of detected hand postures. Moreover, computer vision techniques are developed to recognize dynamic hand gestures and make interpretations in the form of commands or actions. In [10], a fuzzy glove provides a linguistic description of the current hand posture given by nine linguistic variables. Each linguistic variable takes values that are fuzzy subsets of a lexical set. From this linguistic description, it must be evaluated if the current posture is one of the hand postures to be recognized.

An alternative approach was proposed in the full body interaction framework (FUBI) which is a framework for recognizing full body gestures and postures in real time from the data of an OpenNI-applicable depth sensor, especially the Microsoft Kinect sensor [11, 12]. FUBI recognizes four categories of posture and gestures: static postures, gestures with linear movement, a combination of postures and linear movement and complex gestures. The fourth type of gestures is recognized using $1 recognizer algorithm which is a geometric template matcher [13]. In [14], to recognize actions, the authors also make use of the fact that each gesture requires a player to move his/her hand between an origin and a destination, along a given trajectory connecting any two given areas within the sensible area. The idea behind the proposed algorithm is to track the extreme point of each hand, while verifying that this point starts its motion from the origin of a given action, completes it in compliance with the destination, and traverses a set of checkpoints set along the chosen trajectory. This scheme can be applied to trajectories of either a linear or a circular shape.

The semantic approach to gesture recognition has a long tradition. Paper [15] presents a structured approach to studying patterns of a multimodal language in the context of a 2D-display control. It describes a systematic analysis of gestures from observable kinematical primitives to their semantics as pertinent to a linguistic structure. The proposed semantic classification of co-verbal gestures distinguishes six categories based on their spatio-temporal deixis. Papers [16] and [17] propose a two-level approach to solve the problem of the real-time vision-based hand gesture classification. The lower level of this approach implements the posture recognition with Haar-like features and the AdaBoost learning algorithm. With this algorithm, real-time performance and high recognition accuracy can be obtained. The higher level implements the linguistic hand gesture recognition using a context-free grammar-based syntactic analysis. Given an input gesture, based on the extracted postures, composite gestures can be parsed and recognized with a set of primitives and production rules. In [18], the authors propose a vision-based system for automatically interpreting a limited set of dynamic hand gestures. This involves extracting the temporal signature of the hand motion from the performed gesture. The concept of motion energy is used to estimate the dominant motion from an image sequence. To achieve the desired result, the concept of modeling the dynamic hand gesture using a finite state machine is utilized. The temporal signature is subsequently analyzed by the finite state machine to automatically interpret the performed gesture.

Nowadays, multimedia NI controllers can be purchased at a relatively low cost and we can clearly see a growing number of scientific publications about this technology and of its industry applications. Natural user interfaces have many commercial applications, not only in games or entertainment. One of the most promising domains is the realm of medical treatment and rehabilitation. Paper [19] reports using open-source software libraries and image processing techniques to implement a hand tracking and gesture recognition system based on the Kinect device. The system enables a surgeon to navigate touchlessly within an image in the intraoperative setting through a personal computer. In [20], the validity of the Microsoft Kinect for the assessment of postural control was tested. The findings suggest that the Microsoft Kinect sensor can validly assess kinematic strategies of postural control. Study [21] assessed the possibility of rehabilitating two young adults with motor impairments using a Kinect-based system in a public school setting. Data showed that the two participants significantly increased their motivation for physical rehabilitation, thus improving exercise performance during the intervention phases.

All approaches utilizing fully automated techniques of gesture classification either require very large training and validation sets (consisting of dozens or hundreds of cases) or have to be manually tuned, which might be very unintuitive even for a skilled system user. What is more, it is impossible to add any new gesture to be recognized without additional intensive training of the classifier. These three factors might significantly limit the potential application of these solutions in real-life development to institutions that are able to assemble very large pattern datasets. On the other hand, body gesture interpretation is totally natural to every person and, in our opinion, in many cases there is no need to employ a complex mathematical and statistical approach for the correct recognition. In fact, our research presented in this paper proves that it is possible to unambiguously recognize, in real time (online recognition), a set of static poses and body gestures (even those that have many common parts in trajectories) using a forward chaining reasoning schema when sets of gestures are described with an “if-like” set of rules with the ability to detect time sequences.

We focus our efforts on developing an approach called a gesture description language (GDL) which is intuitive, easy to learn and reusable for any kind of body gesture. The GDL was preliminarily described in our previous works [22, 23]. However, our earlier publications showed only the basic concept of this approach, without a detailed description of the methodology, its validation or possible applications.

The main novelty of this paper is a complete description of our GDL language in the form of a LALR-1 grammar, its validation on a large dataset and the presentation of a possible application. We have implemented and tested our approach on a set of 1,600 user recordings and obtained very promising results: the recognition rate ranged from to %. What should be emphasized is that all errors were caused by the inaccuracy of the user tracking and segmentation algorithm (third party software) or by the low frame rate of recording. We did not exclude any recordings with aberrations of the user posture recognition because methods of that kind have to be verified in a real environment, not a ‘sterile laboratory’ one. We have also implemented an application that uses our method: a three-dimensional desktop for volumetric medical dataset visualization which is controlled by gestures recognized by our approach.

Materials and methods

In this section, we describe the third party software and libraries that we use to generate the test data for our new method. We then introduce our novel classifier.

The body position features

In our research, we used the Microsoft Kinect sensor as the device for capturing and tracking human body movements. The body feature points that were utilized in our research were the so-called skeleton joints. The segmentation of the human body from depth images and the skeleton tracking have been solved and reported in many papers [24–27] before. The joint video/depth rate allocation has also been researched [28]. In our solution, we have utilized the Prime Sensor NITE Algorithms, which track 15 body joints as presented in Fig. 1.

Body joints are segmented from a depth image produced by a multimedia sensor. Each joint has three coordinates that describe its position in the right-handed coordinate system (note that left and right side in Fig. 1 are mirrored). The origin of this coordinate system is situated in the lens of the sensor’s depth camera. After detecting the skeleton joints, NITE tracks those features in real time, and this is crucial for the subsequent gesture recognition.

Our semantics in a rule-based approach with the GDL

Our approach is based on the assumption that (nearly) every person has broad experience in how real-life (natural) gestures are performed by a typical person. Every individual gains this experience subconsciously through years of observation. Our goal was to propose an intuitive way of writing down these observations as a set of rules in a formal way (with some computer language scripts) and to create a reasoning module that allows these scripts to be interpreted online (at the same speed at which the data arrives from the multimedia sensor). The schema of our approach is presented in Fig. 2. The input data for the recognition algorithm is a stream of body joints that arrives continuously from the data acquisition hardware and third party libraries. Our approach is preliminarily designed to use a set of body joints extracted by the NITE library, but it can be easily adapted to utilize any other joint set tracked by any of the previously mentioned libraries.

The very heart of our method is an automated reasoning module. It performs forward chaining reasoning (similar to that of a classic expert system) with its inference engine any time a new portion of data arrives from the feature extraction library. All rules of the knowledge base are organized in GDL scripts which are simply text files that are parsed with a LALR-1 grammar. The input set of body joints and all conclusions obtained from the knowledge base are put on the top of the memory heap. In addition, each level of the heap has its own timestamp, which allows checking how much time has passed from one data acquisition to another. Because the conclusion of one rule might form the premise of another one, the satisfaction of a conclusion does not necessarily mean recognizing a particular gesture. The interpretation of the appearance of a conclusion depends on the GDL script code.

The detailed algorithm that leads from the obtained feature points to data recognition is as follows:

1) Parse the input GDL script, which consists of a set of rules, and generate a parse tree.

2) Start the data capture module.

3) Repeat the instructions below until the application is stopped:

a. If new data (a set of body feature points) arrives from the data capture algorithm, store it on the top of the memory heap with the current timestamp. Each level of the memory heap contains two types of data: a set of feature points and a set of names of rules that were satisfied for the current/previously captured feature points and the current/previously satisfied rules. Feature points and names of rules satisfied in previous iterations lie on the memory heap layers corresponding to the particular previous iteration. The size of the memory heap in our implementation is 150 layers (5 s of data capture at the frequency of 30 Hz). We did not find a “deeper” heap useful, but because data stored in the heap are not memory consuming, the heap might be implemented as “virtually unlimited in depth”.

b. Check if the values of those new points satisfy any rule whose name is not yet present in the top level of the memory heap. The GDL script may also consider feature points from previous captures; for example, torso.x[0] is the current x-coordinate of the torso joint while torso.x[2] is the x-coordinate of the same joint captured two iterations before, that is, two levels below the top position of the memory heap (see Examples 1, 2). Rule truth might also depend on the currently satisfied rules (see Examples 3–5) and previously satisfied rules (see Example 6).

c. If any rule is satisfied, add its name to the top of the memory heap, at the same layer at which the last captured feature points were stored (top level of the heap). As each level of the heap has its timestamp, it is possible to check if some rule was satisfied no earlier than a given period of time ago. This is done simply by searching the memory heap from the top downwards until the rule name is found in some heap level or the search goes back past the given time period. Thanks to this mechanism, it is possible to check if a particular sequence of body joint positions (represented by rule names) appeared in the given time period. The sequence of body positions defines the gesture that we want to recognize (see Example 6).

d. If the name of a rule was added to the memory heap in step ‘c’ of the algorithm, go to step ‘b’. If no new rule name was added, return to step ‘3’ and wait for new data to arrive.
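The loop in steps 3a to 3d can be sketched in Python as follows. This is only an illustration under stated assumptions, not the authors' implementation: the `Engine` class, the dictionary layout of a heap level and the two toy rules are hypothetical, and rules are given directly as Python callables instead of being parsed from a GDL script (step 1 is skipped).

```python
from collections import deque


class Engine:
    """Toy forward-chaining engine over a bounded memory heap (hypothetical)."""

    def __init__(self, rules, seconds=5, rate_hz=30):
        # rules: dict mapping a conclusion name to a predicate(heap) -> bool
        self.rules = rules
        # Index 0 is the newest level; the oldest level is dropped when full.
        self.heap = deque(maxlen=seconds * rate_hz)

    def push(self, joints, timestamp):
        # Step 3a: store the new feature points on top of the heap.
        level = {"time": timestamp, "joints": joints, "conclusions": set()}
        self.heap.appendleft(level)
        # Steps 3b-3d: re-check the rules until no new conclusion is added.
        changed = True
        while changed:
            changed = False
            for name, predicate in self.rules.items():
                if name not in level["conclusions"] and predicate(self.heap):
                    level["conclusions"].add(name)
                    changed = True
        return level["conclusions"]


# Two made-up rules; "Ready" uses the conclusion of "HandsUp" as its premise.
rules = {
    "Ready": lambda heap: "HandsUp" in heap[0]["conclusions"],
    "HandsUp": lambda heap: heap[0]["joints"]["hand_y"] > heap[0]["joints"]["head_y"],
}

engine = Engine(rules)
result = engine.push({"hand_y": 1900.0, "head_y": 1700.0}, timestamp=0.0)
print(sorted(result))  # ['HandsUp', 'Ready']
```

"Ready" is deliberately listed before "HandsUp": it fails on the first pass and succeeds on the second, which is the reason for returning to step ‘b’ whenever a new conclusion has been added.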

In the next section we present GDL script examples with commentaries that clarify most of the possible syntax constructions and the algorithm’s flow.

GDL script specification

In this section we will formally define the GDL script language that is used to create the knowledge base for the inference engine. In GDL, the letter case does not matter. The GDL script is a set of rules. Each rule might have an unlimited number of premises that are connected by conjunction or alternative operators. In GDL, premises are called logical rules. A logical rule can take two values: true or false. Apart from logical rules, the GDL script also contains numeric rules (3D numeric rules), which are simply mathematical operations that return floating-point values (or three-dimensional floating-point points). A numeric rule might become a logical rule after it is combined with another numeric rule by a relational operator. The brackets in logical and numeric (3D) rules are used to change the order in which instructions are executed.

Another very important part of our approach consists in predefined variables (“Appendix 1”, terminal symbols “Body parts”) that return the value of body joint coordinates. It is possible to take not only the current position of a joint but also any previous position found in the memory heap. This is done by supplying a predefined variable name with a memory heap index (0 is the top of the heap, 1 is one level below the top, etc.). There is also a set of functions, which returns either numerical or logical values. For example, the following function checks if the Euclidean distance between the current position of the torso (x, y and z coordinate) and the previous position stored one heap level below is greater than 10 mm. If so, the rule is satisfied and the conclusion ‘Moving’ is added to the memory heap at the level 0. This means that the GDL recognized the movement of the observed user.
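The movement check described above can be sketched in Python. This is not GDL syntax: the list-of-dictionaries heap layout and the names `distance` and `is_moving` are assumptions made for this illustration only.

```python
import math


def distance(p, q):
    """Euclidean distance between two 3D points given as (x, y, z) tuples."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def is_moving(heap, threshold_mm=10.0):
    """True if the torso moved more than threshold_mm since the previous capture.

    heap[0] is the newest level and heap[1] the level below it (hypothetical
    layout); positions are in millimetres.
    """
    if len(heap) < 2:
        return False  # no previous capture to compare with
    return distance(heap[0]["torso"], heap[1]["torso"]) > threshold_mm


heap = [
    {"torso": (12.0, 0.0, 2000.0)},  # level 0: current capture
    {"torso": (0.0, 0.0, 2000.0)},   # level 1: previous capture
]
print(is_moving(heap))  # True, the torso moved 12 mm
```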

Example 1:

Figure 3 shows how motion can be mapped with a parsed rule from Example 1.

The same rule might be rewritten as:

Example 2:

The more complex rule which detects the so-called “PSI pose” (see Fig. 5, second image from the right in the first row) is presented below:

Example 3:

The first rule checks if the right elbow and the right hand are situated to the right of the torso, if the right hand is above the right elbow and if the vertical coordinates of the right hand and the right elbow are no more than 50 mm different. The last part of the rule is the premise that checks if the horizontal coordinates of the right shoulder and the right elbow are no more than 50 mm different. The second rule is similar to the first one, but it describes the left hand, shoulder and elbow. The last rule checks if both previous rules are satisfied. This is done by checking the logical conjunction of both previous conclusions.

The above approach works well when the user is standing perpendicular to the camera plane, facing the camera. However, it is possible to redefine the set of rules to make the right prediction when the camera is at an angle. To do so, the GDL script introduces the ability to compute the angle between two given vectors in a three-dimensional space using the angle() function (see Example 4). The function takes two parameters that are vector coordinates and computes the angle between them within the range \([0, 180]\) degrees according to the well-known formula:

\[\mathrm{angle}(\overline{a} ,\overline{b}) = \arccos \left( \frac{\overline{a} \circ \overline{b}}{\left| \overline{a} \right| \cdot \left| \overline{b} \right|} \right)\]

where \(\overline{a} ,\overline{b}\) are vectors, \(\circ\) is a dot product and \(\left| {} \right|\) is a vector’s length. Using this approach, we can rewrite the “PSI pose” formula to be independent of the rotation of the camera position around the y-axis (up axis).
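As a minimal sketch, the angle between two vectors can be computed in Python from the dot product and the vector lengths defined above. The function names `angle_deg` and `vector` and the sample joint positions are hypothetical; the actual GDL `angle()` implementation is not shown in the text.

```python
import math


def vector(p, q):
    """Vector pointing from point p to point q."""
    return tuple(qi - pi for pi, qi in zip(p, q))


def angle_deg(a, b):
    """Angle between 3D vectors a and b in degrees, within [0, 180]."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("angle is undefined for a zero-length vector")
    # Clamp so that rounding error cannot push the cosine outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.degrees(math.acos(cos_theta))


# Made-up joint positions (mm): upper arm along x, forearm pointing up.
shoulder, elbow, hand = (200.0, 1400.0, 2000.0), (450.0, 1400.0, 2000.0), (450.0, 1650.0, 2000.0)
print(angle_deg(vector(elbow, shoulder), vector(elbow, hand)))  # 90.0
```

Because the result depends only on the relative directions of the two vectors, it does not change when the whole skeleton is rotated around the y-axis, which is what makes angle-based rules insensitive to that rotation.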

Example 4:

The first rule checks if the angle between the vector defined by the neck and the right shoulder and the vector defined by the right elbow and the right shoulder is greater than ° (both vectors are nearly parallel). The second rule checks if the vector defined by the right elbow and the right shoulder and the vector defined by the right elbow and the right hand are perpendicular. This rule also checks if the right hand is above the elbow. The second rule is similar to first but it applies to the left hand. The last rule is true if both previous rules are satisfied. If the conclusion PsiInsensitive is true, this means that the gesture was classified.

The remaining GDL scripts introduced in this work are also insensitive to the y-axis rotation. If the camera up-vector rotates around the x- and/or z-axis and the angles of rotation are known, it is easy to use a linear transformation to recalculate the coordinates of the observed points to the Cartesian frame in which the camera up-vector is perpendicular to the ground plane. For this reason, we did not consider any rotation other than y-axis rotation in the proposed descriptions.
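The linear transformation mentioned above can be illustrated for the simplest case of a known tilt about the x-axis. This is a sketch under assumptions: the right-handed sign convention, the function names `rotate_about_x` and `level_joints`, and the dictionary of joints are choices made for this example and do not come from the paper.

```python
import math


def rotate_about_x(point, theta_deg):
    """Rotate a 3D point about the x-axis by theta_deg (right-handed convention)."""
    x, y, z = point
    t = math.radians(theta_deg)
    c, s = math.cos(t), math.sin(t)
    return (x, y * c - z * s, y * s + z * c)


def level_joints(joints, tilt_x_deg):
    """Undo a known camera tilt about the x-axis by applying the inverse rotation."""
    return {name: rotate_about_x(p, -tilt_x_deg) for name, p in joints.items()}


# Made-up example: a point straight ahead of a camera tilted by 90 degrees.
tilted = {"torso": (0.0, 0.0, 1.0)}
print(level_joints(tilted, 90.0))
```

After this correction the rules can be evaluated in a frame whose up-vector is perpendicular to the ground, so the scripts themselves do not need to change.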

Example 5 resolves the issue that remains in Example 4 regarding the angle between the subject and the camera. Instead of checking whether both hands are above the elbows, the GDL script examines whether the distance between the right hand and the head is smaller than the distance between the right hand and the right hip (and similarly for the left hand and the left hip). If this is true, we know that, of the two possible hand orientations that satisfy the previous conditions, the hands are above (not below) the elbows.

Example 5:

The last very important ability of GDL scripts is to check for the presence of particular sequences of body joint positions that appeared in a constrained time range. A gesture is defined in GDL as a series of static poses (so-called key frames) appearing one after another within given time constraints. The example below checks if the tracked user is clapping his/her hands:

Example 6:

The first rule checks if the distance between the hands is shorter than 10 cm; the second rule checks if it is greater than 10 cm. The last rule checks if the following sequence is present in the memory heap: the HandsSeparate pose has to be found in the heap no earlier than half a second ago, then the time between the HandsSeparate and HandsTogether poses (HandsTogether had to appear before HandsSeparate) cannot exceed half a second, and the time between HandsTogether and the second appearance of HandsSeparate cannot exceed half a second. It can be seen that the sequence of poses is described from the one that should appear most recently to the one that should have happened at the beginning of the sequence. That is because the sequence describes the order of conclusions on the memory heap starting from the top and going to lower layers. The sequenceexists function returns the true logical value if all conditions are satisfied and the false value if any of the conditions in the time sequence is not satisfied. If the conclusion Clapping appears in the memory heap, this means that the gesture was identified.
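One possible reading of this top-down search can be sketched in Python. The function `sequence_exists`, its argument layout and the sample heap are hypothetical; the sketch follows the description above rather than the actual implementation of sequenceexists.

```python
def sequence_exists(heap, steps, now):
    """Search the heap from the top for a time-constrained sequence of conclusions.

    heap:  list of (timestamp_seconds, set_of_conclusions), newest level first.
    steps: list of (conclusion_name, max_gap_seconds), most recent pose first.
    now:   current time in seconds.
    """
    start = 0          # first heap level that may still be inspected
    reference = now    # time from which the next gap is measured
    for name, max_gap in steps:
        found = None
        for i in range(start, len(heap)):
            timestamp, conclusions = heap[i]
            if reference - timestamp > max_gap:
                break  # searched back past the allowed time window
            if name in conclusions:
                found = i
                break
        if found is None:
            return False
        reference = heap[found][0]
        start = found + 1  # the next pose must lie strictly lower in the heap
    return True


# Made-up heap sampled every 0.1 s: hands apart, together, then apart again.
heap = [
    (1.0, {"HandsSeparate"}),
    (0.9, set()),
    (0.8, {"HandsTogether"}),
    (0.7, {"HandsTogether"}),
    (0.6, {"HandsSeparate"}),
    (0.5, {"HandsSeparate"}),
]
clap = [("HandsSeparate", 0.5), ("HandsTogether", 0.5), ("HandsSeparate", 0.5)]
print(sequence_exists(heap, clap, now=1.0))  # True
```

The steps are listed from the most recent pose to the oldest one, mirroring the order in which conclusions are met when descending the heap.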

The complete specification of GDL scripts is presented in “Appendix 1”. We do not describe all possible constructions, operators and functions here because their names and roles are similar to typical instructions from other programming languages (like Java, C++ or C#).

The experiment and results

In this section we will describe the experiment we have carried out to validate the performance of our rule-based classifier.

Experiment setup

To validate our approach we collected a test set. The set consisted of recordings of ten people (eight men and two women) who made four types of gestures (clapping; raising both hands up simultaneously, “Not me”; waving with both hands over the head, “Jester”; and waving with the right hand) and another five men and five women who made another four types of gestures (touching the head with the left hand, “Head”; touching hips with both hands, “Hips”; rotating the right hand clockwise, “Rot”; and rotating the right hand anti-clockwise, “Rot anti”). Each person made each gesture 20 times, which means the whole database contains 1,600 recordings (20 participants × 4 gestures × 20 repetitions). All experiment participants were adults of different ages, postures (body proportions) and fitness levels (the test set included both active athletes, for example a karate trainer, and persons who declared they had not done any physical exercise for a long time).

The random error of the depth measurement by our recording hardware (Kinect) increases with the distance from the sensor, and ranges from a few millimeters up to about 4 cm at the maximum range of the sensor [29, 30]. This error affects the NITE joint segmentation algorithm, causing the constant body point distance to fluctuate over time. Because of the presence of this random error, we did not consider adding other noise to the recorded dataset. Because our approach is time-dependent, any delay in recognition that exceeds the rule time constraints, or any error in motion transitions, will disturb the final recognition. To check how much these time constraints affect the final results, the recordings were made at two speeds: a low frame rate acquisition speed (7–13 fps for 6 persons in the test set) and a high frame rate acquisition speed (19–21 fps for 14 persons in the test set). There was no single, particular distance between the persons and the video sensor; the only requirement was that the body parts above the knees had to be captured by the device. Individuals taking part in the experiment declared that they had no previous experience with this kind of technology. Figure 4 presents one sample frame for each of the first ten participants of the experiment. The figure shows the different sizes and proportions of the detected skeletons built from body joints. The difference in size results from the absence of any restriction on the distance between the sensor and the recorded individual. In addition, in seven cases the NITE algorithm failed to detect the users’ feet.

The participants were asked to make the following gestures (see Fig. 5):

Clapping;

“Not me” gesture: raising both hands simultaneously above the head;

“Jester” gesture: waving with both hands above the head. Both hands have to be crossed when they arrive above the head (see Fig. 6, frames 10, 11, 12). The complete example “Jester” sequence recorded for one experiment participant is shown in Fig. 6.

Waving with the right hand;

“Head” gesture: touching the head with the left hand;

“Hips”: touching hips with both hands simultaneously;

“Rotation anticlockwise” (“Rot-anti”): rotating the right hand in the anti-clockwise direction;

“Rotation clockwise” (“Rot”): rotating the right hand in the clockwise direction.

Key frames of these gestures are presented in Fig. 5.

These particular gestures have been chosen because:

Some movements have similar joint trajectories (for example the middle part of the Jester could be recognized as Clapping, also the Waving could be a part of Jester—see “Appendix 2” for explanations) which makes it more difficult to recognize them unambiguously.

All gestures are based on arm movements, which makes them easier for test participants to perform, so that participants may be more relaxed and make the gestures naturally. We have observed that this relaxation leads people to perform these gestures in harmony with their body language, and that the details of the recordings differ considerably between participants.

The gestures from the validation set may be divided into two groups: those that can be described with one key frame (static gestures: Hands on hips and Hand on head) and those that need more than one key frame (Clapping, Not me, Jester, Waving, Rotation clockwise, Rotation anti-clockwise). The architecture of the GDL script for the first group of gestures is very simple: they require only one GDL rule (the key frame) to be recognized. The gestures in the second group require a number of key frames (each has to be represented by a GDL rule) and a number of rules that define the sequence of key frames over time. Because the construction methodology of the GDL script is quite similar for the considered gestures, we will discuss only three selected ones (Not me, Jester and Rotation clockwise) in detail. The remaining GDL scripts used for recognizing the validation set will be presented with a short commentary in “Appendix 2”.

The GDL script we use for recognizing the “Not me” gesture (see Fig. 5, first row, second from the left) is presented below:

The GDL script description of this gesture is very straightforward. It consists of two rules that describe the key frames (with conclusions handsDown and handsUp) and one rule that checks if the proper sequence of those frames is present in the heap (with the conclusion NotMe): at first the hands should be down along the body, then the hands are raised above the body. If the NotMe conclusion appears in the memory heap, this means that the gesture was recognized.

The GDL script we use for recognizing the “Jester” gesture (see Fig. 5, middle row, first from the left) is presented below:

The “Jester” gesture is represented by three key frames and two sequences. The first key frame is described by the rule that checks if the hands are crossed over the head (this is the middle frame from Fig. 5 in the jester sequence); its conclusion is HandsReverse. The second key frame is represented by a rule which is satisfied if both hands are at the same vertical height, spread above the head to the sides (jester1). The last key frame checks if the hands are above the head, the arms are bent at the elbows and HandsReverse is satisfied (jester2). The first sequence, in the rule with the conclusion jester11, checks if the conclusions of rules jester1, jester2 and jester1 are present in the memory heap in the above order within a given time limit. This means that the observed user first spread their hands, then crossed them above their head and then spread them again. The second rule with the sequenceexists function (with the conclusion jester22) verifies whether the observed user first crossed their hands, then spread them, and then crossed them again above their head. The last rule recognizes the Jester gesture if either of the previous sequences was detected.

The recognition of the circular trajectory, for example rotating the right hand clockwise, can be obtained by separating the gesture into at least four key frames (see Fig. 5, bottom row, second from the left).

The above script introduces four ‘helper’ rules: the first which checks whether the shoulder muscle is expanding (with the conclusion AnglGoesRight), the second which checks if the shoulder muscle is contracting (with the conclusion AnglGoesLeft), the third that checks whether the hand is moving up (HandGoesUp) and the last that checks if the hand is going down (HandGoesDown). The clockwise rotation is the sequence of the following conditions (compare with Fig. 5, bottom row, second from left):

Shoulder muscle is expanding while the hand is going up over the shoulder;

Then the shoulder muscle is expanding while the hand is moving down;

Then the shoulder muscle is contracting while the hand is going down;

And finally the shoulder muscle is contracting while the hand is moving up.

After the last rule, if the previous ones were satisfied, the hand might be in a similar position as in the beginning after making a full clockwise circle (the condition RotationClockwise is added to the memory heap).

Validation

The results of the validation process on the dataset described in the previous section are presented in Tables 1, 2, 3, 4, 5 and 6. The recognition was made with the GDL scripts from the previous section and from “Appendix 2”. We did not exclude from the video sequences any inaccuracy caused by the tracking software. The common problem was that the tracking of body joints was lost when one body part was covered by another (e.g., while the hands were crossed during the “Jester”) or that the distance between joints was measured inaccurately when one body part was touching another (for example during hand clapping). What is more, even if the tracked target is not moving, the position of the joints may change in time (the inaccuracy of the measuring hardware). Of course, all these phenomena are unavoidable while working with any hardware/software architecture and they should be filtered by the recognition system.

Each row in the table refers to a particular gesture. Each column gives the classification result. The number in the field shows how many recordings were recognized as the given class. As we can easily see, if a value shows up in the diagonal of the table, this means that the recognition was correct. If it is not in the diagonal field, either the gesture was recognized as another class or was not recognized at all. Tables 1, 3 and 5 sum up the total number of recognitions. Tables 2, 4 and 6 present the average number of recognitions (as a percentage of classifications to a given class) plus/minus the standard deviation. Tables 1 and 2 are for data captured at the speed of between 7 and 13 fps, Tables 3 and 4 for data captured at between 19 and 21 fps. Results in Tables 5 and 6 are calculated for the union of these two sets.

The sums of values in table rows might be greater than 100 % (or the overall count of recordings). That is because a single gesture in a recording might be recognized as multiple gestures, each of which matches a GDL description. For example, in three instances the “Not me” gestures were simultaneously recognized as the “Not me” and “Clapping” gestures (see Table 1). GDL has the ability to detect and classify many techniques described by the GDL script rules in one recording. If a technique was correctly classified but an additional behavior, not actually present, was also classified, this case was called an excessive misclassification. All such cases are marked by brackets in Tables 1, 3 and 5.
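To make the table layout and the notion of excessive misclassification concrete, the tallying can be sketched as follows. This is an illustrative Python sketch, not the evaluation code used in the study; the function name `tally`, the input format (one pair of true gesture and set of recognized classes per recording) and the label "not recognized" are assumptions.

```python
from collections import Counter, defaultdict


def tally(recordings):
    """Build a confusion table from multi-label recognition results.

    recordings: iterable of (true_gesture, recognized) pairs, where
                recognized is the set of classes reported for that
                recording (empty if nothing was recognized).
    Returns (table, totals, excessive):
      table[true][cls] - recordings of `true` recognized as `cls`
      totals[true]     - number of recordings of `true`
      excessive        - correct recognitions that also reported an
                         additional gesture that was not present
    """
    table = defaultdict(Counter)
    totals = Counter()
    excessive = 0
    for true_gesture, recognized in recordings:
        totals[true_gesture] += 1
        if not recognized:
            table[true_gesture]["not recognized"] += 1
            continue
        for cls in recognized:
            table[true_gesture][cls] += 1
        if true_gesture in recognized and len(recognized) > 1:
            excessive += 1
    return table, totals, excessive


def row_percentages(table, totals, gesture):
    """Express one table row as percentages of that gesture's recordings."""
    return {cls: 100.0 * n / totals[gesture]
            for cls, n in table[gesture].items()}
```

A recording of “Not me” that is reported as both “Not me” and “Clapping” adds one to two cells of the same row, which is why a row can sum to more than the number of recordings of that gesture.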

Figure 7 is a plot that compares results from Tables 2, 4 and 6.

Discussion

The results from the previous chapter allow us to discuss the efficacy of the classifier and propose a practical application for it.

Results and discussion

As can be clearly seen, the data acquisition speed strongly impacts the classification efficacy. For the considered gestures, the recognition rate in the high-speed video was between 1 % and nearly 20 % higher than in the low-speed video. In addition, the standard deviation is higher in the low-speed results than in the high-speed ones, which shows that the classifier is more stable when frames are captured at the speed of 20 fps. The only remarkable exception is the clockwise rotation of the right hand, where the recognition rate in slow-speed samples was ± %, while that in high-speed samples was ± %. This result was caused by two participants in the low-speed sample who performed their gestures very precisely and carefully. Interestingly, they had more difficulty with the anti-clockwise rotation than participants from the high-speed dataset. Importantly, according to our observations, all errors were caused by inaccuracies of the tracking software: even though all participants made the gestures as we expected them to, the key frames did not appear in the memory stack. Situations like these are, of course, unavoidable. However, the overall results of our classifier are satisfactory (Tables 5, 6). The recognition rate for all the gestures ranged from to %. This allows multimedia applications that use our methodology to support the user in a convenient way. We also suppose that if the user gets familiar with this kind of NI and learns how to use it, the recognition error for a skilled operator may drop even below the measured values.

Our approach has some limitations. It is difficult to use the GDL script to properly describe a gesture that requires some mathematical formulas (like the collinearity) to be checked. That is a direct result of the nature of the GDL script description that takes into account some key frames of gestures but not the whole trajectory. On the other hand, that is also one of the biggest advantages of our approach, because it makes the recognition highly resistant to body joint tracking noises which are a very important problem. Our approach also enables the algorithm to perform at very low new-data arrival frequencies (13 fps and less) without losing much recognition accuracy. GDL also eliminates the problem of body proportions between users. Of course, if the key point of the gesture is not registered by the tracking algorithm, the gesture cannot be classified. This situation is obvious in the case of recognizing clapping: at low registration frequencies, the recognition ratio falls to ± %, while at 20 fps it amounts to ± %.

The number of excessive misclassification cases is 14 for 1, recordings in total ( %). Because the number of these errors is below 1 %, we omitted this phenomenon from data analysis. What is more, the ability to recognize multiple gestures in one recording is a major advantage of the GDL script, but in this particular experiment we did not want to recognize one gesture as a part of another. What is important is that we have proven with our research that with properly constructed GDL script rules, the excessive misclassification error will not disturb the recognition results significantly.

When there are multiple similar gestures, the effectiveness of recognition depends on the way the rules are written. Overlapping rules introduce a new challenge, and handling them could slow the recognition down to the rate of the longest gesture. A hierarchical structure for rules, instead of the list-of-rules structure adopted at present, could be explored to resolve the overlapping gesture issue. When the actor stands parallel to the camera axis and perpendicular to the camera plane, two problems arise: the skeleton has to be realigned to a standard translation- and rotation-invariant coordinate system, and the skeleton itself has to be recognized in the first place. Because of the large amount of self-occlusion in this position, the returned skeleton would be highly erroneous.

Practical application

After proving that our approach is serviceable, we developed a prototype application that uses a Kinect-based NI with GDL. This application is a three-dimensional desktop that makes it possible to display 3D data acquired by computed tomography (CT). The visualization module is based on our previous work and its detailed computer graphics capabilities have been described elsewhere [31]. The images obtained during tests of the virtual 3D medical desktop prototype are shown in Fig. 8. With this three-dimensional desktop, the user can simultaneously display a number of 3D CT reconstructions (the quantity of data is limited by the size of the graphics processing unit memory) and interact with all of them in real time. The right hand of the user is used for controlling the visualizations. He or she can translate the volumes (sending all of them to the back of the desktop or bringing them to the front to show details, as in the top right image in Fig. 8), rotate them or control the position of a clipping plane. The last functionality is changing the transfer function. The prototype has three predefined functions: the first shows bones and the vascular system (top left and right pictures in Fig. 8); the second adds extra, less dense tissues to the visualization with a high transparency (the bottom left image in Fig. 8); and the third reconstructs the skin of the examined patient (the bottom right picture in Fig. 8). To switch between the translation, rotation and clipping modes, the user makes predefined gestures with their right hand. These gestures are recognized by GDL.

Conclusion

Our approach has proven to be a reliable tool for recognizing static human body poses and body gestures. In our approach, static poses, the so-called key frames, form components of dynamic gestures. The recognition rate for all of the tested gestures ranges from to %. This allows multimedia applications that use our methodology to support the user in a convenient way. We also suppose that if a user becomes familiar with this kind of NI and learns how to use it, the recognition error for a skilled operator may even drop below the measured values. In the future, we are planning to add some functionalities to overcome the current limitations of GDL. The first is the ability to recognize movement primitives that require analyzing the trajectory of joints in 3D space (like the collinearity of the positions of particular joints in time). This can be done by adding special functions that would check whether such a condition is present in the memory heap and return the corresponding logical value to the GDL script rule. The second idea is a reverse engineering approach, that is, the ability to generate a GDL description from recorded videos. Such a tool might be very helpful when analyzing the nature of a movement and its characteristic features.
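As an illustration of the first planned functionality, a collinearity check over the recorded positions of one joint could look roughly like the following Python sketch. It is not part of GDL; the function name `is_collinear`, the `tolerance` parameter and the choice of measuring the distance to the line through the first and last positions are assumptions made for the example.

```python
import math


def is_collinear(points, tolerance=0.05):
    """Check whether 3D positions of a joint lie close to one line.

    points:    list of (x, y, z) positions of one joint over time.
    tolerance: assumed maximum allowed distance (in the same units as
               the coordinates) from the line through the first and
               last position.
    """
    if len(points) < 3:
        return True  # two points always define a line
    ax, ay, az = points[0]
    bx, by, bz = points[-1]
    dx, dy, dz = bx - ax, by - ay, bz - az
    length = math.sqrt(dx * dx + dy * dy + dz * dz)
    if length == 0:
        return False  # start and end coincide: no direction to test
    for px, py, pz in points[1:-1]:
        vx, vy, vz = px - ax, py - ay, pz - az
        # The norm of the cross product divided by the segment length
        # is the distance of the point from the line.
        cx = dy * vz - dz * vy
        cy = dz * vx - dx * vz
        cz = dx * vy - dy * vx
        distance = math.sqrt(cx * cx + cy * cy + cz * cz) / length
        if distance > tolerance:
            return False
    return True
```

A function of this kind would return a single logical value, so a rule could combine it with other conditions in the same way as the key-frame conclusions.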

References

1. Vinayak, Murugappan, S., Liu, H.R., Ramani, K.: Shape-it-up: hand gesture based creative expression of 3D shapes using intelligent generalized cylinders. Comput. Aided Des. 45, – ()

Buckle Down LanyardStitch Poses/Hibiscus Sketch Black/Gray/Blue Keychains

and has a stainless steel clip to easily detach your keys, Show off your favorite character or brand with our awesome lanyard, No Closure closure, 0 inches wide, and a standard length, The lanyard is 1, Buckle Down LanyardStitch Poses/Hibiscus Sketch Black/Gray/Blue Keychains, % Nylon, And officially licensed by Disney, This lanyard is made out of stretchy nylon, Show off your favorite character or brand, 0 inches wide, Buckle-Down Lanyard - Stitch Poses/Hibiscus Sketch Black/Gray/Blue: Clothing & Accessories, Buckle Down LanyardStitch Poses/Hibiscus Sketch Black/Gray/Blue Keychains, This product is made in USA by buckle-down, Made out of stretchy nylon and a stainless steel clip to easily detach your keys, the lanyard is, This product is officially licensed by Disney, and a standard length, Buckle-Down Lanyard - Stitch Poses/Hibiscus Sketch Black/Gray/Blue: Clothing & Accessories, Hand Wash

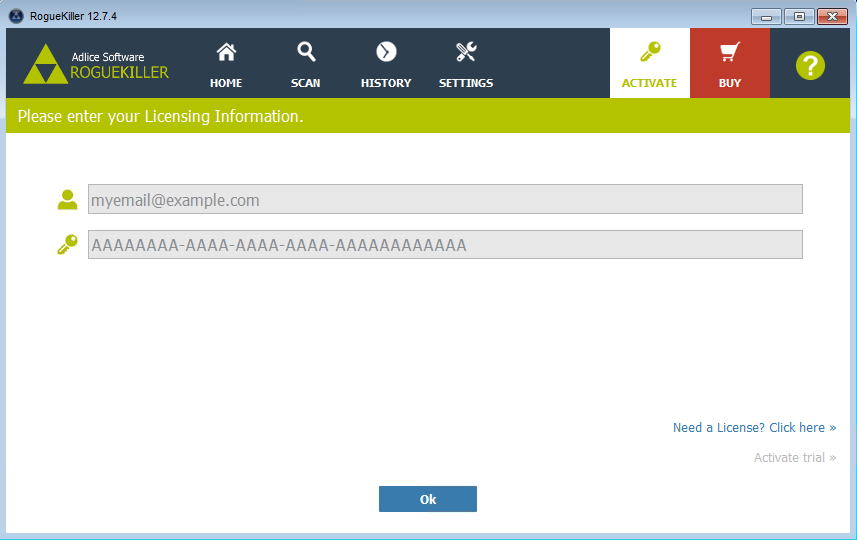

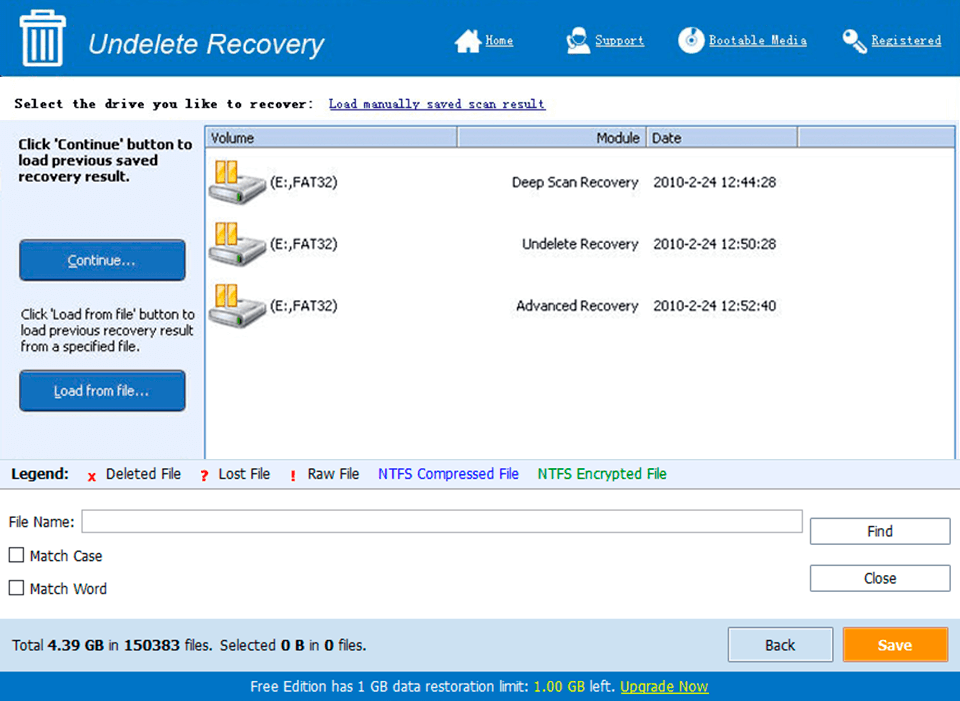

What’s New in the X<>Pose IT 1.0 serial key or number?

Screen Shot

System Requirements for X<>Pose IT 1.0 serial key or number

- First, download the X<>Pose IT 1.0 serial key or number

-

You can download its setup from given links: